20 Sep

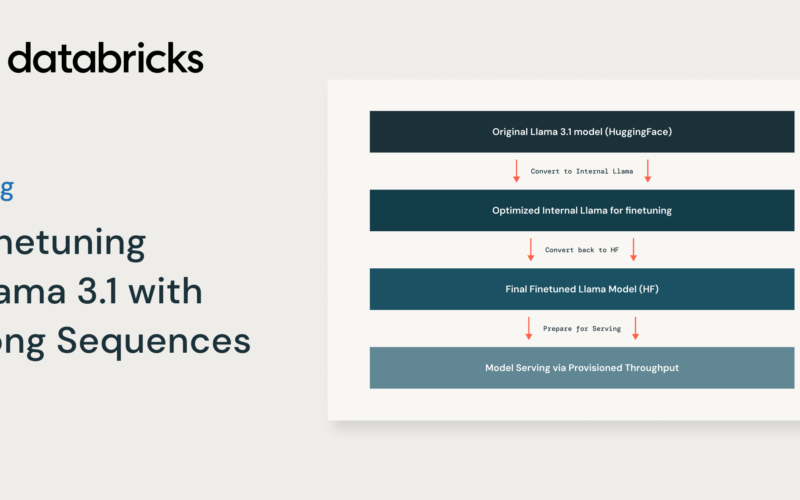

We are excited to announce that Mosaic AI Model Training now supports the full context length of 131K tokens when fine-tuning the Meta Llama 3.1 model family. With this new capability, Databricks customers can build even higher-quality Retrieval Augmented Generation (RAG) or tool-use systems by using long-context enterprise data to create specialized models.

An LLM's context length sets the maximum size of its input prompt. Our customers are often limited by short context lengths, especially in use cases like RAG and multi-document analysis. Meta Llama 3.1 models have a long context length of 131K tokens. For comparison,…