24

May

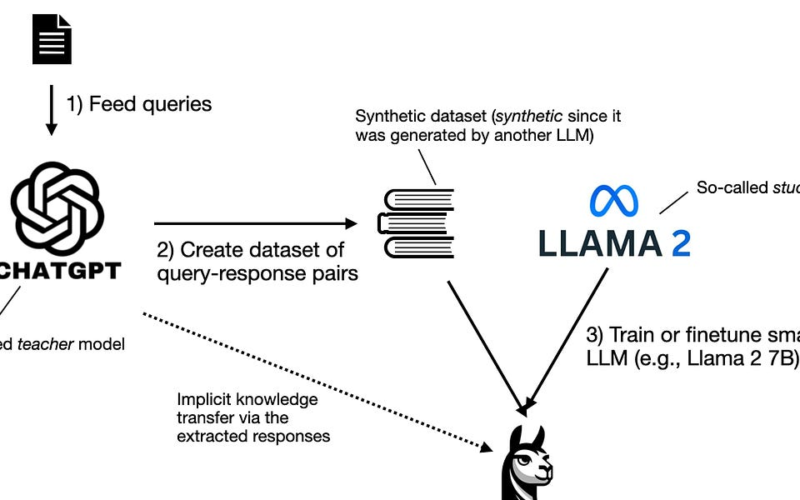

This month, I want to focus on three papers that address three distinct problem categories of Large Language Models (LLMs):

1. Reducing hallucinations.
2. Enhancing the reasoning capabilities of small, openly available models.
3. Deepening our understanding of, and potentially simplifying, the transformer architecture.

Reducing hallucinations is important because, while LLMs like GPT-4 are widely used for knowledge generation, they can still produce plausible yet inaccurate information.

Improving the reasoning capabilities of smaller models is also important. Right now, ChatGPT & GPT-4 (vs. private or personal LLMs) are still our go-to when it comes to many tasks. Enhancing the reasoning abilities of these smaller models is one…