26 Jul

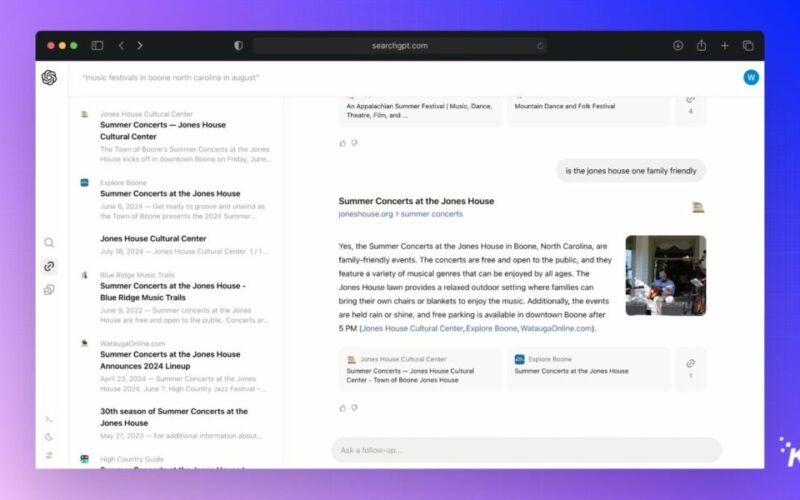

(Anders78/Shutterstock)

The advent of generative AI has supercharged the world’s appetite for data, especially high-quality data of known provenance. However, as large language models (LLMs) get bigger, experts are warning that we may be running out of data to train them. One of the big shifts that occurred with transformer models, which were invented by Google in 2017, is the use of unsupervised learning. Instead of training an AI model in a supervised fashion on smaller amounts of higher-quality, human-curated data, the use of unsupervised training with transformer models opened AI up to the vast amounts of data of…