26 May

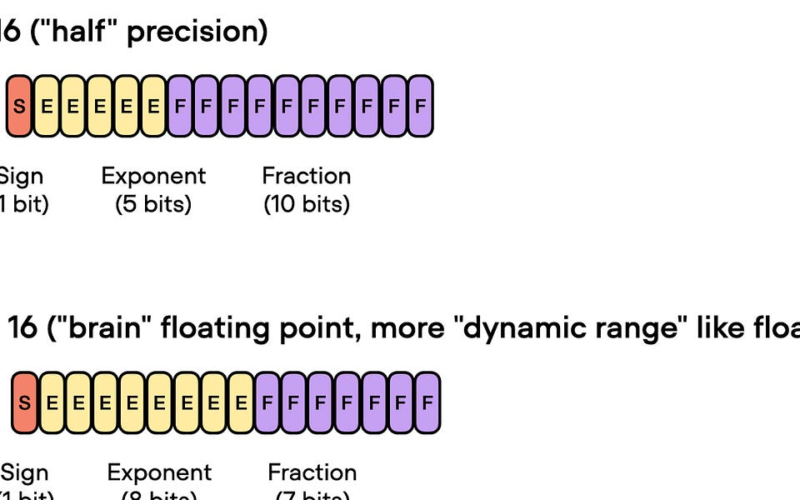

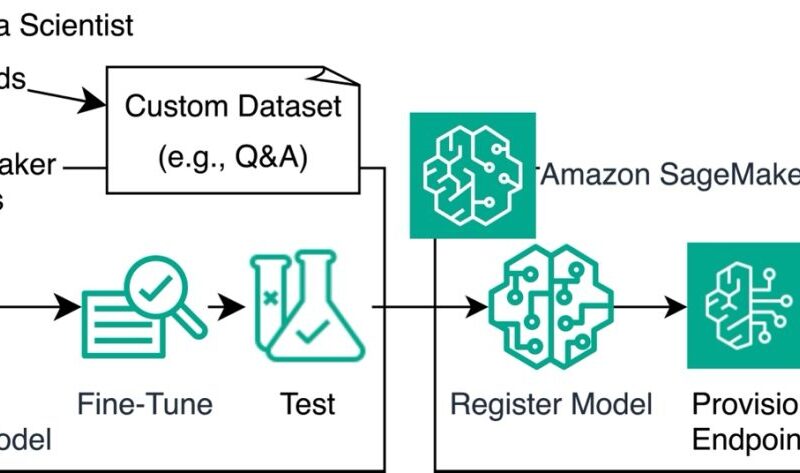

Because the regular Ahead of AI #11: New Foundation Models article was already quite long, I removed some interesting tidbits about the Llama 2 weights from the main newsletter. However, it might be nice to include those as a small bonus for the supporters of Ahead of AI. Thanks again for the kind support!

In this short(er) article, we will briefly examine the Llama 2 weights and the implications of hosting them using different floating point precisions. The original Llama 2 weights are hosted by Meta, but the Hugging Face (HF) model hub also hosts the Llama 2 weights for added…
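To get a rough feel for why the floating point precision matters when hosting weights, here is a minimal back-of-the-envelope sketch. It only assumes the standard storage sizes (4 bytes per value for float32, 2 bytes for float16 and bfloat16) and uses nominal parameter counts rounded from the model names (7B, 13B, 70B), so the numbers are approximations rather than exact checkpoint sizes. The helper name `weight_size_gb` is hypothetical and used purely for illustration.

```python
# Bytes needed to store a single parameter in each floating point format.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}

# Assumption: nominal parameter counts rounded from the model names,
# not the exact counts of the released checkpoints.
NOMINAL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_size_gb(num_params, dtype):
    """Approximate raw weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, num_params in NOMINAL_PARAMS.items():
    sizes = ", ".join(
        f"{dtype}: ~{weight_size_gb(num_params, dtype):.0f} GB"
        for dtype in BYTES_PER_PARAM
    )
    print(f"Llama 2 {name} -> {sizes}")
```

Note that float16 and bfloat16 take up the same amount of space; they differ in how the 16 bits are split between exponent and mantissa, not in storage size.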