25 May

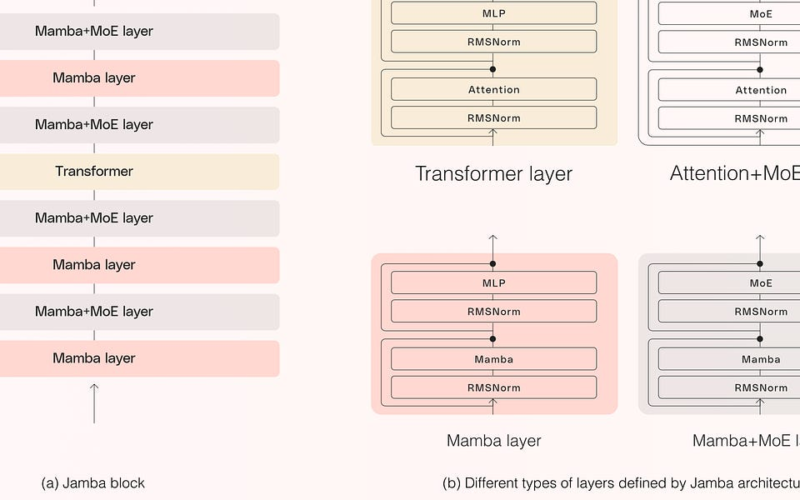

The New Breed of Open Mixture-of-Experts (MoE) Models

In a push to beat the closed-box AI models from the AI Titans, many startups and research orgs have embarked on releasing open MoE-based models. This new breed of MoE-based models introduces many clever architectural tricks and seeks to balance training cost efficiency, output quality, inference performance, and much more. For an excellent introduction to MoEs, check out this long post by the Hugging Face team: Mixture of Experts Explained. We're starting to see several open MoE-based models achieving near-SOTA or SOTA performance compared to, e.g., OpenAI GPT-4 and Google Gemini 1.5 Pro.…
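Much of the cost efficiency behind MoE models comes from sparse routing. A small router scores every expert for each token, but only a few experts are actually run. Below is a minimal pure-Python sketch of generic top-k softmax gating, a pattern many MoE designs use. It is not the routing scheme of any particular model mentioned here. The names `top_k_gating` and `moe_layer`, the dot-product router, and the toy "experts" (which just scale their input) are hypothetical, for illustration only.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def top_k_gating(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k highest logits.

    Weights come from a softmax over only the selected logits, so they sum to 1.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(
    x: Vector,
    router: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Hypothetical sparse MoE layer: route one token vector to k experts."""
    # Router (an assumption for this sketch): one logit per expert, computed
    # as the dot product of that expert's router weights with the token vector.
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router]
    out = [0.0] * len(x)
    # Only the k selected experts are evaluated; all others are skipped,
    # which is where the compute savings over a dense layer come from.
    for idx, weight in top_k_gating(logits, k):
        y = experts[idx](x)
        out = [o + weight * y_j for o, y_j in zip(out, y)]
    return out


# Toy usage: four "experts" that scale their input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
x = [1.0, 2.0]
y = moe_layer(x, router, experts, k=2)
print(y)
```

Here the router logits are `[1, 2, 3, -3]`, so only experts 2 and 1 run. Their outputs are blended with softmax weights of roughly 0.73 and 0.27. Production MoE layers usually add refinements such as load-balancing losses and expert capacity limits. Those are omitted here to keep the routing idea visible.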