23 May

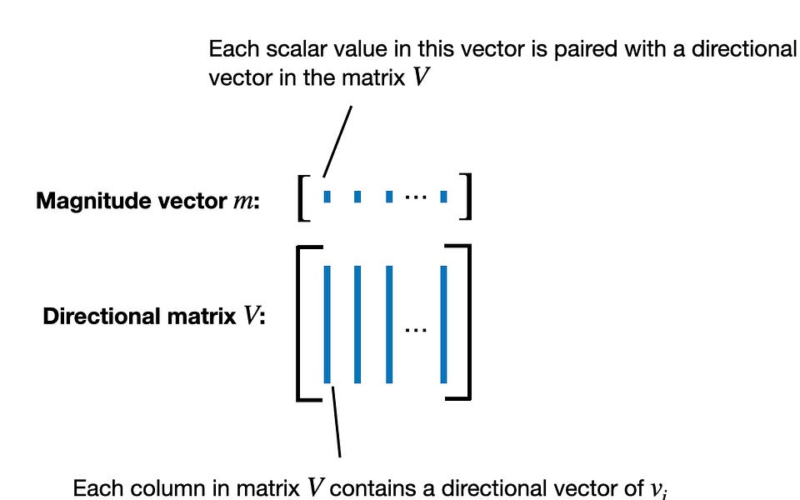

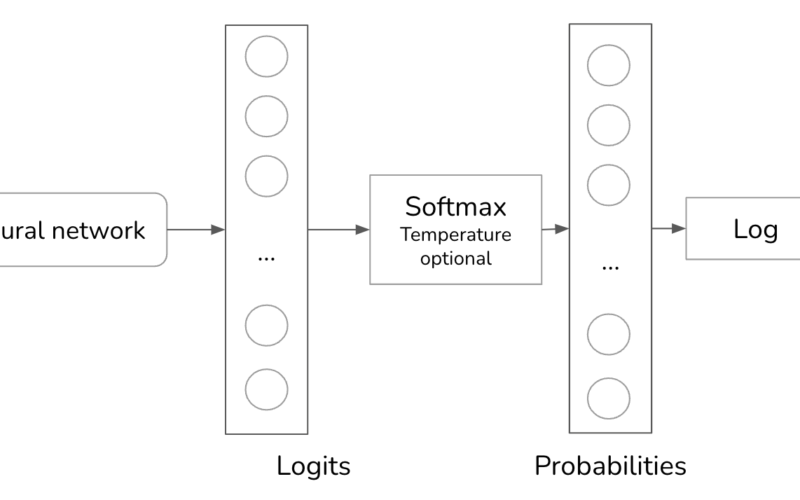

Low-rank adaptation (LoRA) is a machine learning technique that adapts a pretrained model (for example, an LLM or vision transformer) to a specific, often smaller, dataset by training only a small number of low-rank parameters instead of updating all of the model's weights. This matters because it makes finetuning large models on task-specific data efficient, substantially reducing the compute and time required.

Last week, researchers proposed DoRA: Weight-Decomposed Low-Rank Adaptation, a new alternative to LoRA, which may outperform LoRA by a large margin.

To understand how these methods work, we will implement both LoRA and DoRA in PyTorch from scratch…
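To get a feel for where the savings come from, here is a back-of-the-envelope sketch in plain Python. It assumes the standard LoRA formulation, in which a frozen weight matrix `W` of shape `d_in × d_out` is left untouched and only two small factors, `A` (`d_in × rank`) and `B` (`rank × d_out`), are trained. The layer size and rank below are hypothetical values picked for illustration, not numbers from any particular model.

```python
def full_param_count(d_in: int, d_out: int) -> int:
    """Weights in a dense d_in x d_out matrix W (bias ignored)."""
    return d_in * d_out


def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights when W is frozen and only the low-rank
    factors A (d_in x rank) and B (rank x d_out) are trained."""
    return d_in * rank + rank * d_out


# Hypothetical layer size and rank, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 8

full = full_param_count(d_in, d_out)
lora = lora_param_count(d_in, d_out, rank)

print(f"full finetuning: {full:,} trainable weights")
print(f"LoRA (rank {rank}): {lora:,} trainable weights")
print(f"LoRA / full: {lora / full:.2%}")
```

Because the LoRA count grows linearly with the rank rather than with the product of the layer dimensions, a small rank keeps the number of trainable weights to a small fraction of the original layer.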