11 Oct

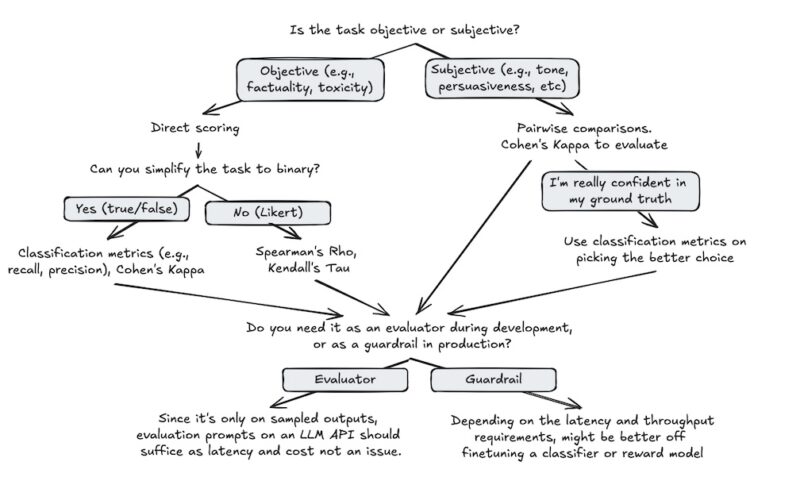

Can we train Transformers to focus more on what's important and less on irrelevant details?

In this post, we'll explore a new architecture called the Differential Transformer. It's designed to enhance the attention mechanism in Transformers (“differential” here refers to subtraction, btw, not to differential equations), helping models pay more attention to relevant information while reducing the influence of noise.

By the way, you can check out a short video summary of this paper and many others on the new YouTube channel!

Transformers have become a cornerstone in language modeling and natural language processing. They use an attention mechanism to weigh the importance of…
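To make the “subtraction” idea concrete, here is a minimal sketch that contrasts ordinary softmax attention with a differential version. It assumes the formulation from the Differential Transformer paper: two attention maps are computed from separate query/key pairs, and the second map, scaled by a factor λ, is subtracted from the first before it is applied to the values. Everything else here is a simplifying assumption for illustration: a single head, random matrices standing in for learned projections, and λ fixed at a hypothetical 0.8 (the real model learns it and includes further components not shown). The helper names (`attention_map`, `differential_attention`, etc.) are hypothetical, and the code is plain Python so it runs without dependencies.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) matrix and a (k x m) matrix."""
    inner = len(b)
    return [[sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(scores: Matrix) -> Matrix:
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    result = []
    for row in scores:
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def attention_map(q: Matrix, k: Matrix) -> Matrix:
    """Single-head attention weights: softmax(Q K^T / sqrt(d))."""
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))
    return softmax_rows([[s / scale for s in row] for row in scores])


def standard_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Ordinary scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    return matmul(attention_map(q, k), v)


def differential_attention(q1: Matrix, k1: Matrix, q2: Matrix, k2: Matrix,
                           v: Matrix, lam: float):
    """(softmax(Q1 K1^T / sqrt(d)) - lam * softmax(Q2 K2^T / sqrt(d))) V.

    Assumption for this sketch: lam is a fixed scalar passed in by the caller,
    whereas the actual model learns it. Returns (output, differential weights).
    """
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    # Subtract the scaled second map from the first, entry by entry.
    diff = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    return matmul(diff, v), diff


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Toy example: 4 tokens, head dimension 3, random stand-ins for learned projections.
rng = random.Random(0)
n, d = 4, 3
q1, k1, q2, k2, v = (random_matrix(n, d, rng) for _ in range(5))
lam = 0.8  # hypothetical fixed value, chosen only for illustration

out, diff = differential_attention(q1, k1, q2, k2, v, lam)
# Each softmax row sums to 1, so each differential row sums to 1 - lam.
print([round(sum(row), 6) for row in diff])  # → [0.2, 0.2, 0.2, 0.2]
```

Note that, unlike a softmax map, the differential weights can be negative, and weight that both maps assign in the same way is shrunk by the subtraction. In the extreme case where the two maps are identical, every weight is simply scaled by 1 − λ. That is the intuition behind “reducing the influence of noise”: attention that both maps agree on gets damped, while what survives is driven by where the two maps differ.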