23 May

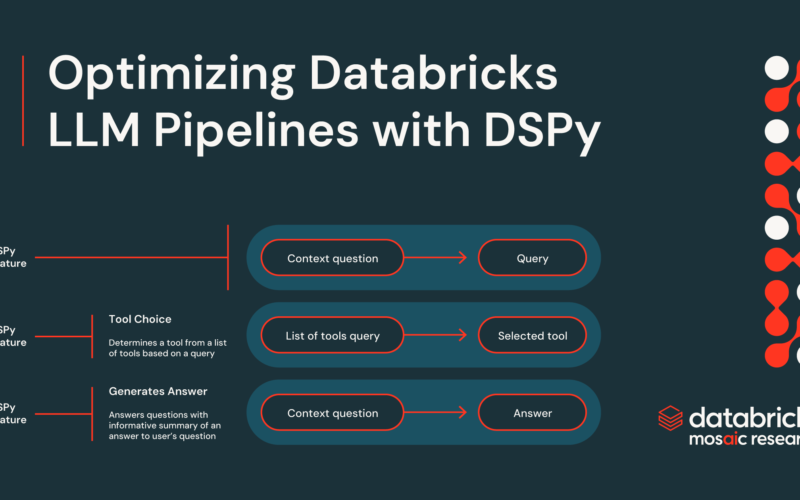

Hyperparameter optimization is an integral part of machine learning: it searches for the set of hyperparameter values that gives the best model performance. Grid search and random search are popular hyperparameter tuning methods. Grid search evaluates every combination on a predefined grid, and random search samples combinations at random; neither uses the results of earlier trials, which makes both time-consuming and inefficient for large search spaces and larger datasets. Bayesian optimization instead takes the model performance observed for previous hyperparameter combinations into account when choosing the next set of hyperparameters to evaluate. Optuna is a popular tool for Bayesian hyperparameter optimization. It provides easy-to-use algorithms, automatic algorithm selection, integrations with a…
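To make the contrast between these search strategies concrete, here is a minimal, standard-library-only sketch. Everything in it is an assumption for illustration: `toy_loss` is a made-up stand-in for a real validation loss, the parameter ranges are arbitrary, and `history_guided_search` is a deliberately simple heuristic that reuses past results. It is not Bayesian optimization and not Optuna's algorithm; it only illustrates the idea of letting earlier trials inform the next one.

```python
import itertools
import random


def toy_loss(lr, depth):
    """Hypothetical validation loss with its minimum at lr=0.1, depth=6."""
    return (lr - 0.1) ** 2 * 100 + (depth - 6) ** 2 * 0.05


def grid_search(lrs, depths):
    # Evaluates every combination on the grid; earlier results are never used.
    trials = [((lr, d), toy_loss(lr, d)) for lr, d in itertools.product(lrs, depths)]
    return min(trials, key=lambda t: t[1]), len(trials)


def random_search(n_trials, rng):
    # Each draw is independent of all previous trials.
    trials = []
    for _ in range(n_trials):
        lr, d = rng.uniform(0.001, 0.5), rng.randint(1, 12)
        trials.append(((lr, d), toy_loss(lr, d)))
    return min(trials, key=lambda t: t[1]), len(trials)


def history_guided_search(n_trials, rng, n_startup=5):
    # Illustrative stand-in for a sequential method (NOT Bayesian optimization):
    # after a few random trials, propose points near the best one seen so far.
    trials = []
    for i in range(n_trials):
        if i < n_startup:
            lr, d = rng.uniform(0.001, 0.5), rng.randint(1, 12)
        else:
            (best_lr, best_d), _ = min(trials, key=lambda t: t[1])
            lr = min(0.5, max(0.001, rng.gauss(best_lr, 0.05)))
            d = min(12, max(1, best_d + rng.choice([-1, 0, 1])))
        trials.append(((lr, d), toy_loss(lr, d)))
    return min(trials, key=lambda t: t[1]), len(trials)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    print("grid:   ", grid_search([0.01, 0.1, 0.3], [2, 6, 10]))
    print("random: ", random_search(20, rng))
    print("guided: ", history_guided_search(20, rng))
```

Grid search costs one evaluation per grid cell, so its budget grows multiplicatively with every added hyperparameter, while the two sampling-based searches run for a fixed number of trials that you choose up front.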