Chatbots are becoming valuable tools for businesses, helping to improve efficiency and support employees. By sifting through troves of company data and documentation, LLMs can support workers by providing informed responses to a wide range of inquiries. For experienced employees, this can help minimize time spent on redundant, less productive tasks. For newer employees, this can not only shorten the time to a correct answer but also guide them through onboarding, assess their knowledge progression and even suggest areas for further learning and development as they come more fully up to speed.

For the foreseeable future, these capabilities appear poised to augment workers more than to replace them. And with looming challenges in worker availability in many developed economies, many organizations are rewiring their internal processes to take advantage of the support these tools can provide.

Scaling LLM-Based Chatbots Can Be Expensive

As businesses prepare to widely deploy chatbots into production, many are encountering a significant challenge: cost. High-performing models are often expensive to query, and many modern chatbot applications, known as agentic systems, may decompose individual user requests into multiple, more-targeted LLM queries in order to synthesize a response. This can make scaling across the enterprise prohibitively expensive for many applications.

But consider the breadth of questions being generated by a group of employees. How dissimilar is each question? When individual employees ask separate but similar questions, could the response to a previous inquiry be reused to address some or all of the needs of a later one? If we could reuse some of the responses, how many calls to the LLM could be avoided, and what might the cost implications be?
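To make the cost question concrete, here is a rough back-of-the-envelope sketch. The function name and every number in it (question volume, LLM calls per question, price per call, and the share of questions answered from a cache) are hypothetical placeholders, not measurements from any real deployment.

```python
def estimated_llm_cost(num_questions, calls_per_question, cost_per_call, hit_ratio=0.0):
    """Estimate LLM spend when a fraction of questions is served from a cache.

    hit_ratio is the share of questions answered without calling the LLM.
    All inputs are illustrative assumptions.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # Only questions that miss the cache trigger LLM calls.
    uncached_questions = num_questions * (1.0 - hit_ratio)
    return uncached_questions * calls_per_question * cost_per_call


# Hypothetical workload: 10,000 questions, 3 LLM calls each, $0.01 per call.
baseline = estimated_llm_cost(10_000, 3, 0.01)
with_cache = estimated_llm_cost(10_000, 3, 0.01, hit_ratio=0.4)
savings = baseline - with_cache
print(f"baseline: ${baseline:.2f}, with cache: ${with_cache:.2f}, saved: ${savings:.2f}")
```

Under these assumptions, the savings scale linearly with the hit ratio, and agentic systems that issue several LLM calls per question benefit proportionally more from each cache hit. The sketch ignores the cost of the cache lookup itself.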

Reusing Responses Could Avoid Unnecessary Cost

Consider a chatbot designed to answer questions about a company’s product features and capabilities. Employees might use this tool to get answers that support their various engagements with customers.

In a standard approach, the chatbot would send each query to an underlying LLM, generating nearly identical responses whenever different users ask essentially the same question. But if we programmed the chatbot application to first search a set of previously cached questions and responses for questions highly similar to the one being asked, and to reuse an existing response whenever one was found, we could avoid redundant calls to the LLM. This technique, known as semantic caching, is becoming widely adopted by enterprises because of the cost savings it offers.
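The lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the notebooks: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the linear scan stands in for a vector index, and the names `SemanticCache`, `add`, `lookup`, and the 0.8 threshold are all assumptions chosen for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Stores question/response pairs and returns a response for similar questions."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # hypothetical similarity cutoff
        self.entries = []           # list of (embedding, response)

    def add(self, question, response):
        self.entries.append((embed(question), response))

    def lookup(self, question):
        """Return the best cached response at or above the threshold, else None."""
        query = embed(question)
        best_score, best_response = 0.0, None
        for embedding, response in self.entries:
            score = cosine_similarity(query, embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.8)
cache.add("How do I create a vector search index?", "cached answer about index creation")

hit = cache.lookup("how do I create a vector search index")   # same words, reused
miss = cache.lookup("What is the pricing for model serving?")  # no overlap, falls through
```

The threshold controls the trade-off: a lower value reuses more responses but risks returning an answer to a question that only looks similar, while a higher value is safer but avoids fewer LLM calls.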

Building a Chatbot with Semantic Caching on Databricks

At Databricks, we operate a public-facing chatbot for answering questions about our products. This chatbot is exposed in our official documentation and often encounters similar user inquiries. In this blog, we evaluate Databricks’ chatbot in a series of notebooks to understand how semantic caching can enhance efficiency by reducing redundant computations. For demonstration purposes, we used a synthetically generated dataset, simulating the types of repetitive questions the chatbot might receive.

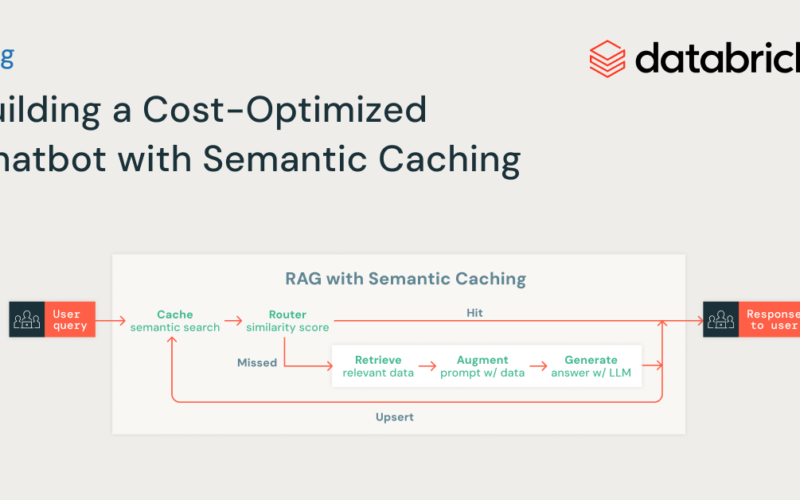

Databricks Mosaic AI provides all the necessary components to build a cost-optimized chatbot solution with semantic caching, including Vector Search for creating a semantic cache, MLflow and Unity Catalog for managing models and chains, and Model Serving for deploying and monitoring, as well as tracking usage and payloads. To implement semantic caching, we add a layer at the beginning of the standard Retrieval-Augmented Generation (RAG) chain. This layer checks whether a similar question already exists in the cache; if it does, the cached response is retrieved and served. If not, the system proceeds with executing the RAG chain. This simple yet powerful routing logic can be easily implemented using open source tools like LangChain or MLflow’s pyfunc.
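The routing logic can be illustrated with plain Python, independent of any framework. In this sketch the class `CachedChain` and its fields are hypothetical names; the cache lookup, cache store, and RAG chain are passed in as callables so that a vector-similarity lookup and a real chain could be substituted. Writing the fresh answer back to the cache on a miss is an assumption of this example, and the usage below substitutes a normalized exact-match dictionary for the similarity search to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CachedChain:
    """Checks a cache first and falls back to the RAG chain on a miss."""

    cache_lookup: Callable[[str], Optional[str]]  # returns a response or None
    cache_store: Callable[[str, str], None]       # saves (question, response)
    rag_chain: Callable[[str], str]               # the expensive path
    hits: int = 0
    misses: int = 0

    def invoke(self, question: str) -> str:
        cached = self.cache_lookup(question)
        if cached is not None:
            self.hits += 1
            return cached
        # Cache miss: run the full chain, then store the answer (an assumption).
        self.misses += 1
        answer = self.rag_chain(question)
        self.cache_store(question, answer)
        return answer

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Stand-ins for illustration only: a dict keyed on normalized text, and a fake chain.
store = {}


def normalize(question):
    return " ".join(question.lower().split())


chain = CachedChain(
    cache_lookup=lambda q: store.get(normalize(q)),
    cache_store=lambda q, a: store.__setitem__(normalize(q), a),
    rag_chain=lambda q: f"generated answer for: {q}",
)

first = chain.invoke("What is Vector Search?")      # miss, runs the chain
second = chain.invoke("what is   vector search?")   # hit, served from the cache
```

Keeping the hit and miss counters on the wrapper makes it straightforward to report a hit ratio alongside latency once the chain is deployed.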

In the notebooks, we demonstrate how to implement this solution on Databricks, highlighting how semantic caching can reduce both latency and costs compared to a standard RAG chain when tested with the same set of questions.

In addition to the efficiency improvement, we also show how semantic caching impacts the response quality using an LLM-as-a-judge approach in MLflow. While semantic caching improves efficiency, there is a slight drop in quality: evaluation results show that the standard RAG chain performed marginally better in metrics such as answer relevance. These small declines in quality are expected when retrieving responses from the cache. The key takeaway is to determine whether these quality differences are acceptable given the significant cost and latency reductions provided by the caching solution. Ultimately, the decision should be based on how these trade-offs affect the overall business value of your use case.

Why Databricks?

Databricks provides an optimal platform for building cost-optimized chatbots with caching capabilities. With Databricks Mosaic AI, users have native access to all necessary components, namely a vector database, agent development and evaluation frameworks, serving, and monitoring on a unified, highly governed platform. This ensures that key assets, including data, vector indexes, models, agents, and endpoints, are centrally managed under robust governance.

Databricks Mosaic AI also offers an open architecture, allowing users to experiment with various models for embeddings and generation. Leveraging the Databricks Mosaic AI Agent Framework and Evaluation tools, users can rapidly iterate on applications until they meet production-level standards. Once deployed, KPIs like hit ratios and latency can be monitored using MLflow traces, which are automatically logged in Inference Tables for easy tracking.

If you’re looking to implement semantic caching for your AI system on Databricks, have a look at this project that is designed to help you get started quickly and efficiently.
