How do we make LLMs handle long contexts without breaking the bank on computational costs? This is a pressing issue because tasks like document understanding and long-form text generation require processing more information than ever. Self-attention in transformers scales quadratically with sequence length: every token attends to every other token, so doubling the context roughly quadruples the cost of attention, and compute and memory requirements quickly become prohibitive.
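To make the quadratic growth concrete, here is a back-of-the-envelope sketch of the memory needed to materialize one layer's attention score matrices. The head count (32) and the 2-byte fp16 entries are illustrative assumptions, not figures from Activation Beacon. Fused kernels such as FlashAttention avoid storing this matrix at all, so treat this as a sketch of how the cost scales with the context length n, not as a measurement of any real model.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in a single head's n x n attention score matrix."""
    return seq_len * seq_len


def score_matrix_bytes(seq_len: int, num_heads: int = 32, bytes_per_entry: int = 2) -> int:
    """Bytes to materialize one layer's score matrices.

    Assumes naive attention that stores the full n x n matrix for every head,
    with 32 heads and fp16 (2-byte) entries as illustrative defaults.
    """
    return attention_score_entries(seq_len) * num_heads * bytes_per_entry


if __name__ == "__main__":
    # Print the per-layer memory for a few context lengths, each double the last.
    for n in (4_096, 8_192, 16_384, 32_768):
        gib = score_matrix_bytes(n) / 2**30
        print(f"{n:>6} tokens -> {gib:8.1f} GiB per layer")
```

Because the matrix has n × n entries, each doubling of the context quadruples the figure: under these assumptions, 1 GiB per layer at 4,096 tokens becomes 64 GiB at 32,768 tokens.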

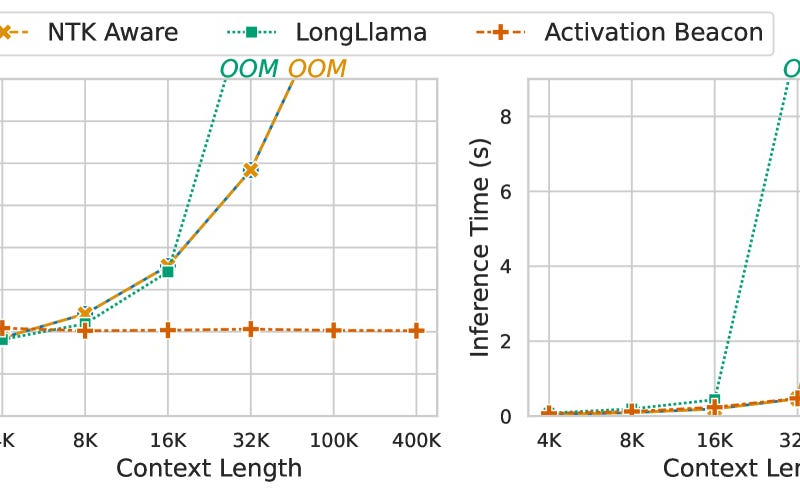

In this post, we will explore how “Activation Beacon,” a new method for long-context compression (trending on AImodels.fyi!), attempts to solve this problem. We’ll dig into what makes this approach effective, how it works, and the benefits it brings. I’ll also highlight some considerations to keep in mind when applying this method.
