RAG (Retrieval-Augmented Generation) is all the rage, and for good reason. Like so many others, I felt an air of excitement at the beginning of the internet: the Browser Wars, Java vs. Mocha. I felt it again in 2007, when the iPhone led a paradigm shift in how, where, and when we consume media. I feel it again now.

In the rapidly advancing field of AI, Retrieval-Augmented Generation (RAG) has become a crucial technique, enhancing the capabilities of large language models by integrating external knowledge sources. By leveraging RAG, you can build chatbots that generate responses informed by real-time data, ensuring both coherence and relevance. This guide will provide you with a step-by-step walkthrough of integrating the eBay API with LlamaIndex to develop your own RAG-powered chatbot.

Why RAG?

RAG enhances the capabilities of your chatbot by allowing it to access and retrieve information from external sources in real time. Instead of relying solely on pre-trained data, your chatbot can now query external APIs or databases to obtain the most relevant and up-to-date information. This ensures that the responses generated are not only accurate but also contextually relevant, reflecting the latest available data. It’s like moving from managing a static collection of DVDs or Blu-Rays to streaming on-demand content, where the latest information is always at your fingertips.
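To make the idea concrete, here is a minimal sketch of the "augmentation" step: retrieved snippets are placed into the prompt next to the user's question. The `RetrievedSnippet` shape, the `buildAugmentedPrompt` name, and the sample listing are all made up for illustration; LlamaIndex handles this step for you in the real app.

```typescript
// A retrieved snippet; this shape is hypothetical, chosen for illustration.
interface RetrievedSnippet {
  source: string;
  text: string;
}

// Combine the user's question with retrieved snippets into a single prompt.
function buildAugmentedPrompt(question: string, snippets: RetrievedSnippet[]): string {
  const context =
    snippets.length > 0
      ? snippets.map((s, i) => `[${i + 1}] (${s.source}) ${s.text}`).join("\n")
      : "(no external data was retrieved)";
  return [
    "Answer the question using only the context below.",
    "Context:",
    context,
    `Question: ${question}`,
  ].join("\n");
}

// Made-up sample data, not a real listing.
const prompt = buildAugmentedPrompt("What does a Charizard card sell for?", [
  { source: "eBay", text: "Charizard card listed at 350.00 USD" },
]);
console.log(prompt);
```

The model never "knows" the live price; it only sees whatever text the retrieval step placed in the context.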

Step 1: Setting Up LlamaIndex

To kick off, you’ll need to set up LlamaIndex, a powerful tool that simplifies the integration of external data sources into your chatbot.

- Installation: Start by running the following command in your terminal:

npx create-llama@latest

This command scaffolds a Next.js project and walks you through the initial setup, including key RAG and LLM concepts like document scraping and multi-agent systems. It also provides sample PDFs to work from. You'll want to remove them if you have your own idea in mind or expect to scrape the web, and want to host your app on a platform like Vercel or Cloudflare.

- Configuration: Once the setup is complete, navigate to the `llama.config.js` file. Here, you’ll define the sources your chatbot will retrieve information from. For our purposes, we’ll be focusing on integrating the eBay API.

Step 2: Integrating the eBay API

Now, let’s connect your chatbot to the vast repository of data available through the eBay API.

- OAuth Authentication: eBay’s API requires OAuth for secure access. You’ll first need to generate an OAuth token. Here’s a quick function to handle this:

const eBayAuthToken = require("ebay-oauth-nodejs-client");

// Credentials are read from environment variables.
const ebayClientId = process.env.EBAY_API_KEY || "";
const ebayClientSecret = process.env.EBAY_CLIENT_SECRET || "";
const redirectUri = process.env.EBAY_REDIRECT_URI || ""; // Optional unless you're doing user consent flow

const authToken = new eBayAuthToken({
  clientId: ebayClientId,
  clientSecret: ebayClientSecret,
  redirectUri: redirectUri, // Optional unless you're doing user consent flow
});

// Cache the token in memory so a new one isn't requested on every call.
let cachedToken: string | null = null;
let tokenExpiration: number | null = null;

export async function getOAuthToken(): Promise<string | null> {
  // Reuse the cached token while it is still valid.
  if (cachedToken && tokenExpiration && Date.now() < tokenExpiration) {
    return cachedToken;
  }
  try {
    const response = await authToken.getApplicationToken("PRODUCTION"); // or 'SANDBOX'
    // The response may arrive as a JSON string, so parse it if needed.
    const tokenData = typeof response === "string" ? JSON.parse(response) : response;
    cachedToken = tokenData.access_token;
    // expires_in is in seconds; expire the cache 60 seconds early as a safety margin.
    tokenExpiration = Date.now() + tokenData.expires_in * 1000 - 60000;
    return cachedToken;
  } catch (error) {
    console.error("Error obtaining OAuth token:", error);
    throw new Error("Failed to obtain OAuth token");
  }
}

Set the `EBAY_API_KEY` and `EBAY_CLIENT_SECRET` environment variables to your actual credentials, plus `EBAY_REDIRECT_URI` if you use the user consent flow.
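The caching logic in that function can be separated from the eBay client, which makes it easier to test. The following is a sketch under assumptions: `createTokenCache`, `TokenResponse`, and the injected `now` clock are hypothetical names introduced here and are not part of `ebay-oauth-nodejs-client`.

```typescript
// Shape of a token response; field names mirror the ones read in the function above.
interface TokenResponse {
  access_token: string;
  expires_in: number; // lifetime in seconds
}

// Wrap any token-fetching function with an in-memory cache.
// fetchToken and now are injected so the logic can run without network access.
function createTokenCache(
  fetchToken: () => Promise<TokenResponse>,
  now: () => number = Date.now,
  safetyMarginMs: number = 60000,
): () => Promise<string> {
  let cached: string | null = null;
  let expiresAt = 0;

  return async function getToken(): Promise<string> {
    if (cached !== null && now() < expiresAt) {
      return cached; // still valid, skip the network call
    }
    const data = await fetchToken();
    const token = data.access_token;
    cached = token;
    // Treat the token as expired slightly early so it never lapses mid-request.
    expiresAt = now() + data.expires_in * 1000 - safetyMarginMs;
    return token;
  };
}
```

In the app, `fetchToken` would be a small wrapper around `authToken.getApplicationToken(...)` that parses the response. In a test, it can be a stub that counts how often it is called.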

- Fetching Data: With your token in hand, you can now query the eBay API to fetch relevant data. Here’s how you can fetch the price of a specific item:

import axios from 'axios';
import { getOAuthToken } from './getOAuthToken'; // hypothetical path; point it at wherever you saved the function above

export async function fetchCardPrices(searchTerm: string) {
  const token = await getOAuthToken();
  if (!token) throw new Error("No OAuth token available");

  const response = await axios.get(`https://api.ebay.com/buy/browse/v1/item_summary/search?q=${encodeURIComponent(searchTerm)}`, {
    headers: {
      'Authorization': `Bearer ${token}`,
    },
  });

  // itemSummaries may be missing when nothing matches, so fall back to an empty array.
  const items: any[] = response.data.itemSummaries ?? [];
  return items.map(item => ({
    title: item.title,
    price: item.price?.value,
    currency: item.price?.currency,
  }));
}

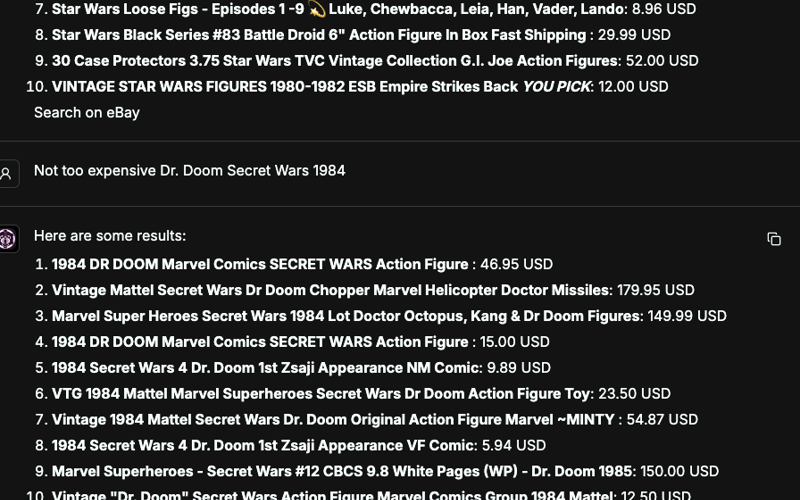

This function returns an array of items with their titles and prices, which your chatbot can use to provide users with up-to-date information.
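Before handing those items to the chatbot, it can help to condense them into one sentence. Here is a rough sketch, assuming prices arrive as strings such as "19.99" and that all listings share a single currency. `PricedItem` and `summarizePrices` are hypothetical names, and the sample data in the usage note is made up.

```typescript
// Mirrors the objects returned by fetchCardPrices above.
interface PricedItem {
  title: string;
  price: string; // assumed to be a numeric string, e.g. "19.99"
  currency: string;
}

// Turn a list of listings into a short sentence the chatbot can use as context.
function summarizePrices(items: PricedItem[]): string {
  // Keep only listings whose price parses to a finite number.
  const priced = items.filter((item) => Number.isFinite(Number.parseFloat(item.price)));
  if (priced.length === 0) {
    return "No priced listings were found.";
  }
  const values = priced.map((item) => Number.parseFloat(item.price));
  const currency = priced[0].currency; // assumes a single currency across listings
  const min = Math.min(...values);
  const max = Math.max(...values);
  const average = values.reduce((sum, value) => sum + value, 0) / values.length;
  return (
    `Found ${values.length} listings between ${min.toFixed(2)} and ` +
    `${max.toFixed(2)} ${currency} (average ${average.toFixed(2)} ${currency}).`
  );
}
```

For example, two made-up listings priced "10.00" and "20.50" in USD yield "Found 2 listings between 10.00 and 20.50 USD (average 15.25 USD)."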

Step 3: Querying and Responding

With LlamaIndex and eBay integrated, it’s time to build the logic that allows your chatbot to query these sources and generate informed responses.

- Extracting Search Terms: Before querying the eBay API, you need to extract relevant search terms from the user’s input. Here’s a helper function to do that:

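function extractSearchTerm(query: string): string {
  // Simple keyword extraction: drop everything up to and including "price of",
  // then strip trailing punctuation such as "?" so it doesn't end up in the search.
  return query.replace(/.*price of/i, '').trim().replace(/[?.!]+$/, '').trim();
}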
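Users rarely phrase things the same way twice, so you may want something a little more forgiving than a single "price of" pattern. The variant below is a hypothetical sketch, not a complete solution: the phrase list is illustrative and far from exhaustive, and `extractSearchTermLoose` is a name made up for this example.

```typescript
// Phrases that commonly precede the item name; an illustrative, non-exhaustive list.
const PRICE_PREFIXES: RegExp[] = [
  /.*\bprice (?:of|for)\b/i,
  /.*\bhow much (?:is|are)\b/i,
  /.*\bcost of\b/i,
];

function extractSearchTermLoose(query: string): string {
  let term = query;
  // Remove the first matching lead-in phrase, if any.
  for (const prefix of PRICE_PREFIXES) {
    if (prefix.test(term)) {
      term = term.replace(prefix, "");
      break;
    }
  }
  // Drop a leading article and any trailing punctuation.
  return term
    .trim()
    .replace(/^(?:a|an|the)\s+/i, "")
    .replace(/[?.!]+$/, "")
    .trim();
}

console.log(extractSearchTermLoose("What is the price of a Charizard card?")); // Charizard card
console.log(extractSearchTermLoose("How much is the Pikachu promo?")); // Pikachu promo
```

A query with no recognizable lead-in is passed through unchanged apart from the trimming, so a bare item name still works as a search term.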

- Handling API Responses: Finally, you can tie everything together by creating a route in your Next.js app to handle incoming requests, query eBay, and return the results:

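import {NextApiRequest, NextApiResponse} from 'next';
import {fetchCardPrices} from './utils/fetchCardPrices';
// extractSearchTerm is the helper shown above; define it in this file or import it.

export default async function handler(req: NextApiRequest, res: NextApiResponse) {
  const query = req.query.q;
  // Reject requests that don't carry a usable ?q= parameter.
  if (typeof query !== 'string' || !query.trim()) {
    return res.status(400).json({error: 'Missing query parameter "q"'});
  }
  try {
    const prices = await fetchCardPrices(extractSearchTerm(query));
    return res.status(200).json(prices);
  } catch (error) {
    return res.status(500).json({error: 'Failed to fetch prices from eBay'});
  }
}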

Challenges and Solutions

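As you build your RAG chatbot, you might encounter some common pitfalls. For instance, I use GPT-4o and Claude 3.5 Sonnet as coding interns. While setting up LlamaIndex in a Python app, I asked GPT to help me debug, and the code snippets it suggested were outdated. Fast-moving libraries tend to outpace a model's training data, so check generated code against the current documentation before trusting it.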

Conclusion

By following these steps, you’ve empowered your chatbot to fetch and utilize real-time data from eBay, enhancing its usefulness and relevance to your users. RAG is a powerful technique that unlocks a wide range of possibilities, and with the right tools and guidance, you can leverage it to create truly intelligent applications.

Deploying to Vercel: Challenges with Edge Functions

When it comes to deploying your application on Vercel, it’s important to be aware of some limitations, particularly when using edge functions. Vercel’s edge functions are designed for low-latency responses, but they do come with some constraints:

- Unsupported Modules on Edge Functions: Certain Node.js modules, like `sharp` and `onnxruntime-node`, are not supported in Vercel Edge Functions due to the limitations of the edge runtime environment. If your application relies on these modules, you’ll need to ensure they are only used in serverless functions or consider replacing them with alternative solutions that are compatible with the edge environment.

- Module Not Found Errors: You might encounter “Module not found” errors during the build process, especially when modules are not correctly installed or are being used in the wrong environment. To resolve these issues, double-check that all necessary modules are installed and that they are configured to run in the appropriate environment (Edge vs. Serverless). It’s crucial to separate the logic that requires these modules from the parts of your app that run on the edge.

While Vercel provides a powerful platform for deploying your applications with minimal overhead, being mindful of these challenges will save you from headaches during deployment and ensure your app runs smoothly in production.
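One way to keep a route off the edge runtime is to state the runtime explicitly in the route file. The fragment below is a sketch for a Pages Router API route and assumes a Next.js version that accepts the `runtime` key in the exported `config`; the file path in the comment is hypothetical.

```typescript
// Pages Router API route (the path, e.g. pages/api/prices.ts, is hypothetical).
// "nodejs" is the default runtime for API routes; stating it makes the intent explicit.
// Setting "edge" instead would opt this route in to the Edge runtime.
export const config = {
  runtime: "nodejs",
};
```

Routes that import Node-only modules stay on the Node.js runtime, while lightweight routes can opt in to the edge individually.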
