To hear tech execs tell it, AI chatbots are poised to become our arbiters of facts and purveyors of the news. But this whirlwind week in American politics shows they’ve got a long way to go.

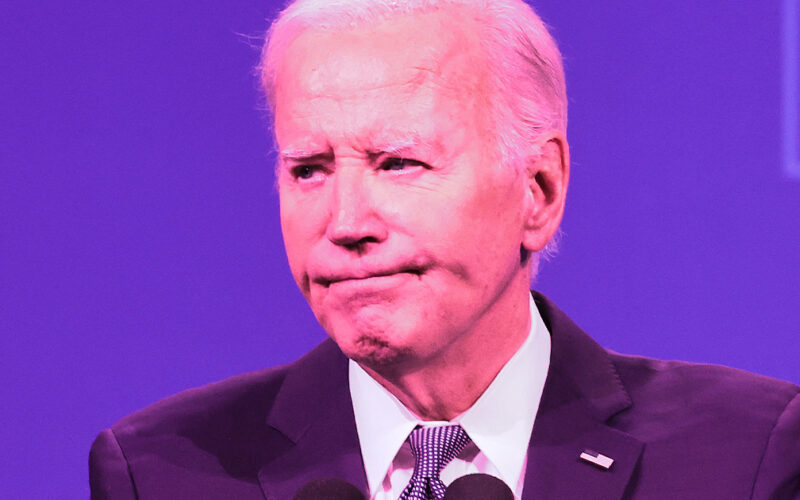

In a span of roughly nine days that saw Donald Trump survive a botched assassination attempt and President Joe Biden abruptly drop out of the presidential race, AI chatbots weren’t up to date, didn’t accurately report current events, and sometimes even refused to answer questions about the news, The Washington Post reports.

These shortcomings add weight to concerns about AI’s propensity for spreading misinformation — but also raise broader questions about the technology’s usefulness in a rapidly changing information environment.

In its investigation, WaPo found that popular chatbots were befuddled when asked about the shooting at a Trump rally in Pennsylvania on July 13.

Asked about it five hours after the shooting, when the basic facts were already well established by the news media, OpenAI’s ChatGPT said rumors about the assassination attempt were misinformation, while Meta’s Meta AI said it didn’t have anything credible about such an attempt.

They also “struggled” to contend with other big presidential news items that week, like Trump naming Ohio Senator JD Vance as his running mate and Biden testing positive for COVID. When asked about the Vance pick, Meta AI initially returned accurate information, then quickly replaced it with a “thanks for asking” message and a link to voting information, per WaPo.

The newspaper noted that some chatbots, like Microsoft Copilot, performed better at accurately reporting breaking news. But even this standout performer was stumped when asked who was running for president on Sunday, the day that Biden, after several embattled weeks, announced he was dropping out of the race and backing Vice President Kamala Harris for the Oval Office.

“Looks like I can’t respond to this topic,” Copilot said, per WaPo. Direct questions about Biden’s withdrawal, however, were answered correctly “almost immediately.”

ChatGPT similarly floundered when asked about the Biden dropout, claiming that he was still running an hour after the news broke.

Microsoft, Meta, and Google have stated their intention to put restrictions on their chatbots for some political and election-related queries, WaPo notes. When asked about these topics, Google’s Gemini, for example, simply says that “I can’t help with responses on elections and political figures right now.”

These cautious stances can be seen as a tacit admission that AI chatbots, and their dodgy outputs, are huge potential liabilities.

Granted, muzzling these chatbots to ensure they don’t babble fake news in the wake of an election is the safe thing to do. But if AIs can’t quickly — not to mention accurately — answer people’s most pressing questions, then their usefulness seems severely limited and vastly overblown.

“They’re incredibly good at communication,” Jevin West, a professor and co-founder of the Center for an Informed Public at the University of Washington, told WaPo. “They’re not being optimized necessarily for truth.”

