This post is co-written with MagellanTV and Mission Cloud.

Video dubbing, or content localization, is the process of replacing the original spoken language in a video with another language while synchronizing audio and video. Video dubbing has emerged as a key tool in breaking down linguistic barriers, enhancing viewer engagement, and expanding market reach. However, traditional dubbing methods are costly (about $20 per minute with human review effort) and time consuming, making them a common challenge for companies in the Media & Entertainment (M&E) industry. Video auto-dubbing that uses the power of generative artificial intelligence (generative AI) offers creators an affordable and efficient solution.

This post shows you a cost-saving solution for video auto-dubbing. We use Amazon Translate for initial translation of video captions and use Amazon Bedrock for post-editing to further improve the translation quality. Amazon Translate is a neural machine translation service that delivers fast, high-quality, and affordable language translation.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to help you build generative AI applications with security, privacy, and responsible AI.

MagellanTV, a leading streaming platform for documentaries, wants to broaden its global presence through content internationalization. Faced with manual dubbing challenges and prohibitive costs, MagellanTV sought out AWS Premier Tier Partner Mission Cloud for an innovative solution.

Mission Cloud’s solution distinguishes itself with idiomatic detection and automatic replacement, seamless automatic time scaling, and flexible batch processing capabilities with increased efficiency and scalability.

Solution overview

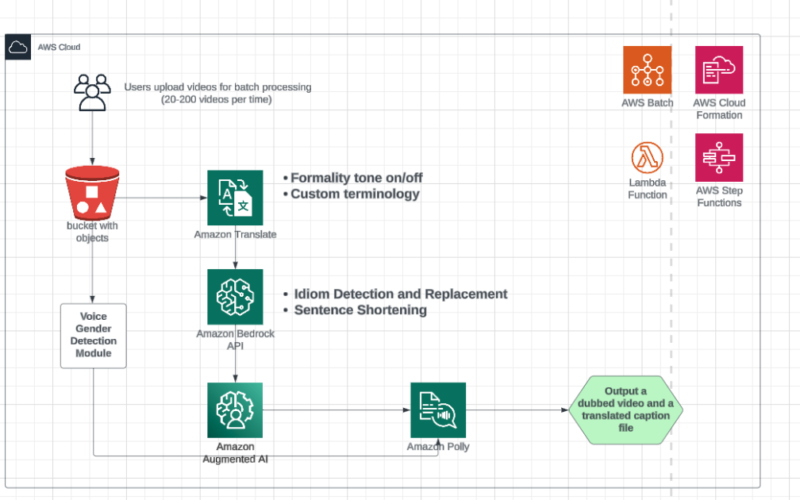

The following diagram illustrates the solution architecture. The inputs of the solution are specified by the user, including the folder path containing the original video and caption file, target language, and toggles for idiom detector and formality tone. You can specify these inputs in an Excel template and upload the Excel file to a designated Amazon Simple Storage Service (Amazon S3) bucket. This will launch the whole pipeline. The final outputs are a dubbed video file and a translated caption file.

We use Amazon Translate to translate the video caption, and Amazon Bedrock to enhance the translation quality and enable automatic time scaling to synchronize audio and video. We use Amazon Augmented AI for editors to review the content, which is then sent to Amazon Polly to generate synthetic voices for the video. To assign a gender expression that matches the speaker, we developed a model to predict the gender expression of the speaker.
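
To make the voice-selection step concrete, here is a minimal sketch of how a predicted gender expression could be mapped to an Amazon Polly voice. The `VOICES` table, the `pick_voice` and `synthesize` helpers, and the label values `"female"` and `"male"` are assumptions for illustration and are not taken from the pipeline itself.

```python
# Hypothetical mapping from (language, predicted gender expression) to a Polly voice.
VOICES = {
    ("de-DE", "female"): "Vicki",
    ("de-DE", "male"): "Hans",
    ("es-ES", "female"): "Lucia",
    ("es-ES", "male"): "Enrique",
}


def pick_voice(language_code: str, gender_expression: str) -> str:
    """Return the Polly voice ID for a language and predicted gender expression."""
    return VOICES[(language_code, gender_expression)]


def synthesize(text: str, language_code: str, gender_expression: str) -> bytes:
    """Generate MP3 audio for one caption line with Amazon Polly."""
    # Imported here so that pick_voice can be used without the AWS SDK installed.
    import boto3

    polly = boto3.client("polly")
    response = polly.synthesize_speech(
        Text=text,
        OutputFormat="mp3",
        VoiceId=pick_voice(language_code, gender_expression),
    )
    return response["AudioStream"].read()
```

Keeping the lookup separate from the service call makes it straightforward to add languages or swap voices without touching the synthesis code.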

In the backend, AWS Step Functions orchestrates the preceding steps as a pipeline. Each step is run on AWS Lambda or AWS Batch. Because the pipeline is defined with the infrastructure as code (IaC) tool AWS CloudFormation, it can be reused to dub videos into new languages.
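
One way to connect the Excel upload to the Step Functions pipeline is an AWS Lambda function that is triggered by the S3 upload and starts a state machine execution. The following is a minimal sketch under that assumption. The `build_execution_input` helper, the shape of the execution input, and the `STATE_MACHINE_ARN` environment variable are hypothetical names, not part of the solution as described.

```python
import json
import os
from urllib.parse import unquote_plus


def build_execution_input(s3_event: dict) -> dict:
    """Extract the bucket and key of the uploaded file from an S3 event notification."""
    record = s3_event["Records"][0]
    return {
        "bucket": record["s3"]["bucket"]["name"],
        # Object keys arrive URL-encoded in S3 event notifications.
        "key": unquote_plus(record["s3"]["object"]["key"]),
    }


def lambda_handler(event, context):
    """Start one pipeline execution for the uploaded input file."""
    # Imported here so that the helper above can be tested without the AWS SDK.
    import boto3

    sfn = boto3.client("stepfunctions")
    response = sfn.start_execution(
        stateMachineArn=os.environ["STATE_MACHINE_ARN"],  # hypothetical variable name
        input=json.dumps(build_execution_input(event)),
    )
    return {"executionArn": response["executionArn"]}
```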

In the following sections, you will learn how to use the unique features of Amazon Translate for setting formality tone and for custom terminology. You will also learn how to use Amazon Bedrock to further improve the quality of video dubbing.

Why choose Amazon Translate?

We chose Amazon Translate to translate video captions based on three factors.

- Amazon Translate supports over 75 languages. While the landscape of large language models (LLMs) has continuously evolved in the past year and continues to change, many of the trending LLMs support a smaller set of languages.

- Our translation professional rigorously evaluated Amazon Translate in our review process and affirmed its commendable translation accuracy. Welocalize benchmarks the performance of using LLMs and machine translations and recommends using LLMs as a post-editing tool.

- Amazon Translate has various unique benefits. For example, you can add custom terminology glossaries, while for LLMs, you might need fine-tuning that can be labor-intensive and costly.

Use Amazon Translate for custom terminology

Amazon Translate allows you to input a custom terminology dictionary, ensuring translations reflect the organization’s vocabulary or specialized terminology. We use the custom terminology dictionary to compile frequently used terms within video transcription scripts.

Here’s an example. In a documentary video, the caption file would typically display “(speaking in foreign language)” on the screen as the caption when the interviewee speaks in a foreign language. The phrase “(speaking in foreign language)” is not a grammatically complete English sentence because it lacks a subject, yet it’s commonly accepted as an English caption display. When the caption is translated into German, the translation also lacks a subject, which can be confusing to German audiences.

Because the phrase “(speaking in foreign language)” is commonly seen in video transcripts, we added this term to the custom terminology CSV file translation_custom_terminology_de.csv with the vetted translation and provided it in the Amazon Translate job. With the custom terminology applied, the translation output is as intended.
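
The following sketch shows how a custom terminology file could be built and applied with the AWS SDK for Python (Boto3). It assumes the real-time `translate_text` API rather than a batch translation job, and the resource name in `TERMINOLOGY_NAME` and both helper functions are hypothetical. The vetted German translation is not reproduced here, so the usage note uses a placeholder.

```python
import csv
import io

TERMINOLOGY_NAME = "translation_custom_terminology_de"  # hypothetical resource name


def build_terminology_csv(entries: dict, source_lang: str = "en", target_lang: str = "de") -> bytes:
    """Serialize {source term: vetted translation} pairs as a terminology CSV.

    The first row lists the language codes, source language first.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([source_lang, target_lang])
    for source_term, target_term in entries.items():
        writer.writerow([source_term, target_term])
    return buffer.getvalue().encode("utf-8")


def translate_with_terminology(text: str, terminology_csv: bytes) -> str:
    """Upload the terminology, then translate English text to German with it."""
    # Imported here so that the CSV helper can be used without the AWS SDK.
    import boto3

    translate = boto3.client("translate")
    translate.import_terminology(
        Name=TERMINOLOGY_NAME,
        MergeStrategy="OVERWRITE",
        TerminologyData={"File": terminology_csv, "Format": "CSV"},
    )
    response = translate.translate_text(
        Text=text,
        SourceLanguageCode="en",
        TargetLanguageCode="de",
        TerminologyNames=[TERMINOLOGY_NAME],
    )
    return response["TranslatedText"]
```

For example, `build_terminology_csv({"(speaking in foreign language)": "<VETTED_GERMAN_TRANSLATION>"})` produces a two-line CSV in which the placeholder stands in for the translation that a professional translator has approved.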

Set formality tone in Amazon Translate

Some documentary genres tend to be more formal than others. Amazon Translate allows you to define the desired level of formality for translations to supported target languages. By using the default setting (Informal) of Amazon Translate, the translation output in German for the phrase, “[Speaker 1] Let me show you something,” is informal, according to a professional translator.

By adding the Formal setting, the output translation has a formal tone, which fits the documentary’s genre as intended.
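
In Boto3, the formality level is passed through the `Settings` parameter of `translate_text`. The following is a minimal sketch, with `build_translate_request` and `translate_caption` as hypothetical helper names. When the toggle is off, the sketch omits the setting and relies on the service default.

```python
def build_translate_request(text: str, target_lang: str, formal: bool) -> dict:
    """Build the keyword arguments for translate_text, adding formality on request."""
    request = {
        "Text": text,
        "SourceLanguageCode": "en",
        "TargetLanguageCode": target_lang,
    }
    if formal:
        request["Settings"] = {"Formality": "FORMAL"}
    return request


def translate_caption(text: str, target_lang: str = "de", formal: bool = True) -> str:
    """Translate one caption line, optionally asking for a formal tone."""
    # Imported here so that the request builder can be used without the AWS SDK.
    import boto3

    translate = boto3.client("translate")
    response = translate.translate_text(**build_translate_request(text, target_lang, formal))
    return response["TranslatedText"]
```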

Use Amazon Bedrock for post-editing

In this section, we use Amazon Bedrock to improve the quality of video captions after we obtain the initial translation from Amazon Translate.

Idiom detection and replacement

Idiom detection and replacement is vital in dubbing English videos to accurately convey cultural nuances. Adapting idioms prevents misunderstandings, enhances engagement, preserves humor and emotion, and ultimately improves the global viewing experience. Hence, we developed an idiom detection function using Amazon Bedrock to resolve this issue.

You can turn the idiom detector on or off by specifying the inputs to the pipeline. For example, for science genres that have fewer idioms, you can turn the idiom detector off. For genres that have more casual conversations, you can turn it on. For a 25-minute video, the total processing time is about 1.5 hours, of which about 1 hour is spent on video preprocessing and video composing. Turning the idiom detector on adds only about 5 minutes to the total processing time.

We have developed a function bedrock_api_idiom to detect and replace idioms using Amazon Bedrock. The function first uses Amazon Bedrock LLMs to detect idioms in the text and then replaces them. For example, Amazon Bedrock successfully detects the idiom in the input text “well, I hustle” and replaces it with “I work hard,” which can be translated correctly into Spanish by using Amazon Translate.
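
The following sketch illustrates the general idea with the Amazon Bedrock Converse API. It is not the bedrock_api_idiom function itself: the prompt wording, the model ID, the single-call design, and the `replace_idioms` and `invoke_bedrock` names are all assumptions made for illustration.

```python
from typing import Callable

MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"  # assumption: any Bedrock text model

IDIOM_PROMPT = (
    "Rewrite the following caption so that every idiom is replaced by a plain, "
    "literal phrase with the same meaning. Keep everything else unchanged and "
    "return only the rewritten caption.\n\nCaption: {caption}"
)


def invoke_bedrock(prompt: str) -> str:
    """Send one prompt to Amazon Bedrock and return the model's text reply."""
    # Imported here so that replace_idioms can be tested without the AWS SDK.
    import boto3

    client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 256, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]


def replace_idioms(
    caption: str,
    enabled: bool = True,
    invoke: Callable[[str], str] = invoke_bedrock,
) -> str:
    """Return the caption with idioms replaced, or unchanged if the toggle is off."""
    if not enabled:
        return caption
    return invoke(IDIOM_PROMPT.format(caption=caption)).strip()
```

Passing the model call in as the `invoke` argument keeps the on/off toggle and prompt construction testable without calling the service.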

Sentence shortening

Time scaling during video dubbing can be handled with third-party dubbing tools, but it is costly when done manually. In our pipeline, we used Amazon Bedrock to develop a sentence shortening algorithm for automatic time scaling.

For example, a typical caption file consists of a section number, timestamp, and the sentence. The following is an example of an English sentence before shortening.

Original sentence:

A large portion of the solar energy that reaches our planet is reflected back into space or absorbed by dust and clouds.

Here’s the shortened sentence using the sentence shortening algorithm. Using Amazon Bedrock, we can significantly improve the video-dubbing performance and reduce the human review effort, resulting in cost saving.

Shortened sentence:

A large part of solar energy is reflected into space or absorbed by dust and clouds.
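
To show where shortening could fit into time scaling, the following sketch checks whether a sentence fits its caption time slot and asks for a shorter version only when it does not. It assumes SRT-style timestamps (`HH:MM:SS,mmm --> HH:MM:SS,mmm`) and a fixed speaking-rate budget in `CHARS_PER_SECOND`. Both are illustrative assumptions, and the `shorten` callable stands in for a call to Amazon Bedrock.

```python
import re
from typing import Callable

TIMESTAMP = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3})")
CHARS_PER_SECOND = 15.0  # hypothetical speaking-rate budget, tune per language and voice


def to_seconds(stamp: str) -> float:
    """Convert an SRT timestamp such as 00:01:02,500 to seconds."""
    match = TIMESTAMP.fullmatch(stamp.strip())
    if match is None:
        raise ValueError(f"not an SRT timestamp: {stamp!r}")
    hours, minutes, seconds, millis = map(int, match.groups())
    return hours * 3600 + minutes * 60 + seconds + millis / 1000


def slot_seconds(timing_line: str) -> float:
    """Return the duration of a caption slot given its 'start --> end' line."""
    start, end = timing_line.split("-->")
    return to_seconds(end) - to_seconds(start)


def shorten_if_needed(
    sentence: str,
    timing_line: str,
    shorten: Callable[[str, int], str],
) -> str:
    """Return the sentence unchanged if it fits the slot, else a shortened version."""
    budget = int(CHARS_PER_SECOND * slot_seconds(timing_line))
    if len(sentence) <= budget:
        return sentence
    # The callable receives the sentence and the character budget to aim for.
    return shorten(sentence, budget)
```

With a hypothetical six-second slot, the budget is 90 characters, so the original sentence above (120 characters) would be sent for shortening, while the shortened sentence (84 characters) would pass through unchanged.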

Conclusion

This new and constantly developing pipeline has been a revolutionary step for MagellanTV because it efficiently resolved some challenges they were facing that are common within Media & Entertainment companies in general. The unique localization pipeline developed by Mission Cloud creates a new frontier of opportunities to distribute content across the world while saving on costs. Using generative AI in tandem with brilliant solutions for idiom detection and resolution, sentence length shortening, and custom terminology and tone results in a truly special pipeline bespoke to MagellanTV’s growing needs and ambitions.

If you want to learn more about this use case or have a consultative session with the Mission team to review your specific generative AI use case, feel free to request one through AWS Marketplace.

About the Authors

Na Yu is a Lead GenAI Solutions Architect at Mission Cloud, specializing in developing ML, MLOps, and GenAI solutions in AWS Cloud and working closely with customers. She received her Ph.D. in Mechanical Engineering from the University of Notre Dame.

Max Goff is a data scientist/data engineer with over 30 years of software development experience. A published author, blogger, and music producer, he sometimes dreams in A.I.

Marco Mercado is a Sr. Cloud Engineer specializing in developing cloud native solutions and automation. He holds multiple AWS Certifications and has extensive experience working with high-tier AWS partners. Marco excels at leveraging cloud technologies to drive innovation and efficiency in various projects.

Yaoqi Zhang is a Senior Big Data Engineer at Mission Cloud. She specializes in leveraging AI and ML to drive innovation and develop solutions on AWS. Before Mission Cloud, she worked as an ML and software engineer at Amazon for six years, specializing in recommender systems for Amazon fashion shopping and NLP for Alexa. She received her Master of Science Degree in Electrical Engineering from Boston University.

Adrian Martin is a Big Data/Machine Learning Lead Engineer at Mission Cloud. He has extensive experience in English/Spanish interpretation and translation.

Ryan Ries holds over 15 years of leadership experience in data and engineering, over 20 years of experience working with AI and 5+ years helping customers build their AWS data infrastructure and AI models. After earning his Ph.D. in Biophysical Chemistry at UCLA and Caltech, Dr. Ries has helped develop cutting-edge data solutions for the U.S. Department of Defense and a myriad of Fortune 500 companies.

Andrew Federowicz is the IT and Product Lead Director for Magellan VoiceWorks at MagellanTV. With a decade of experience working in cloud systems and IT in addition to a degree in mechanical engineering, Andrew designs, builds, deploys, and scales inventive solutions to unique problems. Before Magellan VoiceWorks, Andrew architected and built the AWS infrastructure for MagellanTV’s 24/7 globally available streaming app. In his free time, Andrew enjoys sim racing and horology.

Qiong Zhang, PhD, is a Sr. Partner Solutions Architect at AWS, specializing in AI/ML. Her current areas of interest include federated learning, distributed training, and generative AI. She holds 30+ patents and has co-authored 100+ journal/conference papers. She is also the recipient of the Best Paper Award at IEEE NetSoft 2016, IEEE ICC 2011, ONDM 2010, and IEEE GLOBECOM 2005.

Cristian Torres is a Sr. Partner Solutions Architect at AWS. He has 10 years of experience working in technology performing several roles such as: Support Engineer, Presales Engineer, Sales Specialist and Solutions Architect. He works as a generalist with AWS services focusing on Migrations to help strategic AWS Partners develop successfully from a technical and business perspective.
