04

Jun

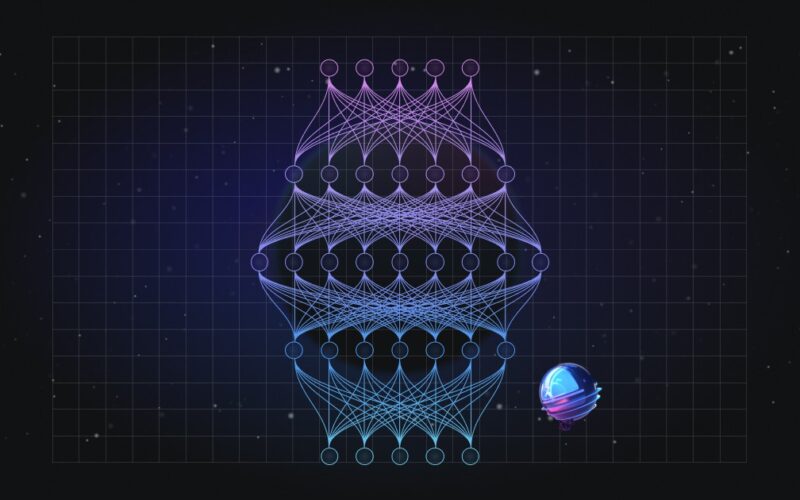

[Submitted on 31 May 2024]

How In-Context Learning Emerges from Training on Unstructured Data: On the Role of Co-Occurrence, Positional Information, and Noise Structures

Kevin Christian Wibisono and 1 other author

Abstract: Large language models (LLMs) like transformers have impressive in-context learning (ICL) capabilities; they can generate predictions for new queries based on input-output sequences in prompts without parameter updates. While many theories have attempted to explain ICL, they often focus on structured training data similar to ICL tasks, such as regression. In practice, however, these models are trained…