20 Nov

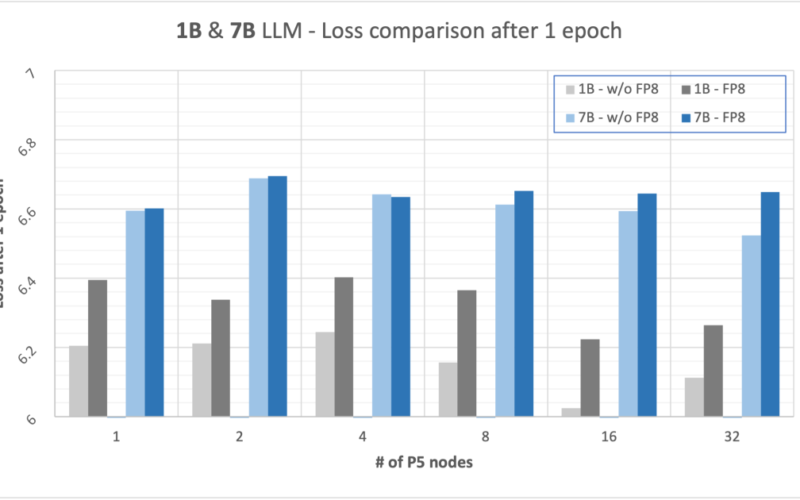

Large language models (LLMs) are AI systems trained on vast amounts of text data, enabling them to understand, generate, and reason with natural language in highly capable and flexible ways. LLM training has seen remarkable advances in recent years, with organizations pushing the boundaries of what’s possible in terms of model size, performance, and efficiency. In this post, we explore how FP8 optimization can significantly speed up large model training on Amazon SageMaker P5 instances.

LLM training using SageMaker P5

In 2023, SageMaker announced P5 instances, which support up to eight of the latest NVIDIA H100 Tensor Core GPUs. Equipped…