23

May

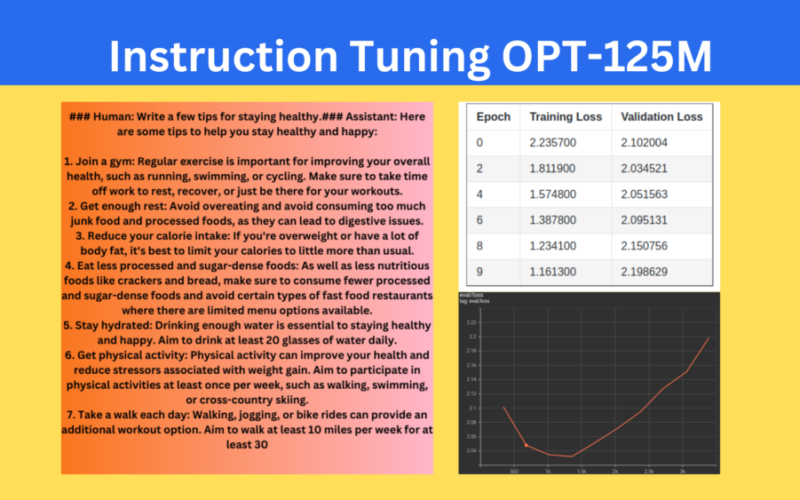

Large language models are pretrained on terabytes of language datasets. However, the pretraining dataset and strategy teach the model to generate the next token or word. In a real world sense, this is not much useful. Because in the end, we want to accomplish a task using the LLM, either through chat or instruction. We can do so through fine-tuning an LLM. Generally, we call this instruction tuning of the language model. To this end, in this article, we will use the OPT-125M model for instruction tuning. Figure 1. Output sample after instruction tuning OPT-125M on the Open Assistant Guanaco…