
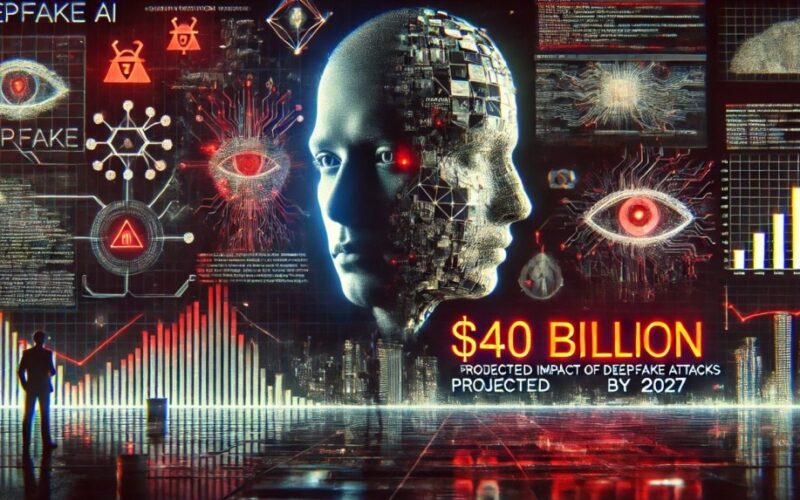

Deepfakes are now one of the fastest-growing forms of adversarial AI, and deepfake-related losses are expected to soar from $12.3 billion in 2023 to $40 billion by 2027, an astounding 32% compound annual growth rate. Deloitte sees deepfakes proliferating in the years ahead, with banking and financial services a primary target.

Deepfakes typify the cutting edge of adversarial AI attacks, with incidents rising 3,000% last year alone. Deepfake incidents are projected to rise by 50% to 60% in 2024, with 140,000 to 150,000 cases predicted globally this year.

The latest generation of generative AI apps, tools and platforms gives attackers what they need to create deepfake videos, impersonated voices, and fraudulent documents quickly and at very low cost. Pindrop's 2024 Voice Intelligence and Security Report estimates that deepfake fraud aimed at contact centers costs about $5 billion annually. The report underscores how severe a threat deepfake technology poses to banking and financial services.

Bloomberg reported last year that “there is already an entire cottage industry on the dark web that sells scamming software from $20 to thousands of dollars.” A recent infographic based on Sumsub’s Identity Fraud Report 2023 provides a global view of the rapid growth of AI-powered fraud.

Source: Statista, How Dangerous are Deepfakes and Other AI-Powered Fraud? March 13, 2024

Enterprises aren’t prepared for deepfakes and adversarial AI

Adversarial AI opens new attack vectors no one sees coming and produces a more complex, nuanced threatscape in which identity-driven attacks take priority.

Unsurprisingly, nearly one in three enterprises has no strategy for addressing the risks of an adversarial AI attack, which would most likely start with deepfakes of their key executives. Ivanti's latest research finds that 30% of enterprises have no plans for identifying and defending against adversarial AI attacks.

The Ivanti 2024 State of Cybersecurity Report found that 74% of enterprises surveyed are already seeing evidence of AI-powered threats. The vast majority, 89%, believe that AI-powered threats are just getting started. Of the CISOs, CIOs and IT leaders Ivanti interviewed, 60% fear their enterprises are not prepared to defend against AI-powered threats and attacks. Using a deepfake as part of an orchestrated strategy that includes phishing, software vulnerabilities, ransomware and API-related vulnerabilities is becoming more commonplace. This aligns with the threats that security professionals expect gen AI to make more dangerous.

Source: Ivanti 2024 State of Cybersecurity Report

Attackers concentrate deepfake efforts on CEOs

Enterprise software cybersecurity CEOs who prefer to stay anonymous regularly tell VentureBeat how deepfakes have progressed from easily identified fakes to recent videos that look legitimate. Voice and video deepfakes of industry executives appear to be a favorite attack strategy, aimed at defrauding their companies of millions of dollars. Adding to the threat, nation-states and large-scale cybercriminal organizations are aggressively doubling down on developing, hiring and growing their expertise in generative adversarial network (GAN) technologies. Of the thousands of CEO deepfake attempts this year alone, the one targeting the CEO of the world's biggest ad firm shows how sophisticated attackers are becoming.

In a recent Tech News Briefing with the Wall Street Journal, CrowdStrike CEO George Kurtz explained how improvements in AI are helping cybersecurity practitioners defend systems and also commented on how attackers are using AI. Kurtz spoke with WSJ reporter Dustin Volz about AI, the 2024 U.S. election, and threats posed by China and Russia.

“The deepfake technology today is so good. I think that’s one of the areas that you really worry about. I mean, in 2016, we used to track this, and you would see people actually have conversations with just bots, and that was in 2016. And they’re literally arguing or they’re promoting their cause, and they’re having an interactive conversation, and it’s like there’s nobody even behind the thing. So I think it’s pretty easy for people to get wrapped up into that’s real, or there’s a narrative that we want to get behind, but a lot of it can be driven and has been driven by other nation states,” Kurtz said.

CrowdStrike's Intelligence team has invested a significant amount of time in understanding the nuances of what makes a convincing deepfake and in which direction the technology is moving to attain maximum impact on viewers.

Kurtz continued, “And what we’ve seen in the past, we spent a lot of time researching this with our CrowdStrike intelligence team, is it’s a little bit like a pebble in a pond. Like you’ll take a topic or you’ll hear a topic, anything related to the geopolitical environment, and the pebble gets dropped in the pond, and then all these waves ripple out. And it’s this amplification that takes place.”

CrowdStrike is known for its deep expertise in AI and machine learning (ML) and for its unique single-agent model, which has proven effective in driving its platform strategy. Given that expertise, it's understandable that its teams would experiment with deepfake technologies.

“And if now, in 2024, with the ability to create deepfakes, and some of our internal guys have made some funny spoof videos with me and it just to show me how scary it is, you could not tell that it was not me in the video. So I think that’s one of the areas that I really get concerned about,” Kurtz said. “There’s always concern about infrastructure and those sort of things. Those areas, a lot of it is still paper voting and the like. Some of it isn’t, but how you create the false narrative to get people to do things that a nation-state wants them to do, that’s the area that really concerns me.”

Enterprises need to step up to the challenge

Enterprises risk losing the AI war if they don't keep pace with how quickly attackers are weaponizing AI for deepfake attacks and all other forms of adversarial AI. Deepfakes have become so commonplace that the Department of Homeland Security has issued a guide, Increasing Threats of Deepfake Identities.
