AI While You Were Out IRL

The speed and breadth of AI R&D these days is mind-boggling! This weekend I've been immersed in IRL joys, including being trapped in airplanes, trains and automobiles. (Apologies for publishing this a day later than usual.) This issue is a bit like an AP News bulletin on what happened in AI while I was AFK.

The latest version of DeepSeek-Coder is now the top open model for coding. DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that achieves performance comparable to GPT-4 Turbo on code-specific tasks. Repo & paper: DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence.

The new Hermes merge model beats Llama-3 70B. Hermes 2 Theta Llama-3 70B is an instruction-tuned model that merges Hermes 2 Pro with Meta's Llama-3. The merged model matches GPT-4 on MT-Bench and surpasses Llama-3 70B Instruct on every reported benchmark. Read more here: Hermes 2 Theta Llama-3 70B.

This new method generates high-quality 3D meshes from a single image. Unique3D is a novel image-to-3D framework that efficiently generates high-quality 3D meshes from single-view images, with state-of-the-art generation fidelity and strong generalisability. Paper, demo & code: Unique3D: High-Quality and Efficient 3D Mesh Generation from a Single Image.

This is the first scalable, reliable method for automatically generating instruction-following training data. The Qwen team at Alibaba introduced AutoIF, a new approach that is set to transform instruction following and the way data is generated for Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). Paper: Self-play with Execution Feedback: Improving Instruction-following Capabilities of Large Language Models.

A new, open-source, large-scale instruct dataset to lower the barriers to SFT. Building large-scale, high-quality instruct datasets is very expensive, so until now they have been within reach of tech giants only. To break that barrier, BAAI (Beijing Academy of Artificial Intelligence) just announced Infinity Instruct, a project that aims to develop open, large-scale, high-quality instruction datasets. Check out: Infinity Instruct Dataset Project.

A new method that extends the length of GenAI videos. Most existing GenAI video models, including OpenAI's Sora, can only generate short video clips. Researchers at Alibaba just introduced ExVideo, a novel post-tuning method for video synthesis that lets models produce longer videos, up to 128 frames, at a lower cost. Paper, demo, tech report: ExVideo: Extending Video – Enhancing the capability of video generation models.

MSFT open-sources a new vision foundation model that is small and powerful. Microsoft just introduced Florence-2, a VLM with strong zero-shot and fine-tuning capabilities across a wide range of vision tasks. Despite its small size, it performs on par with models many times larger. Its strength comes less from the architecture than from the large-scale FLD-5B training dataset. Blog review, paper, and notebooks here: Florence-2: Open Source Vision Foundation Model by Microsoft.

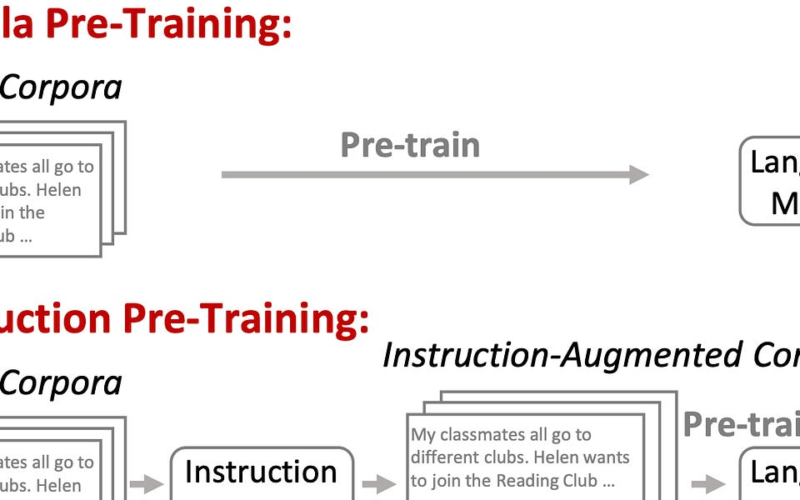

MSFT introduces a new method that enhances base model pre-training. This new method, called Instruction Pre-Training, 1) enhances generalisation, 2) improves pre-training efficiency, and 3) improves task performance. Paper and models: Instruction Pre-Training: Language Models are Supervised Multitask Learners.

Anthropic introduces Claude 3.5 Sonnet… people swear it's the best model on the planet. I've been using Claude for a while and really love it. The new Claude 3.5 Sonnet is free and super powerful. Its vision capabilities look impressive, as do its agentic coding capabilities, including unit testing. I really like Artifacts, a dedicated window alongside the chat that works as a sort of dynamic workspace. The team at Vellum compared Claude 3.5 Sonnet vs. GPT-4o. Meanwhile, the awesome Jeremy just introduced Claudette, a new friend that makes Claude 3.5 Sonnet even nicer to use. That is cool stuff.

So much AI stuff happening! Have a nice week.

-

Hyper-Relational Graphs: The Key to More Intelligent RAG Systems

-

DeepMind – V2A Soundtrack AI Generation for Generative Video

-

[interactive viz] How AI is Creating Havoc in Global Power Systems

-

TextGrad: Automatic “Differentiation” via Text (code, paper, tutorials)

-

PlanRAG: Plan-then-RAG for LLMs as Decision Makers (repo, paper)

-

Stanford et al. – The Largest Study on How to Optimise Prompts in LM Programs

-

From Pixels to Prose: A Dataset with 16M Dense Image Captions

-

ShareGPT4Video: 4.8M Multi-modal Video Captions by GPT4-Vision

Tips? Suggestions? Feedback? Email Carlos.

Curated by @ds_ldn in the middle of the night.
