[Submitted on 19 Jun 2024]

Title: M3T: Multi-Modal Medical Transformer to bridge Clinical Context with Visual Insights for Retinal Image Medical Description Generation

Authors: Nagur Shareef Shaik, Teja Krishna Cherukuri, Dong Hye Ye

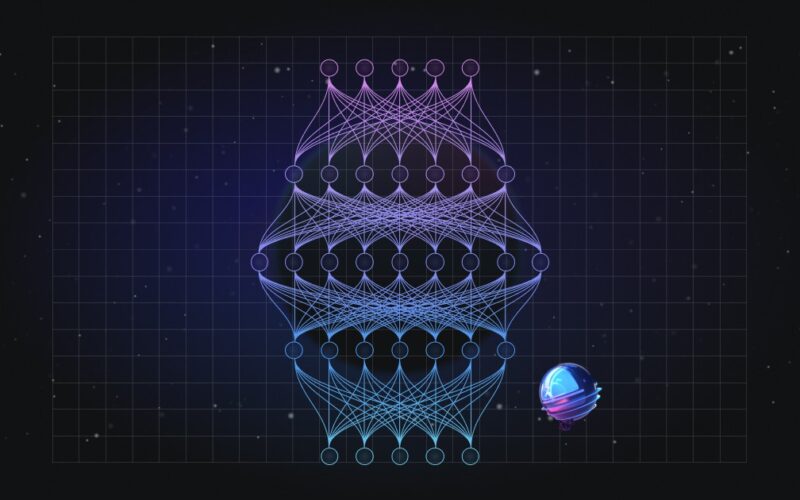

Abstract: Automated retinal image medical description generation is crucial for streamlining medical diagnosis and treatment planning. Existing challenges include the reliance on learned retinal image representations, difficulties in handling multiple imaging modalities, and the lack of clinical context in visual representations. Addressing these issues, we propose the Multi-Modal Medical Transformer (M3T), a novel deep learning architecture that integrates visual representations with diagnostic keywords. Unlike previous studies focusing on specific aspects, our approach efficiently learns contextual information and semantics from both modalities, enabling the generation of precise and coherent medical descriptions for retinal images. Experimental studies on the DeepEyeNet dataset validate the success of M3T in meeting ophthalmologists’ standards, demonstrating a substantial 13.5% improvement in BLEU@4 over the best-performing baseline model.

Submission history

From: Nagur Shareef Shaik

[v1] Wed, 19 Jun 2024 00:46:48 UTC (4,642 KB)
