NVIDIA researchers are presenting new visual generative AI models and techniques at the Computer Vision and Pattern Recognition (CVPR) conference this week in Seattle. The advancements span areas like custom image generation, 3D scene editing, visual language understanding, and autonomous vehicle perception.

“Artificial intelligence, and generative AI in particular, represents a pivotal technological advancement,” said Jan Kautz, VP of learning and perception research at NVIDIA.

“At CVPR, NVIDIA Research is sharing how we’re pushing the boundaries of what’s possible — from powerful image generation models that could supercharge professional creators to autonomous driving software that could help enable next-generation self-driving cars.”

Of the more than 50 NVIDIA research projects being presented, two papers have been selected as finalists for CVPR’s Best Paper Awards – one exploring the training dynamics of diffusion models and another on high-definition maps for self-driving cars.

Additionally, NVIDIA has won the CVPR Autonomous Grand Challenge’s End-to-End Driving at Scale track, outperforming over 450 entries globally. The win highlights NVIDIA’s pioneering work in using generative AI for comprehensive self-driving vehicle models, and the entry also earned an Innovation Award from CVPR.

One of the headlining research projects is JeDi, a new technique that allows creators to rapidly customise diffusion models – the leading approach for text-to-image generation – to depict specific objects or characters using just a few reference images, rather than the time-intensive process of fine-tuning on custom datasets.

Another breakthrough is FoundationPose, a new foundation model that can instantly understand and track the 3D pose of objects in videos without per-object training. It set a new performance record and could unlock new AR and robotics applications.

NVIDIA researchers also introduced NeRFDeformer, a method to edit the 3D scene captured by a Neural Radiance Field (NeRF) using a single 2D snapshot, rather than having to manually reanimate changes or recreate the NeRF entirely. This could streamline 3D scene editing for graphics, robotics, and digital twin applications.

On the visual language front, NVIDIA collaborated with MIT to develop VILA, a new family of vision language models that achieve state-of-the-art performance in understanding images, videos, and text. With enhanced reasoning capabilities, VILA can even comprehend internet memes by combining visual and linguistic understanding.

NVIDIA’s visual AI research spans numerous industries, including over a dozen papers exploring novel approaches for autonomous vehicle perception, mapping, and planning. Sanja Fidler, VP of NVIDIA’s AI Research team, is presenting on the potential of vision language models for self-driving cars.

The breadth of NVIDIA’s CVPR research exemplifies how generative AI could empower creators and accelerate automation in manufacturing and healthcare, while propelling autonomy and robotics forward.

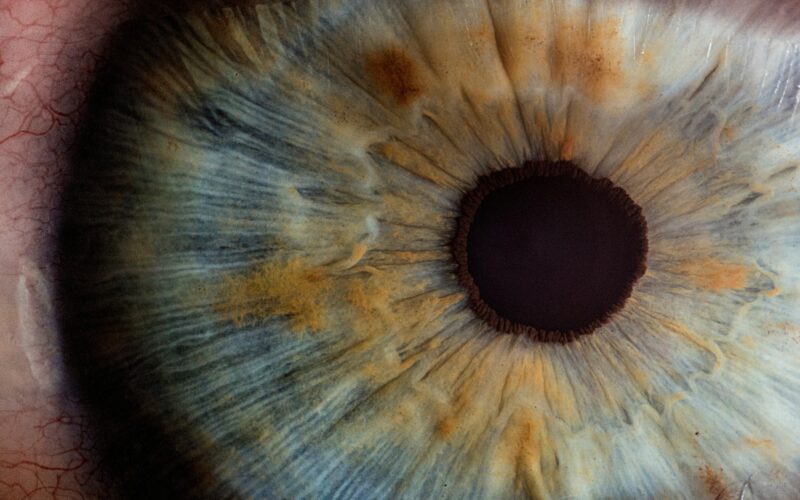

(Photo by v2osk)

