(agsandrew/Shutterstock)

We are now in an era where large foundation models (large-scale, general-purpose neural networks pre-trained in a self-supervised manner on large amounts of diverse data) are transforming fields like computer vision, natural language processing, and, more recently, time series forecasting. These models are reshaping time series forecasting by enabling zero-shot forecasting: making predictions on new, unseen data without retraining for each dataset. This significantly cuts development time and costs by streamlining the process of creating and fine-tuning models for different tasks.

The power of machine learning (ML) methods in time series forecasting first gained prominence during the M4 and M5 forecasting competitions, where hybrid and ML-based models outperformed traditional statistical methods for the first time. In the M5 competition (2020), advanced models like LightGBM, DeepAR, and N-BEATS demonstrated the effectiveness of incorporating exogenous variables, which are factors like weather or holidays that influence the data but aren’t part of the core time series. This approach led to unprecedented forecasting accuracy.

These competitions highlighted the importance of cross-learning from multiple related series and paved the way for foundation models designed specifically for time series analysis. They also spurred interest in ML for time series forecasting, as ML models are increasingly overtaking statistical methods thanks to their ability to recognize complex temporal patterns and integrate exogenous variables. (Note: Statistical methods still often outperform ML models for short-term univariate time series forecasting.)

Timeline of Foundation Forecasting Models

In October 2023, TimeGPT-1 was published as one of the first foundation forecasting models, designed to generalize across diverse time series datasets without requiring dataset-specific training. Unlike traditional forecasting methods, foundation forecasting models leverage vast amounts of pre-training data to perform zero-shot forecasting. This allows businesses to avoid the lengthy and costly process of training and tuning models for specific tasks, offering a highly adaptable solution for industries dealing with dynamic and evolving data.

Multiple series forecasting with TimeGPT-1 (Credit: TimeGPT-1 paper)

Then, in February 2024, Lag-Llama was released. It is a decoder-only transformer for univariate probabilistic forecasting that uses lagged values of a series as input features to capture lagged dependencies, which are temporal correlations between past values and future outcomes in a time series. Lagged dependencies are especially important in domains like finance and energy, where current trends are often heavily influenced by past events over extended periods. By capturing these dependencies efficiently, Lag-Llama improves forecasting accuracy in scenarios where patterns from the distant past shape what comes next.
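To make lagged dependencies concrete, here is a minimal sketch of how lag features can be built from a univariate series. The helper name `lag_features` and the lag set (1 and 7 days) are hypothetical choices for illustration; Lag-Llama selects its own lag indices based on the data’s frequency, and this is not its implementation.

```python
from typing import Dict, List, Optional, Sequence


def lag_features(
    series: Sequence[float], lags: Sequence[int]
) -> List[Dict[str, Optional[float]]]:
    """Build one feature row per time step t holding y[t - lag] for each lag.

    Hypothetical helper for illustration, not Lag-Llama's code. Lags that
    reach back before the start of the series are reported as None.
    """
    rows: List[Dict[str, Optional[float]]] = []
    for t in range(len(series)):
        row: Dict[str, Optional[float]] = {}
        for lag in lags:
            row[f"lag_{lag}"] = series[t - lag] if t - lag >= 0 else None
        rows.append(row)
    return rows


# Example: daily values with a 1-day and a 7-day (weekly) lag.
daily = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0]
features = lag_features(daily, lags=[1, 7])
print(features[7])  # {'lag_1': 16.0, 'lag_7': 10.0}
```

A model that sees `lag_7` next to the most recent values can pick up weekly seasonality directly, which is the kind of long-memory signal the paragraph describes.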

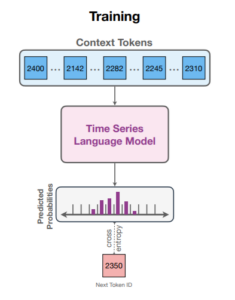

In March 2024, Chronos, a simple yet highly effective framework for pre-trained probabilistic time series models, was introduced. Chronos tokenizes time series values, converting continuous numerical data into a fixed vocabulary of discrete tokens, through scaling and quantization. This allows it to apply transformer-based language models, typically used for text generation, to time series data. Transformers excel at identifying patterns in sequences, and by treating a time series as a sequence of tokens, Chronos enables these models to predict future values effectively. Chronos is based on the T5 model family (ranging from 20M to 710M parameters) and was pre-trained on public and synthetic datasets. Benchmarking across 42 datasets showed that Chronos significantly outperforms other methods on datasets that were part of its training corpus and delivers comparable, and occasionally superior, zero-shot performance on new datasets relative to models trained specifically on them. This versatility makes Chronos a powerful tool for forecasting in industries like retail, energy, and healthcare, where it generalizes well across diverse data sources.
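The scaling-and-quantization step can be sketched as follows. This is a simplified illustration, assuming mean scaling (dividing by the mean absolute value of the context) followed by uniform binning, which is how the Chronos paper describes its tokenizer. The function names, the 7-bin vocabulary, and the [-3, 3] range are hypothetical and far smaller than what Chronos actually uses, and special tokens such as padding are omitted.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple


def mean_scale(context: Sequence[float]) -> Tuple[List[float], float]:
    """Divide every value by the mean absolute value of the context window."""
    scale = sum(abs(x) for x in context) / len(context)
    if scale == 0.0:
        scale = 1.0  # all-zero context: leave values unchanged
    return [x / scale for x in context], scale


def uniform_bin_centers(num_bins: int, low: float, high: float) -> List[float]:
    """Evenly spaced bin centers from low to high (inclusive)."""
    step = (high - low) / (num_bins - 1)
    return [low + i * step for i in range(num_bins)]


def quantize(values: Sequence[float], centers: Sequence[float]) -> List[int]:
    """Map each scaled value to a token id (the index of its bin).

    Bin edges sit halfway between neighbouring centers; values outside the
    covered range fall into the first or last bin.
    """
    edges = [(a + b) / 2 for a, b in zip(centers, centers[1:])]
    return [bisect_right(edges, v) for v in values]


def dequantize(tokens: Sequence[int], centers: Sequence[float], scale: float) -> List[float]:
    """Map token ids back to bin centers and undo the scaling."""
    return [centers[t] * scale for t in tokens]


# Illustrative settings only: 7 bins spanning [-3, 3].
centers = uniform_bin_centers(num_bins=7, low=-3.0, high=3.0)
scaled, scale = mean_scale([1.0, 3.0, 5.0, 3.0])
tokens = quantize(scaled, centers)
print(scale, tokens)                       # 3.0 [3, 4, 5, 4]
print(dequantize(tokens, centers, scale))  # [0.0, 3.0, 6.0, 3.0]
```

The round trip is lossy (1.0 comes back as 0.0 and 5.0 as 6.0), which is the price of a finite vocabulary; a language model then predicts a distribution over these token ids rather than raw numbers.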

Chronos training (Image credit: Chronos: Learning the Language of Time Series)

In April 2024, Google launched TimesFM, a decoder-only foundation model pre-trained on 100 billion real-world time points. Unlike transformer models that use both an encoder and a decoder, TimesFM generates forecasts autoregressively, producing one output patch (a block of consecutive future values) at a time, conditioned only on the values that came before, which suits time series forecasting well. Foundation models like TimesFM also differ from traditional transformer models, which typically require task-specific training and are less versatile across domains. TimesFM’s ability to provide accurate out-of-the-box predictions in retail, finance, and the natural sciences makes it highly valuable, since it removes much of the need to retrain on new time series data.
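Patch-level autoregressive decoding can be sketched in a few lines. In this sketch the toy `linear_trend_model`, the `max_context` cap, and the 2-value patch length are illustrative assumptions standing in for TimesFM’s trained network and its real context and patch sizes; it is not Google’s code.

```python
from typing import Callable, List, Sequence

# A "model" is any function mapping a context window to the next output patch.
# This is a hypothetical stand-in for a trained decoder-only network.
PatchModel = Callable[[Sequence[float]], List[float]]


def autoregressive_forecast(
    model: PatchModel, context: Sequence[float], horizon: int, max_context: int
) -> List[float]:
    """Roll a patch-level decoder forward until `horizon` values exist.

    Each predicted patch is appended to the history and fed back in, so later
    patches are conditioned only on past (observed or predicted) values.
    """
    history = list(context)
    forecast: List[float] = []
    while len(forecast) < horizon:
        window = history[-max_context:]  # keep only the most recent values
        patch = model(window)
        if not patch:
            raise ValueError("model returned an empty patch")
        forecast.extend(patch)
        history.extend(patch)
    return forecast[:horizon]


def linear_trend_model(window: Sequence[float], patch_len: int = 2) -> List[float]:
    """Toy stand-in: extend the last observed step for `patch_len` values."""
    step = window[-1] - window[-2]
    return [window[-1] + k * step for k in range(1, patch_len + 1)]


print(autoregressive_forecast(linear_trend_model, [1.0, 2.0, 3.0], horizon=5, max_context=8))
# [4.0, 5.0, 6.0, 7.0, 8.0]
```

Emitting several values per step means fewer decoding passes for a long horizon than strict one-value-at-a-time generation, while still conditioning each new patch only on the past.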

In May 2024, Salesforce introduced Moirai, an open source foundation forecasting model designed to support probabilistic zero-shot forecasting and handle exogenous features. Moirai (short for Masked Encoder-based Universal Time Series Forecasting Transformer) tackles challenges in time series forecasting such as cross-frequency learning, accommodating an arbitrary number of variates, and managing varying distributional properties. It is pre-trained on the Large-Scale Open Time Series Archive (LOTSA), which includes more than 27 billion observations across nine domains. With techniques like Any-Variate Attention and flexible parametric distributions, Moirai delivers scalable zero-shot forecasting on diverse datasets without task-specific retraining, marking a significant step toward universal time series forecasting.
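The core idea behind Any-Variate Attention, flattening all variates into one token sequence and telling attention only whether two tokens share a variate, can be sketched as below. This is a simplified illustration under stated assumptions: the fixed `same`/`cross` values stand in for biases that Moirai learns, the time index is carried along but its positional encoding is omitted, and the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

# (variate_id, time_id, patch values)
Token = Tuple[int, int, List[float]]


def flatten_variates(series: Sequence[Sequence[float]], patch_len: int) -> List[Token]:
    """Turn a multivariate series into one flat sequence of patch tokens.

    Each token remembers which variate it came from and its patch index in
    time, so any number of variates fits into a single sequence.
    """
    tokens: List[Token] = []
    for variate_id, values in enumerate(series):
        for time_id in range(len(values) // patch_len):
            start = time_id * patch_len
            tokens.append((variate_id, time_id, list(values[start:start + patch_len])))
    return tokens


def variate_bias(tokens: Sequence[Token], same: float, cross: float) -> List[List[float]]:
    """Attention bias: one value for same-variate pairs, another for cross-variate pairs.

    The bias depends only on whether two tokens share a variate, not on the
    variate ids themselves, so reordering the variates does not change it.
    """
    return [[same if a[0] == b[0] else cross for b in tokens] for a in tokens]


temperature = [20.0, 21.0, 22.0, 23.0]
load = [5.0, 6.0, 7.0, 8.0]
tokens = flatten_variates([temperature, load], patch_len=2)
print([(v, t) for v, t, _ in tokens])  # [(0, 0), (0, 1), (1, 0), (1, 1)]
bias = variate_bias(tokens, same=1.0, cross=-1.0)
print(bias[0])  # [1.0, 1.0, -1.0, -1.0]
```

Because nothing in this scheme is tied to a fixed number or order of variates, the same model can accept a two-variate dataset today and a ten-variate one tomorrow.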

IBM’s Tiny Time Mixers (TTM), released in June 2024, offer a lightweight alternative to transformer-based time series foundation models. Instead of using the attention mechanism of transformers, TTM is an MLP-based model built from fully connected layers. Innovations like adaptive patching and resolution prefix tuning allow TTM to generalize effectively across datasets with different resolutions while handling multivariate forecasting and exogenous variables. Its efficiency makes it well suited to low-latency environments with limited computational resources.
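To show what an attention-free, MLP-based forecaster looks like, here is a toy forward pass in the spirit of the TSMixer-style design that TTM builds on: the context is cut into patches, one fully connected layer mixes information across patches and another within each patch, and a linear head produces the horizon. Everything here is an assumption for illustration: the class and parameter names are hypothetical, the weights are random and untrained, and TTM’s adaptive patching, resolution prefix tuning, and normalization layers are left out.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], bias[out])


def init_layer(out_dim: int, in_dim: int, rng: random.Random) -> Layer:
    """Random fully connected layer (untrained; for shape illustration only)."""
    scale = 1.0 / math.sqrt(in_dim)
    weights = [[rng.gauss(0.0, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim


def dense(x: Sequence[float], layer: Layer) -> List[float]:
    weights, bias = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def gelu(x: float) -> float:
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


class TinyPatchMixer:
    """MLP-only forecaster: patch, mix across patches, mix within patches, project."""

    def __init__(self, context_len: int, patch_len: int, horizon: int, seed: int = 0):
        if context_len % patch_len:
            raise ValueError("context_len must be a multiple of patch_len")
        rng = random.Random(seed)
        self.context_len = context_len
        self.patch_len = patch_len
        self.n_patches = context_len // patch_len
        self.time_mix = init_layer(self.n_patches, self.n_patches, rng)
        self.feature_mix = init_layer(patch_len, patch_len, rng)
        self.head = init_layer(horizon, context_len, rng)

    def forward(self, context: Sequence[float]) -> List[float]:
        if len(context) != self.context_len:
            raise ValueError("context has the wrong length")
        p = self.patch_len
        patches = [list(context[i:i + p]) for i in range(0, self.context_len, p)]
        # Time mixing: for each position inside a patch, a layer over the patch axis (residual).
        for j in range(p):
            column = [patch[j] for patch in patches]
            mixed = dense(column, self.time_mix)
            for i, patch in enumerate(patches):
                patch[j] += gelu(mixed[i])
        # Feature mixing: a layer within each patch (residual).
        patches = [[x + gelu(m) for x, m in zip(patch, dense(patch, self.feature_mix))]
                   for patch in patches]
        flat = [x for patch in patches for x in patch]
        return dense(flat, self.head)  # linear head to the forecast horizon


model = TinyPatchMixer(context_len=16, patch_len=4, horizon=6, seed=42)
forecast = model.forward([float(i) for i in range(16)])
print(len(forecast))  # 6
```

Every operation is a matrix-vector product plus an elementwise activation, so cost grows linearly with the number of patches instead of quadratically as with self-attention, which is where the efficiency of this family comes from.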

MOMENT, also released in May 2024 by Carnegie Mellon University’s Auton Lab, is a family of open source foundation models designed for general-purpose time series analysis. MOMENT addresses three major challenges in pre-training on time series data: the lack of a large, cohesive public time series repository; the diverse characteristics of time series data (such as variable sampling rates and resolutions); and the absence of established benchmarks for evaluating models. To address these, the team assembled the Time Series Pile, a collection of public time series data across multiple domains, and developed a benchmark to evaluate MOMENT on short- and long-horizon forecasting, classification, anomaly detection, and imputation. MOMENT performs well in zero-shot and limited-supervision settings, needing little or no task-specific fine-tuning, which makes it a scalable, general-purpose option for time series modeling.
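MOMENT is pre-trained by masking parts of a series and learning to reconstruct them. The sketch below shows that objective under simplifying assumptions: the series is split into patches, some patches are hidden, and the error is scored only on the hidden ones. The `mean_fill` function is a deliberately naive, hypothetical stand-in for MOMENT’s transformer encoder, and the patch length and 0.3 mask ratio are illustrative values rather than MOMENT’s settings.

```python
import random
from typing import List, Sequence, Set


def patchify(series: Sequence[float], patch_len: int) -> List[List[float]]:
    """Split a series into non-overlapping patches (trailing remainder dropped)."""
    return [list(series[i:i + patch_len])
            for i in range(0, len(series) - patch_len + 1, patch_len)]


def sample_mask(n_patches: int, mask_ratio: float, rng: random.Random) -> Set[int]:
    """Pick which patch indices to hide from the model."""
    k = max(1, round(n_patches * mask_ratio))
    return set(rng.sample(range(n_patches), k))


def mean_fill(patches: List[List[float]], masked: Set[int]) -> List[List[float]]:
    """Toy stand-in for the encoder: fill masked patches with the mean of visible values."""
    visible = [x for i, p in enumerate(patches) if i not in masked for x in p]
    if not visible:
        raise ValueError("at least one patch must stay visible")
    fill = sum(visible) / len(visible)
    return [[fill] * len(p) if i in masked else list(p) for i, p in enumerate(patches)]


def masked_mse(original: List[List[float]], reconstructed: List[List[float]],
               masked: Set[int]) -> float:
    """Reconstruction error measured only on the patches the model could not see."""
    errors = [(a - b) ** 2 for i in masked for a, b in zip(original[i], reconstructed[i])]
    return sum(errors) / len(errors)


patches = patchify([1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 4.0, 4.0], patch_len=2)
masked = {0, 3}
print(masked_mse(patches, mean_fill(patches, masked), masked))  # 2.25

rng = random.Random(0)
print(len(sample_mask(n_patches=8, mask_ratio=0.3, rng=rng)))  # 2
```

A real encoder would drive this loss down by learning how visible context predicts hidden stretches; the same reconstruction ability is what carries over to tasks like imputation and anomaly detection.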

Together, these models represent a new frontier in time series forecasting. They offer industries across the board the ability to generate more accurate forecasts, identify intricate patterns, and improve decision-making, all while reducing the need for extensive, domain-specific training.

Future of Time Series and Language Models: Combining Text Data with Sensor Data

Looking ahead, combining time series models with language models is unlocking exciting innovations. Models like Chronos, Moirai, and TimesFM are pushing the boundaries of time series forecasting, but the next frontier is blending traditional sensor data with unstructured text for even better results.

Take the automobile industry, where sensor data can be combined with technician reports and service notes, processed through NLP, to get a complete view of potential maintenance issues. In healthcare, real-time patient monitoring is paired with doctors’ notes to predict health outcomes and support earlier diagnoses. Retail and rideshare companies use social media and event data alongside time series forecasts to better predict sales spikes or ride demand during major events.

By combining these two powerful data types, industries like IoT, healthcare, and logistics are gaining a deeper, more dynamic understanding of what’s happening—and what’s about to happen—leading to smarter decisions and more accurate predictions.

About the author: Anais Dotis-Georgiou is a Developer Advocate for InfluxData with a passion for making data beautiful with the use of Data Analytics, AI, and Machine Learning. She takes the data that she collects, does a mix of research, exploration, and engineering to translate the data into something of function, value, and beauty. When she is not behind a screen, you can find her outside drawing, stretching, boarding, or chasing after a soccer ball.
