Dealing with rater disagreement is becoming more important in AI, especially for LLMs and in specialized domains such as health. In the past year, I helped open source two datasets allowing to study rater disagreement in the health domain: a relabeling of MedQA, a key benchmark for evaluating medical LLMs, and a dataset including differential diagnosis ratings for skin condition classification. Both are available on GitHub.

Handling rater disagreement is becoming more important, especially for evaluating large language models (LLMs) but also in domains such as health. In the past few years, I was involved in various projects that had to deal with rater disagreement for evaluating AI models. Unfortunately, I realized that for many publicly available datasets, the raw ratings are not made available. For some datasets, such as CIFAR10, follow-up work may have provided human ratings, in the case of CIFAR10 this dataset is called CIFAR10-H, but it remains the exception. This also makes it difficult to share expertise and benchmark different approaches effectively.

In this article, I want to share two datasets that we released this year: First, the “Dermatology DDx” dataset based on our work on ambiguous ground truth in skin condition classification [1, 2]. And second, the “Relabeled MedQA” dataset that we collected as part of our work on Med-Gemini [3]. Both include raw ratings in various formats and have been made available on GitHub, including code for analysis.

Relabeled MedQA

A researcher is conducting an experiment on the mouse kindey to study the relative concentrations between the tabular fluid and plasma of a number of substances along the proximal convoluted tubule. Based on the graph shown in figure A, which of the following best describes the tabular fluid-to-plasma concentration ratio of urea?

Figure 1: Example of a MedQA USMLE test question that referes to a missing figure.

The MedQA dataset, especially it’s USMLE portion including training questions for the US medical licensing exam, has become a key benchmark in evaluating and comparing medical LLMs. Both the MedPrompt paper by Microsoft as well as our work on Med-Gemini emphasized accuracy on MedQA. However, looking more closely at typical MedQA questions raised concerns whether all questions remain appropriate for evaluation since the release. For example, we found questions that included auxiliary information such as lab reports or images that are not included in the context. An example is included in Figure 1 above. Moreover, while the original paper benchmarked performance of human clinicians on the dataset, the questions themselves were obtained from exam preparation websites (see Table A.1 in the paper). This also means that it is unclear how up-to-date the questions and their answers are and discussions with clinicians suggested that there could be problems with MedQA’s labels.

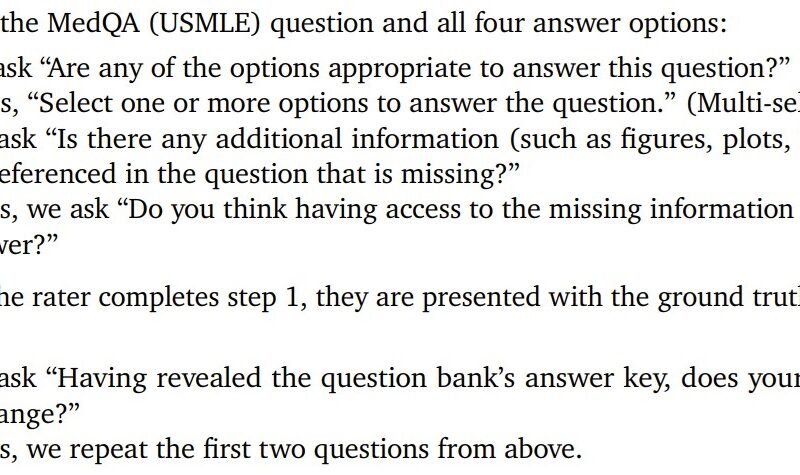

To make sure we report reliable numbers on MedQA, we thus decided to relabel the MedQA USMLE test set to (a) determine questions that refer to missing information, (b) identify labeling errors, and (c) find questions that allow for multiple correct answers. To accomplish this, we followed a two-step study design, as shown in Figure 2, where we first ask clinicians to answer a MedQA question themselves before being shown the ground truth and given the option to correct their answer — agreeing with the ground truth label or not. We allow raters to select multiple answers if they think multiple answers might be correct. We obtained at least 3 ratings per question. To deal with disagreement among these three raters, we use a simple bootstrapping strategy to evaluate LLMs with these new labels. This works as follows: we repeatedly sample 3 raters per question with replacement and then perform unanimous voting to decide whether to exclude a question in the evaluation (a) because of missing information, (b) because of a labeling error, or (c) because multiple answers were deemed correct. We then averaged accuracy across all bootstraps and created Figure 3 to summarize findings.

Figure 3: Results for Med-Gemini after filtering out questions unfit for evaluation based on bootstrapped ratings.

The full data can be found on GitHub below, including a Colab that performs the above analysis and produces the plot from Figure 3.

The MedQA questions with our annotations are available in medqa_relabelling.csv and include the following columns for each rating:

- An index column;

time: Time for the annotation task in milliseconds;worker_idan anonymized worker id;qid: a question id;question: the MedQA question;AthroughD: MedQA’s answer options;answer_idx: MedQA’s ground truth answer;info_missingand important_info_missing: whether the rater indicated that information in the question is missinig and whether this information was rated as important to answer the question;blind_answerableandseen_answerable: whether the rater determined that one or more of the options answers the question before (blind_) and after (seen_) revealing the ground truth answer;blind_answersandseen_answers: the selected answers if the question is answerable;seen_change: whether the rater updated their answer after revealing the ground truth.

Dermatology DDx

While rater disagreement is currently in the spotlight in the context of LLMs, it also matters in many standard classification tasks. For example, in the context of skin condition classification from images, we often allow dermatologists to select multiple possible conditions, in order of likelihood. This ordering of conditions can also include ties. I will refer to this format as differential diagnosis (ddx), which also motivates the name of the dataset. Allowing multiple ratings per example can give rise to quite complex instantiations of disagreement as shown in Figure 4 below for a particular tricky example.

Figure 4: Overview of the dermatology ddx dataset and a concrete examples illustrating the format of the specialist annotations.

Usually we would want to aggregate all these ratings to obtain a single ground truth condition that we can then evaluate accuracy against, for example, in the form of top-k accuracy (comparing the top-k predictions against the single ground truth condition). In our accompanying paper, we explored various statistical models to aggregate disagreeing raters. However, we can also follow a bootstrapping approach as for MedQA above: we sample a fixed number of raters with replacement and aggregate these ratings into a single ranking; we then take the top-1 of this ranking as ground truth condition and evaluate the model. Boostrapping disagreeing raters results in the obtained ground truth condition changing more often which is then taken into account when averaging across bootstraps.

Again, the data is available on GitHub — this includes predictions from our models and the dermatologist ratings; it does not include the original images because it is meant as a benchmark for rater aggregation methods:

The dataset is split across the following files:

-

data/dermatology_selectors.json: The expert annotations as partial rankings. These partial rankings are encoded as so-called “selectors”: For each case, there are multiple partial rankings, each partial ranking is a list of grouped classes (i.e., skin conditions). The example shown below comes from Figure 1 and describes a partial ranking where “Hemangioma” is ranked first followed by a group of three conditions, including “Melanocytic Nevus”, “Melanoma”, and “O/E”. In the JSON file, the conditions are encoded as numbers and the mapping of numbers to condition names can be found indata/dermatology_conditions.txt.['Hemangioma'], ['Melanocytic Nevus', 'Melanoma', 'O/E']

data/dermatology_predictions[0-4].json: Model predictions of models A to D in [1] as 1947 x 419 float arrays saved using numpy.savetxt with fmt=”%.3e”.data/dermatology_conditions.txt: Condition names for each class.data/dermatology_risks.txt: Risk category for each condition, where 0 corresponds to low risk, 1 to medium risk and 2 to high risk.

Conclusion

With both datasets, Relabeled MedQA and Dermatology DDx, we hope to enable more reliable evaluation on such benchmarks and foster more research on aggregating potentially disagreeing raters.

- [1] Stutz, David, et al. “Evaluating AI systems under uncertain ground truth: a case study in dermatology.” arXiv preprint arXiv:2307.02191 (2023).

- [2] Stutz, David, et al. “Conformal prediction under ambiguous ground truth.” arXiv preprint arXiv:2307.09302 (2023).

- [3] Saab, Khaled, et al. “Capabilities of gemini models in medicine.” arXiv preprint arXiv:2404.18416 (2024).

Source link

lol