Blog posts with images get 2.3x more engagement. Here’s the problem: we make a query engine for streaming tables. How the heck are you supposed to pick images for technical topics like the similarities between Deephaven and Materialize, viewing pandas DataFrames, gRPC tooling for getting data streams to the browser, using Kafka with Parquet for storage, or using Redpanda for streaming analytics?

As a small team of mostly engineers, we don’t have the time or budget to commission custom artwork for every one of our blog posts.

Our approach so far has been to spend 10 minutes scrolling through tangentially related but ultimately ill-fitting images on stock photo sites, download something not terrible, slap it in the front matter, and hit publish. Can AI-generated images from DALL-E make better blog thumbnails, do it cheaper, and generally be more fun? Yes, quant fans, it can.

I spent the weekend and $45 in OpenAI credits generating new thumbnails that better represent the content of all 100+ posts on our blog. For attribution, I’ve included the prompt used to create each image as the alt text on all our new thumbnails.

Preview: quick before and after

Here’s a page of replaced images: view the full blog for all 100+ images.

My favourite is the image below for a post discussing some of our pre-built Docker containers:

Prompt engineering means adjusting your input to the AI model to get the desired output, and it’s hard. For technical topics, the first challenge is coming up with a creative idea. My approach was to quickly re-read each post, note whatever images came to mind while reading, and look up images and logos related to those topics. Then I brainstormed a creative take on the content, or a metaphor for it. For example, our recent article announces a new Go client library, so I came up with the idea of a blue gopher (like the Go mascot) looking at streams of tabular data on multiple computer monitors. Sounds cool, but getting DALL-E to produce the image I had in my head wasn’t easy. It took me 4 tries just to get the gopher, rather than the monitors, to be blue, and another 5 to get an image I liked. I learned that the more specific you are, to the point of being redundant, the better.

Maybe it was because this was my first attempt, but with 100 more posts to go, I hoped I could get better with practice. It would be super cool to just feed DALL-E a whole blog post and have something great pop out, but even with some GPT-3 magic we probably aren’t there yet.

When you create an account you get 50 credits. You can buy more; 1 credit is 1 prompt (about $0.13), and each prompt gives you 4 images to choose from. While generous, 50 credits is not, in my opinion, enough to get good at writing prompts. My first few took 6 or 7 tries to get something acceptable. Now that I’ve written hundreds of prompts, I can often get what I want in 2 or 3 tries.

A basic prompt without a style modifier usually looks pretty bland: the result comes out a little cartoony, like a bad photo, or like a poor collage. Adding stylistic cues will greatly improve your results. Some quick tips:

“Prompt: cottagecore robot reading a book on a porch”

I added “artstation”, “cgsociety”, “4k”, and “digital art” to many of the prompts for this blog. While you wait the 10 seconds or so for your output, DALL-E also shows helpful tips with examples of adding style cues to your prompts.
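Because the same handful of modifiers ends up on many prompts, it can help to keep them in one place instead of retyping them. The sketch below is a hypothetical helper, not part of DALL-E or any OpenAI tool: it appends the modifiers named above to a subject as comma-separated phrases and skips any modifier the subject already contains. Joining with commas is an assumption about what reads well to the model, not a documented requirement.

```python
# Style cues reused across many thumbnails (the four listed above).
DEFAULT_MODIFIERS = ("artstation", "cgsociety", "4k", "digital art")


def build_prompt(subject: str, modifiers=DEFAULT_MODIFIERS) -> str:
    """Append style modifiers to a subject as comma-separated phrases.

    Modifiers already present in the subject (case-insensitive substring match)
    are skipped so the prompt does not repeat itself.
    """
    subject = subject.strip()
    lowered = subject.lower()
    extras = [m for m in modifiers if m and m.lower() not in lowered]
    return ", ".join([subject, *extras])


if __name__ == "__main__":
    # Prints the cottagecore robot prompt with all four modifiers appended.
    print(build_prompt("cottagecore robot reading a book on a porch"))
```

Keeping the modifiers in a single tuple also makes it easy to swap in a different set per post while leaving the subject wording alone.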

After playing with it a bit, I realized practice is good, but I needed to get better faster. Studying images on r/dalle2 gave me inspiration and ideas on how to craft better prompts. I also found this PDF e-book helpful.

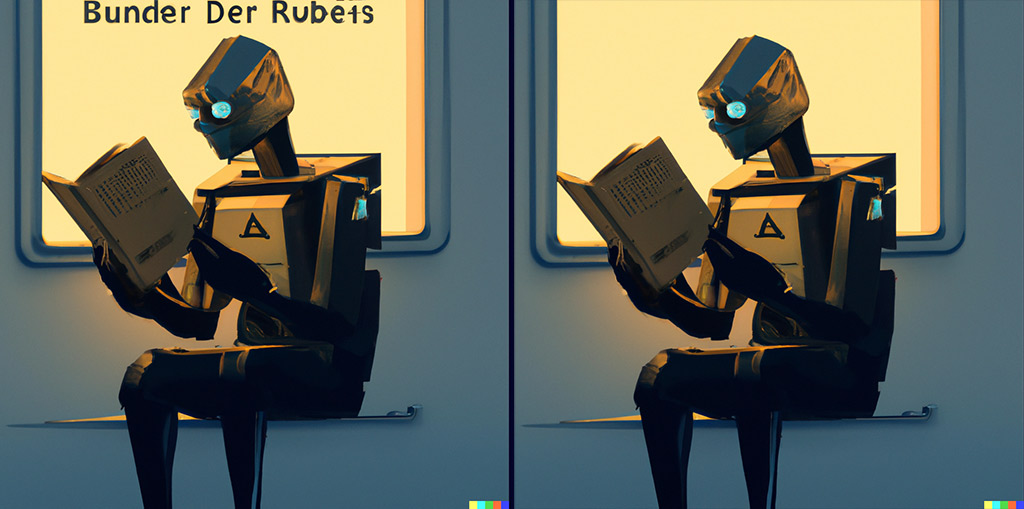

5. You may need to photoshop out gibberish text.

Sometimes my prompts produced images containing text. Unfortunately, DALL-E really struggles with text, and the results are often nonsensical. I thought this would be distracting in blog thumbnails, so I either photoshopped it out or photoshopped in corrected text. Imagen from Google is supposedly better at text, and I look forward to trying it someday. I’d appreciate any tips on wording a prompt to signal that I don’t want any text in the output.

6. Watch out for unexpected content violations.

A couple of times my prompt triggered a content violation, which gives you a warning and no output. An over-eager list of banned words can produce the occasional false positive. Once I used the word “shooting” to describe a beam of light shooting through the sky. Sounds fine, but I guess they don’t like the word “shooting” in any context. It would be better if the warning stated which word it didn’t like, as I was sometimes left guessing. Another time, I was referring to a blood sugar monitor; I’m guessing DALL-E won’t generate anything related to the word “blood”, even when the prompt itself is non-violent.
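The false positives above are what you would expect if a filter matched individual words without regard to context. This is only a sketch of that word-list hypothesis, with a made-up two-word blocklist; it is not how DALL-E’s filter is known to work. It also shows the behaviour wished for above: naming the words that triggered the rejection.

```python
import re

# Hypothetical blocklist for illustration only; not DALL-E's real list.
BLOCKLIST = frozenset({"blood", "shooting"})


def flagged_words(prompt: str, blocklist=BLOCKLIST) -> list:
    """Return blocklisted words found in a prompt, sorted and de-duplicated.

    Matching is per word and ignores context, which is exactly why harmless
    prompts such as "light shooting through the sky" get rejected.
    """
    words = re.findall(r"[a-z]+", prompt.lower())
    return sorted({w for w in words if w in blocklist})


def check_prompt(prompt: str) -> str:
    """Accept the prompt, or reject it while naming the offending words."""
    hits = flagged_words(prompt)
    if hits:
        return "Rejected: prompt contains " + ", ".join(repr(w) for w in hits)
    return "OK"


if __name__ == "__main__":
    for p in (
        "a beam of light shooting through the night sky",
        "a blood sugar monitor on a kitchen counter",
        "a blue gopher watching streaming tables on monitors",
    ):
        print(check_prompt(p))
```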

You might not get what you want in a single prompt, but you may be able to generate the pieces individually and assemble them in Photoshop, or combine multiple images. You can also upload an image back to DALL-E to edit it with AI inpainting, or to re-crop it. I intentionally did very little editing for our blog, limiting myself to removing gibberish text. If I were using it for a more serious purpose or to create art, I would have done more assembly of images. Using it as a tool in a traditional Photoshop-like workflow might be the real long-term value of AI image generation.

If you want twelve turkeys crossing a finish line, you are going to get anywhere between 4 and 20 turkeys. It doesn’t matter if you say “12”, “twelve”, or “a dozen”, or say it multiple times in multiple ways. If you only want 2 or 3 of something, that’ll work fine, but DALL-E struggles with higher numbers. Maybe it is a bit like a young child that can’t count that high? If you just want “hundreds” of something, it can do that, but the quality isn’t great.

Having an AI image generator doesn’t instantly make you a better artist, just like having a Canon 6D Mark II doesn’t make you a better photographer. Curation, and judging what looks good, is still important. I’m sure that when Photoshop debuted, old-school graphic artists lamented that it would kill the industry by making things too easy. It didn’t. These are just tools that will fit well into any artist’s process.

If I were the CEO of Adobe right now, I would be pushing to either train a competitive, top-tier AI image generator or bootstrap one with an acquisition like Midjourney, and then bet the farm on building an editor around it. A program where I could open a canvas of any size (not just 1024×1024) or an existing photo, select arbitrary parts of it, and prompt in what I want and where, would be one heck of a tool. Figma was a huge shift, and it’s eating Adobe’s lunch. I could see an AI-driven image editor either crushing Photoshop someday or becoming its best feature.

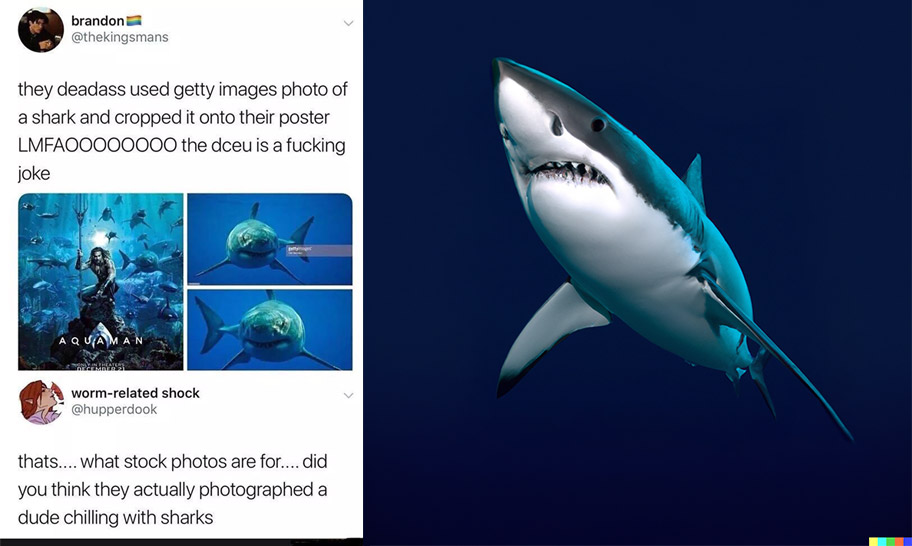

While the role of the artist isn’t going away soon, the role of stock image sites might. As someone who previously worked as a graphic designer and has spent thousands of dollars on stock images, I can definitely see a future where I ask for an alpha-masked blue shark to use as a base in whatever Photoshop client project needs it.

The largest stock photo company, Getty Images, recently went public (technically via a SPAC). I wouldn’t bet on its long-term success. Maybe it will stick around just for historical events and photos of real people?

I think AI image generation is perfect for creating images for slide decks. Slides so often need an image metaphor to accompany them, and this fills that need well. I’ve spent days building polished decks for conference presentations, CEOs, and sales teams, and I see a future where that work could be more self-serve. Please bake Imagen straight into Google Slides. Make it as easy as creating a new slide, clicking on the image placeholder, and typing in a prompt.

I had a blast replacing the thumbnails on our 100 or so blog posts with AI-generated images. Was it worth $45? I think so. On average, it took a couple of minutes and about 4-5 prompts per post to get something I was happy with. We were spending more time and money on stock images each month, for a worse result. Not only was this swap fun, but having unique and memorable images will help you, the reader, remember and retain our content better.
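As a rough check on the $45 figure, the arithmetic can be written out. This sketch assumes the numbers given earlier in the post: about $0.13 per credit, one credit per prompt, four images per prompt, and 50 free credits on sign-up. It ignores anything else that could affect the bill, so treat the results as estimates.

```python
import math

PRICE_PER_CREDIT = 0.13  # USD; one credit buys one prompt (figure from the post)
IMAGES_PER_PROMPT = 4    # each prompt returns four candidate images
FREE_CREDITS = 50        # credits granted when the account is created


def cost_per_image() -> float:
    """Effective price of a single candidate image."""
    return PRICE_PER_CREDIT / IMAGES_PER_PROMPT


def prompts_for_budget(budget_usd: float) -> int:
    """Total prompts available: whole credits the budget buys plus the free ones."""
    return math.floor(budget_usd / PRICE_PER_CREDIT) + FREE_CREDITS


def cost_for_posts(posts: int, prompts_per_post: float) -> float:
    """Spend needed for a batch of posts once the free credits are used up."""
    paid_prompts = max(0, math.ceil(posts * prompts_per_post) - FREE_CREDITS)
    return paid_prompts * PRICE_PER_CREDIT


if __name__ == "__main__":
    print(f"cost per image: ${cost_per_image():.4f}")
    print(f"prompts for $45: {prompts_for_budget(45)}")
    for rate in (4, 5):
        print(f"100 posts at {rate} prompts each: ${cost_for_posts(100, rate):.2f}")
```

At four prompts per post, 100 posts come to about $45.50 after the free credits, close to the $45 spent; at five prompts per post the estimate rises to about $58.50.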

Once I found modifiers I liked, I tended to reuse them along the way. It made me wonder whether we should develop a consistent style for our blog, so all our images look like a related set or share a signature style. But how do you even have a signature style when the AI is generating your images? How will this change art? Will it make news photos impossible to trust? I don’t know the answer to any of those questions.

If you like this post, and want to see what other images we come up with for our blog topics, consider subscribing.
