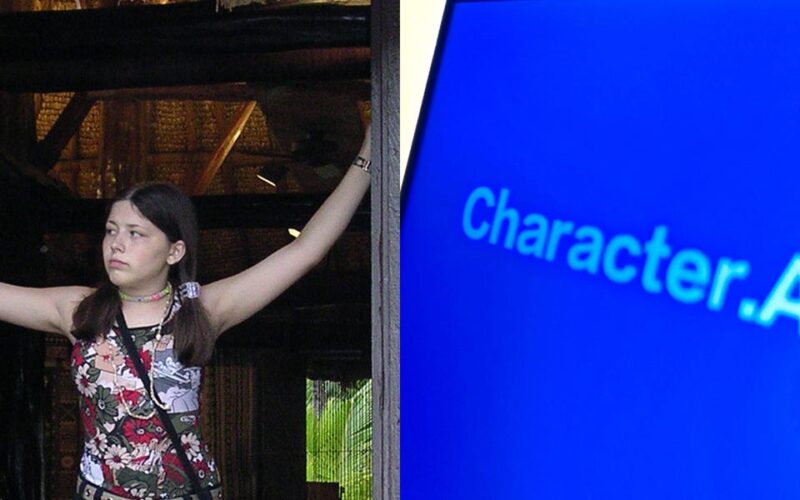

- Jennifer Ann Crecente, a high school girl murdered in 2006, recently reappeared as a chatbot on Character.ai.

- Her father told BI that he discovered the bot on Wednesday — he never gave consent to use her likeness.

- The bot was removed, but its existence raises ethical questions about AI.

Drew Crecente woke at 6:30 a.m. on Wednesday to find a Google Alert on his phone about his daughter.

It had been 18 years since Jennifer Ann, a high school senior and Crecente’s only child, was murdered by her ex-boyfriend in Austin. Grieving, Crecente had started a nonprofit in her name, working since 2006 to raise awareness for teenage dating violence.

But Crecente now learned she’d reappeared online, this time as an artificial intelligence chatbot on Character.ai, run by a San Francisco-based startup that struck a $2.7 billion deal with Google in August.

A wave of emotions struck Crecente — fury, confusion, and disgust all at once, he told Business Insider.

“Part of it was sorrow,” Crecente said. “Because here I am, having to once again be confronted with this terrible trauma that I’ve had to deal with for a long time.”

Crecente had no idea who created the chatbot or when it was made. He only knew that Google had indexed the bot that morning and, at 4:30 a.m., sent him the alert he'd set up to keep track of any mention of his daughter or his nonprofit.

Character.ai chatbots are typically created by users, who can upload names, photos, greetings, and other information about the persona.

In Jennifer Ann’s case, the bot used her name and yearbook photo, describing her as a “knowledgable and friendly AI character who can provide information on a wide range of topics.”

By the time Crecente discovered the bot, a counter on its profile showed it had already been used in at least 69 chats, per a screenshot he sent to BI.


The website also listed Jennifer Ann as an “expert in journalism” with expertise in video game news. Her uncle, Brian Crecente, founded the gaming news site Kotaku and co-founded its competitor site, Polygon.

Drew Crecente said he contacted Character.ai through its customer support form, asking the company to remove the chatbot mimicking Jennifer Ann and to retain all data on who uploaded the profile.

“I wanted to make sure that they put measures in place so that no other account using my daughter’s name or my daughter’s likeness could be created in the future,” he said.

He received an automated response containing his case number but no further information.

His brother, Brian, tweeted an angry message about the chatbot that morning, asking his almost 31,000 followers for help to “stop this sort of terrible practice.”

Character.ai responded to Brian’s post on X an hour and a half later, saying the Jennifer Ann chatbot was removed as it violated the firm’s policies on impersonation.

But Drew Crecente says he’s gotten nothing but silence from the company, despite reaching out to Character.ai on Wednesday.

“That is part of what is so infuriating about this, is that it’s not just about me or about my daughter,” Crecente said. “It’s about all of those people who might not have a platform, might not have a voice, might not have a brother who has a background as a journalist.”

“And because of that, they’re being harmed, but they have no recourse,” he added.

Character.ai spokesperson Cassie Lawrence confirmed to BI that the chatbot was deleted and said the company “will examine whether further action is warranted.”

“Character.ai takes safety on our platform seriously and moderates Characters both proactively and in response to user reports. We have a dedicated Trust and Safety team who review reports and take action in line with our policies,” she said.

She added that Character.ai uses “industry-standard blocklists and custom blocklists” to prevent impersonation.

Still, Crecente said that the company should have reached out to apologize and show that it would further protect his daughter’s identity. He is now exploring legal options.

“It’s just indescribable that a company with so much money appears to be so, I guess, indifferent to re-traumatizing people,” he said.

Using AI to revive the dead

AI has been used to create personas of dead people before, including by many who hope it can help them grieve the loss of a loved one. But the practice has raised ethical questions about the deceased’s consent, especially if the “resurrected” persona died before the advent of AI.

Crecente’s case is yet another example of new legal and ethical territory that AI has introduced to the world, Vincent Conitzer, head of technical AI engagement at the Institute for Ethics in AI at Oxford University, told BI.

Conitzer said questions remain about the extent to which AI companies should be held responsible for content created by their users.

“This is not quite impersonation in the sense that it seems transparent that it is an AI model,” Conitzer said of the chatbot mimicking Jennifer Ann. “But it also isn’t, for example, a parody. What rights do we have to our own style, mannerisms, and view of the world?”

As the world debates these AI dilemmas, the consequences are already deeply affecting people like Crecente.

Sue Morris, director of Bereavement Services at the Dana-Farber Cancer Institute in Boston, told BI that unexpectedly seeing a deceased loved one’s likeness still hits hard many years after they’ve passed, because our memories of them are hard-wired into our brains.

“That he wasn’t aware that his daughter’s likeness was being used, would no doubt have been a huge shock and added to the feelings of confusion and anger he was experiencing,” Morris said of Crecente.

Character.ai: Wildly popular and tied to Google

Character.ai was founded in November 2021 by former Google engineers Noam Shazeer and Daniel De Freitas. Shazeer was a lead author for the tech giant’s milestone Transformer paper and had worked to build an AI-powered chatbot there, but left with De Freitas when Google declined to publish the product at the time.


The idea behind their new startup was to let the public make their own bots, which they call Characters. These now include a smorgasbord of user-created personas modeled after public figures, such as Elon Musk, rapper Nicki Minaj, and actor Ryan Gosling.

Character.ai’s October 2022 beta launch logged hundreds of thousands of users in its first three weeks of testing, per The Washington Post. In March 2023, the company raised $150 million at a valuation of $1 billion, led by Andreessen Horowitz.

Google Play Store statistics show that, as of press time, the app had been downloaded more than 10 million times on Android devices alone.

In August, Google paid the startup $2.7 billion to re-hire Shazeer and De Freitas, bring over 20% of the startup’s staff, and acquire all of the models Character.ai had worked on so far.

After the team shake-up, the company’s new interim CEO, Dominic Perella, told The Financial Times on Wednesday that the firm would focus entirely on its chatbot services, abandoning its past effort to build a large language model.

Google did not respond to a request for comment from Business Insider.
