Hi all. This is a late addendum to my last post.

As I have found out, much like the Allies did with those river crossings in Operation Market Garden, stateful sets are not as trivial as they initially appear: most guides just tell you what their purpose is and how to get them running, which leaves the false impression that synchronized data replication across pods happens automagically (sic).

Well, it doesn’t.

Crossing that River. No Matter the Costs

That special type of deployment only gives you guarantees regarding the order of pod creation and deletion, the pods’ naming scheme, and which persistent volume each pod will be bound to. Anything else is up to the application logic. Nothing even stops you from violating the principle of using only the first pod for writing and the other ones for reading.
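As a rough illustration, these are the StatefulSet fields behind those three guarantees, reusing the names from the manifests in this post. It is a partial spec, not something to apply on its own, and `podManagementPolicy: OrderedReady` is already the default, spelled out here only for visibility:

```yaml
# Partial StatefulSet spec: only the fields behind the ordering, naming and volume guarantees
spec:
  serviceName: postgres-service      # stable names: postgres-state-0.postgres-service, postgres-state-1.postgres-service, ...
  podManagementPolicy: OrderedReady  # default: pods start 0, 1, ... one at a time; scaling down removes them in reverse order
  replicas: 2
  volumeClaimTemplates:              # pod N always re-binds to its own PVC, named postgres-db-postgres-state-N
    - metadata:
        name: postgres-db
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 8Gi
  # Nothing here copies data between those volumes: replication is the application's job
```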

When it comes to more niche applications like Conduit, I will probably have to code my own replication solution at some point, but for more widely used software like PostgreSQL there are, thankfully, solutions already available.

I came across articles by Bibin Wilson & Shishir Khandelwal and Albert Weng (we’ve seen him here before) detailing how to use a special variant of the database image to get replication working. Although they’re a bit outdated, judging by the Docker registry used, I’m pretty sure they’re based on Bitnami’s PostgreSQL High Availability Helm chart.

I don’t plan on covering Helm here, as I think it adds complexity on top of already quite complex K8s manifests. Sure, it might be useful for large-scale stuff, but let’s keep things simple here. I have combined knowledge from the articles with the updated charts in order to create a trimmed-down version of the required manifests (it would be good to add liveness and readiness probes, though):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-config
  labels:
    app: postgres
data:
  BITNAMI_DEBUG: "false" # Set to "true" for more debug information
  POSTGRESQL_VOLUME_DIR: /bitnami/postgresql
  PGDATA: /bitnami/postgresql/data
  POSTGRESQL_LOG_HOSTNAME: "true" # Set to "false" for less debug information
  POSTGRESQL_LOG_CONNECTIONS: "false" # Set to "true" for more debug information
  POSTGRESQL_CLIENT_MIN_MESSAGES: "error"
  POSTGRESQL_SHARED_PRELOAD_LIBRARIES: "pgaudit, repmgr" # Modules being used for replication
  REPMGR_LOG_LEVEL: "NOTICE"
  REPMGR_USERNAME: repmgr # Replication user
  REPMGR_DATABASE: repmgr # Replication information database
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-scripts-config
  labels:
    app: postgres
data:
  # Script for pod termination
  pre-stop.sh: |-
    #!/bin/bash
    set -o errexit
    set -o pipefail
    set -o nounset

    # Debug section
    exec 3>&1
    exec 4>&2

    # Process input parameters
    MIN_DELAY_AFTER_PG_STOP_SECONDS=$1

    # Load Libraries
    . /opt/bitnami/scripts/liblog.sh
    . /opt/bitnami/scripts/libpostgresql.sh
    . /opt/bitnami/scripts/librepmgr.sh

    # Load PostgreSQL & repmgr environment variables
    . /opt/bitnami/scripts/postgresql-env.sh

    # Auxiliary functions
    is_new_primary_ready() {
        return_value=1
        currenty_primary_node="$(repmgr_get_primary_node)"
        currenty_primary_host="$(echo $currenty_primary_node | awk '{print $1}')"

        info "$currenty_primary_host != $REPMGR_NODE_NETWORK_NAME"
        if [[ $(echo $currenty_primary_node | wc -w) -eq 2 ]] && [[ "$currenty_primary_host" != "$REPMGR_NODE_NETWORK_NAME" ]]; then
            info "New primary detected, leaving the cluster..."
            return_value=0
        else
            info "Waiting for a new primary to be available..."
        fi
        return $return_value
    }

    export MODULE="pre-stop-hook"

    if [[ "${BITNAMI_DEBUG}" == "true" ]]; then
        info "Bash debug is on"
    else
        info "Bash debug is off"
        exec 1>/dev/null
        exec 2>/dev/null
    fi

    postgresql_enable_nss_wrapper

    # Prepare env vars for managing roles
    readarray -t primary_node < <(repmgr_get_upstream_node)
    primary_host="${primary_node[0]}"

    # Stop postgresql for graceful exit.
    PG_STOP_TIME=$EPOCHSECONDS
    postgresql_stop

    if [[ -z "$primary_host" ]] || [[ "$primary_host" == "$REPMGR_NODE_NETWORK_NAME" ]]; then
        info "Primary node need to wait for a new primary node before leaving the cluster"
        retry_while is_new_primary_ready 10 5
    else
        info "Standby node doesn't need to wait for a new primary switchover. Leaving the cluster"
    fi

    # Make sure pre-stop hook waits at least 25 seconds after stop of PG to make sure PGPOOL detects node is down.
    # default terminationGracePeriodSeconds=30 seconds
    PG_STOP_DURATION=$(($EPOCHSECONDS - $PG_STOP_TIME))
    if (( $PG_STOP_DURATION < $MIN_DELAY_AFTER_PG_STOP_SECONDS )); then
        WAIT_TO_PG_POOL_TIME=$(($MIN_DELAY_AFTER_PG_STOP_SECONDS - $PG_STOP_DURATION))
        info "PG stopped including primary switchover in $PG_STOP_DURATION. Waiting additional $WAIT_TO_PG_POOL_TIME seconds for PG pool"
        sleep $WAIT_TO_PG_POOL_TIME
    fi
---
apiVersion: v1
kind: Secret
metadata:
  name: postgres-secret
data:
  POSTGRES_PASSWORD: cG9zdGdyZXM= # base64 of "postgres": password of the default user (postgres)
  REPMGR_PASSWORD: cmVwbWdy # base64 of "repmgr": password of the replication user
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres-state
spec:
  serviceName: postgres-service
  replicas: 2
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      securityContext: # Container is not run as root
        fsGroup: 1001
        runAsUser: 1001
        runAsGroup: 1001
      containers:
        - name: postgres
          lifecycle:
            preStop: # Routines to run before pod termination
              exec:
                command:
                  - /pre-stop.sh
                  - "25"
          image: docker.io/bitnami/postgresql-repmgr:16.2.0
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 5432
              name: postgres-port
          envFrom:
            - configMapRef:
                name: postgres-config
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-secret
                  key: POSTGRES_PASSWORD
            - name: REPMGR_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-secret
                  key: REPMGR_PASSWORD
            # Write the pod name (from metadata field) to an environment variable in order to automatically generate replication addresses
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            # Repmgr configuration
            - name: REPMGR_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: REPMGR_PARTNER_NODES # All pods being synchronized (has to reflect the number of replicas)
              value: postgres-state-0.postgres-service.$(REPMGR_NAMESPACE).svc.cluster.local,postgres-state-1.postgres-service.$(REPMGR_NAMESPACE).svc.cluster.local
            - name: REPMGR_PRIMARY_HOST # Pod with write access. Everybody else replicates it
              value: postgres-state-0.postgres-service.$(REPMGR_NAMESPACE).svc.cluster.local
            - name: REPMGR_NODE_NAME # Current pod name
              value: $(POD_NAME)
            - name: REPMGR_NODE_NETWORK_NAME
              value: $(POD_NAME).postgres-service.$(REPMGR_NAMESPACE).svc.cluster.local
          volumeMounts:
            - name: postgres-db
              mountPath: /bitnami/postgresql
            - name: postgres-scripts
              mountPath: /pre-stop.sh
              subPath: pre-stop.sh
            - name: empty-dir
              mountPath: /tmp
              subPath: tmp-dir
            - name: empty-dir
              mountPath: /opt/bitnami/postgresql/conf
              subPath: app-conf-dir
            - name: empty-dir
              mountPath: /opt/bitnami/postgresql/tmp
              subPath: app-tmp-dir
            - name: empty-dir
              mountPath: /opt/bitnami/repmgr/conf
              subPath: repmgr-conf-dir
            - name: empty-dir
              mountPath: /opt/bitnami/repmgr/tmp
              subPath: repmgr-tmp-dir
            - name: empty-dir
              mountPath: /opt/bitnami/repmgr/logs
              subPath: repmgr-logs-dir
      volumes:
        - name: postgres-scripts
          configMap:
            name: postgres-scripts-config
            defaultMode: 0755 # rwxr-xr-x: the non-root user can execute the script
        - name: empty-dir # Use a scratch directory for mounting unused but required paths
          emptyDir: {}
  volumeClaimTemplates: # Description of the volume claim created for each replica
    - metadata:
        name: postgres-db
      spec:
        storageClassName: nfs-small
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 8Gi
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-service
  labels:
    app: postgres
spec:
  type: ClusterIP # Default service type
  clusterIP: None # Headless: no service-wide virtual address
  selector:
    app: postgres
  ports:
    - protocol: TCP
      port: 5432
      targetPort: postgres-port
```
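Since the manifest above leaves probes out, here is a minimal sketch of what could be added to the `postgres-state` StatefulSet. It assumes the `pg_isready` client that ships with PostgreSQL is on the Bitnami image’s `PATH`, and the timings are illustrative, not tuned. It also raises `terminationGracePeriodSeconds`: the pre-stop hook waits at least 25 seconds, and on the primary `retry_while is_new_primary_ready 10 5` can keep retrying for roughly 50 seconds (assuming Bitnami’s helper takes command, retries and sleep seconds in that order), which the default 30-second grace period may cut short. One more suggestion of my own: with readiness probes in place, setting `publishNotReadyAddresses: true` on the headless `postgres-service` keeps each replica resolvable by its peers before it is marked ready.

```yaml
# Sketch: extra fields for the postgres-state StatefulSet (partial spec, timings are illustrative)
spec:
  template:
    spec:
      terminationGracePeriodSeconds: 90   # room for the pre-stop hook (up to ~50 s waiting for a new primary, plus PG stop time)
      containers:
        - name: postgres
          readinessProbe:                 # mark the pod ready only once PostgreSQL accepts connections
            exec:
              command: ["pg_isready", "-h", "127.0.0.1", "-p", "5432"]
            initialDelaySeconds: 5
            periodSeconds: 10
            timeoutSeconds: 5
          livenessProbe:                  # restart the container if PostgreSQL stops answering for ~1 minute
            exec:
              command: ["pg_isready", "-h", "127.0.0.1", "-p", "5432"]
            initialDelaySeconds: 30
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 6
# And on the headless postgres-service (suggestion):
# spec:
#   publishNotReadyAddresses: true
```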

As the Bitnami container runs as a non-root user for security reasons, requires a “postgres” administrator name for the database and uses a different directory structure, you won’t be able to mount the data from the original PostgreSQL deployment without messing around with some configuration. So, unless you absolutely need that data, just start from scratch by deleting the old volumes (maybe after taking a backup).
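If you do want that backup, one option is a one-off Job running `pg_dumpall` against the old deployment before its volumes are deleted. This is only a sketch: the host `old-postgres-service`, the secret `old-postgres-secret` with key `POSTGRES_PASSWORD`, the PVC `postgres-backup` and the Job name are hypothetical placeholders for whatever your previous setup used, and the official `postgres:16` image is assumed to be at least as new as the old server (`pg_dumpall` should not be older than the server it dumps).

```yaml
# Hypothetical one-off backup Job: adjust host, secret and PVC names to your previous deployment
apiVersion: batch/v1
kind: Job
metadata:
  name: postgres-backup-job                  # hypothetical name
spec:
  backoffLimit: 0                            # no automatic retries; check the logs instead
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: backup
          image: docker.io/library/postgres:16
          # Dump every database and role of the old server into a single SQL file
          command: ["pg_dumpall", "-h", "old-postgres-service", "-U", "postgres", "-f", "/backup/all.sql"]
          env:
            - name: PGPASSWORD               # picked up by libpq-based clients such as pg_dumpall
              valueFrom:
                secretKeyRef:
                  name: old-postgres-secret  # hypothetical
                  key: POSTGRES_PASSWORD
          volumeMounts:
            - name: backup
              mountPath: /backup
      volumes:
        - name: backup
          persistentVolumeClaim:
            claimName: postgres-backup       # hypothetical PVC that must already exist
```

The resulting SQL file could later be replayed with `psql` into the new cluster, keeping in mind the user and path differences mentioned above.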

Notice how our ClusterIP service is set not to have a shared address, making it a headless service: it only serves to expose the target port of each individual pod under its own stable DNS name, reachable via <pod name>.<service name>:<container port number>. We do that because our containers here are not meant to be accessed in a random or load-balanced manner.
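To see those per-pod names in action, a throwaway Pod can check each replica directly instead of going through a load-balanced address. This sketch assumes the official `postgres:16` image for its `pg_isready` client and that the Pod runs in the same namespace as the StatefulSet, so the short `<pod name>.<service name>` form resolves; the Pod name is made up.

```yaml
# Hypothetical throwaway Pod: checks each StatefulSet replica through its own DNS name
apiVersion: v1
kind: Pod
metadata:
  name: postgres-dns-check            # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: check
      image: docker.io/library/postgres:16
      command:
        - /bin/sh
        - -c
        - |
          for host in postgres-state-0.postgres-service postgres-state-1.postgres-service; do
            pg_isready -h "$host" -p 5432   # prints "<host>:5432 - accepting connections" when reachable
          done
```

Once it finishes, `kubectl logs postgres-dns-check -n choppa` should show one line per replica.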

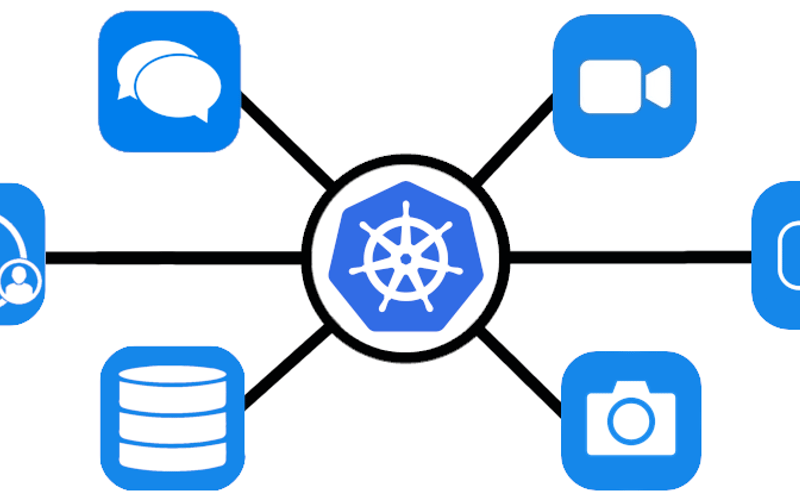

If you’re writing your own application, it’s easy to define different addresses for writing to and reading from a replicated database, respecting the role of each copy. But a lot of useful existing software assumes a single connection, and there’s no simple way around that. That’s why you need specific intermediaries or proxies, like Pgpool-II for PostgreSQL, which appear to applications as a single entity and redirect each query to the appropriate backend database depending on its contents:

(From the PostgreSQL-HA documentation)
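For an application you control, the split can be as simple as two connection settings. In this hypothetical sketch, `my-app-config`, `DATABASE_WRITE_URL`, `DATABASE_READ_URL` and the `myapp` user and database are made-up names that your own code would have to read; writes go to the pod set as `REPMGR_PRIMARY_HOST` and reads to the standby replicating it.

```yaml
# Hypothetical config for an application that handles the read/write split itself
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-app-config                 # hypothetical
data:
  # Writes: the pod configured as REPMGR_PRIMARY_HOST (password supplied separately)
  DATABASE_WRITE_URL: postgres://myapp@postgres-state-0.postgres-service:5432/myapp
  # Reads: the standby that replicates it
  DATABASE_READ_URL: postgres://myapp@postgres-state-1.postgres-service:5432/myapp
  # Caveat: if repmgr promotes postgres-state-1 after a failure, these fixed roles no longer hold
```

That caveat is precisely what a proxy handles for you: it tracks which backend is currently the primary.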

The simplified configuration for this extra middleware component is provided below:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-proxy-config
  labels:
    app: postgres-proxy
data:
  BITNAMI_DEBUG: "true"
  PGPOOL_BACKEND_NODES: 0:postgres-state-0.postgres-service:5432,1:postgres-state-1.postgres-service:5432
  PGPOOL_SR_CHECK_USER: repmgr
  PGPOOL_SR_CHECK_DATABASE: repmgr
  PGPOOL_POSTGRES_USERNAME: postgres
  PGPOOL_ADMIN_USERNAME: pgpool
  PGPOOL_AUTHENTICATION_METHOD: scram-sha-256
  PGPOOL_ENABLE_LOAD_BALANCING: "yes"
  PGPOOL_DISABLE_LOAD_BALANCE_ON_WRITE: "transaction"
  PGPOOL_ENABLE_LOG_CONNECTIONS: "no"
  PGPOOL_ENABLE_LOG_HOSTNAME: "yes"
  PGPOOL_NUM_INIT_CHILDREN: "25"
  PGPOOL_MAX_POOL: "8"
  PGPOOL_RESERVED_CONNECTIONS: "3"
  PGPOOL_HEALTH_CHECK_PSQL_TIMEOUT: "6"
---
apiVersion: v1
kind: Secret
metadata:
  name: postgres-proxy-secret
data:
  PGPOOL_ADMIN_PASSWORD: cGdwb29s # base64 of "pgpool"
---
apiVersion: v1
kind: Secret
metadata:
  name: postgres-users-secret
data:
  usernames: dXNlcjEsdXNlcjIsdXNlcjM= # base64 of "user1,user2,user3"
  passwords: cHN3ZDEscHN3ZDIscHN3ZDM= # base64 of "pswd1,pswd2,pswd3"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres-proxy-deploy
  labels:
    app: postgres-proxy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres-proxy
  template:
    metadata:
      labels:
        app: postgres-proxy
    spec:
      securityContext:
        fsGroup: 1001
        runAsGroup: 1001
        runAsUser: 1001
      containers:
        - name: postgres-proxy
          image: docker.io/bitnami/pgpool:4
          imagePullPolicy: "IfNotPresent"
          envFrom:
            - configMapRef:
                name: postgres-proxy-config
          env:
            - name: PGPOOL_POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-secret
                  key: POSTGRES_PASSWORD
            - name: PGPOOL_SR_CHECK_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-secret
                  key: REPMGR_PASSWORD
            - name: PGPOOL_ADMIN_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres-proxy-secret
                  key: PGPOOL_ADMIN_PASSWORD
            - name: PGPOOL_POSTGRES_CUSTOM_USERS
              valueFrom:
                secretKeyRef:
                  name: postgres-users-secret
                  key: usernames
            - name: PGPOOL_POSTGRES_CUSTOM_PASSWORDS
              valueFrom:
                secretKeyRef:
                  name: postgres-users-secret
                  key: passwords
          ports:
            - name: pg-proxy-port
              containerPort: 5432
          volumeMounts:
            - name: empty-dir
              mountPath: /tmp
              subPath: tmp-dir
            - name: empty-dir
              mountPath: /opt/bitnami/pgpool/etc
              subPath: app-etc-dir
            - name: empty-dir
              mountPath: /opt/bitnami/pgpool/conf
              subPath: app-conf-dir
            - name: empty-dir
              mountPath: /opt/bitnami/pgpool/tmp
              subPath: app-tmp-dir
            - name: empty-dir
              mountPath: /opt/bitnami/pgpool/logs
              subPath: app-logs-dir
      volumes:
        - name: empty-dir
          emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-proxy-service
  labels:
    app: postgres-proxy
spec:
  type: LoadBalancer # Let it be accessible inside the local network
  selector:
    app: postgres-proxy
  ports:
    - protocol: TCP
      port: 5432
      targetPort: pg-proxy-port
```

I bet most of it is self-explanatory by now. Just pay extra attention to the NUM_INIT_CHILDREN, MAX_POOL and RESERVED_CONNECTIONS variables and the relationship between them, as their default values may not be appropriate at all for your application and may result in too many refused connections (been there, done that). Moreover, users other than the administrator and the replication user are denied access unless you add them to the custom lists of usernames and passwords, in the format user1,user2,user3,... and pswd1,pswd2,pswd3,..., provided here as base64-encoded secrets.
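To reason about those three numbers, here is the arithmetic for the values used above, written as comments next to one possible PostgreSQL-side adjustment. It relies on my reading of the Pgpool-II documentation: clients beyond `num_init_children - reserved_connections` are refused, and `max_pool * num_init_children` should fit within each backend’s `max_connections` minus `superuser_reserved_connections` (twice that if query cancellation is needed). It also assumes PostgreSQL’s defaults of 100 and 3 for those two settings, and that the Bitnami image honors a `POSTGRESQL_MAX_CONNECTIONS` variable. Treat the numbers as a starting point, not a recommendation.

```yaml
# Connection budget for the values above (Pgpool-II rules of thumb, PostgreSQL defaults assumed):
#   clients Pgpool accepts at once  = NUM_INIT_CHILDREN - RESERVED_CONNECTIONS         = 25 - 3  = 22
#   worst-case connections per node = MAX_POOL * NUM_INIT_CHILDREN                     = 8 * 25  = 200
#   usable on each PostgreSQL node  = max_connections - superuser_reserved_connections = 100 - 3 = 97
# 200 > 97, so either lower PGPOOL_MAX_POOL (3 * 25 = 75 fits) or raise max_connections.
# On the client side, two bridges with max_open_conns: 20 each may ask for up to 40 clients (> 22).
#
# Fragment: extra key for the data section of the existing postgres-config ConfigMap
data:
  POSTGRESQL_MAX_CONNECTIONS: "210"   # assumed Bitnami variable; 210 - 3 = 207 >= 200
```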

With all that configured, we can finally (this time I really mean it) deploy a useful, stateful and replicated application:

```
$ kubectl get all -n choppa -l app=postgres
NAME                   READY   STATUS    RESTARTS   AGE
pod/postgres-state-0   1/1     Running   0          40h
pod/postgres-state-1   1/1     Running   0          40h

NAME                       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
service/postgres-service   ClusterIP   None         <none>        5432/TCP   40h

$ kubectl get all -n choppa -l app=postgres-proxy
NAME                                         READY   STATUS    RESTARTS   AGE
pod/postgres-proxy-deploy-74bbdd9b9d-j2tsn   1/1     Running   0          40h

NAME                             TYPE           CLUSTER-IP     EXTERNAL-IP                 PORT(S)          AGE
service/postgres-proxy-service   LoadBalancer   10.43.217.63   192.168.3.10,192.168.3.12   5432:30217/TCP   40h

NAME                                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/postgres-proxy-deploy   1/1     1            1           40h

NAME                                               DESIRED   CURRENT   READY   AGE
replicaset.apps/postgres-proxy-deploy-74bbdd9b9d   1         1         1       40h
```

(Some nice usage of component labels for selection)

You should be able to view your database with the postgres user the same way we did last time. After registering the necessary custom users with Pgpool, not only can I get my Telegram bridge running again (using the proxy address in the connection string), but I can also install the WhatsApp and Discord ones. Although they’re written in Go rather than Python, their configuration is very similar, with the relevant parts below:
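Hand-encoding those comma-separated lists in base64 gets tedious as users are added. Kubernetes Secrets also accept a `stringData` field with plain-text values, which the API server encodes into `data` on write. A sketch of `postgres-users-secret` written that way, with the two bridge users whose credentials appear in the connection strings below; any other user (such as the Telegram bridge’s) would be appended at the same position in both lists.

```yaml
# Same secret, written with stringData (plain text here; stored base64-encoded under data)
apiVersion: v1
kind: Secret
metadata:
  name: postgres-users-secret
stringData:
  usernames: "whatsapp,discord"   # order matters: the n-th password belongs to the n-th user
  passwords: "mautrix,mautrix"
```

The matching roles and databases (`matrix_whatsapp`, `matrix_discord`) still need to exist in PostgreSQL itself; Pgpool only needs to know the credentials.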

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: whatsapp-config
  labels:
    app: whatsapp
data:
  config.yaml: |
    # Homeserver details.
    homeserver:
      # The address that this appservice can use to connect to the homeserver.
      address: https://talk.choppa.xyz
      # The domain of the homeserver (also known as server_name, used for MXIDs, etc).
      domain: choppa.xyz
      # ...
    # Application service host/registration related details.
    # Changing these values requires regeneration of the registration.
    appservice:
      # The address that the homeserver can use to connect to this appservice.
      address: http://whatsapp-service:29318
      # The hostname and port where this appservice should listen.
      hostname: 0.0.0.0
      port: 29318
      # Database config.
      database:
        # The database type. "sqlite3-fk-wal" and "postgres" are supported.
        type: postgres
        # The database URI.
        # SQLite: A raw file path is supported, but `file:<path>?_txlock=immediate` is recommended.
        # https://github.com/mattn/go-sqlite3#connection-string
        # Postgres: Connection string. For example, postgres://user:password@host/database?sslmode=disable
        # To connect via Unix socket, use something like postgres:///dbname?host=/var/run/postgresql
        uri: postgres://whatsapp:mautrix@postgres-proxy-service/matrix_whatsapp?sslmode=disable
        # Maximum number of connections. Mostly relevant for Postgres.
        max_open_conns: 20
        max_idle_conns: 2
        # Maximum connection idle time and lifetime before they're closed. Disabled if null.
        # Parsed with https://pkg.go.dev/time#ParseDuration
        max_conn_idle_time: null
        max_conn_lifetime: null
      # The unique ID of this appservice.
      id: whatsapp
      # Appservice bot details.
      bot:
        # Username of the appservice bot.
        username: whatsappbot
        # Display name and avatar for bot. Set to "remove" to remove display name/avatar, leave empty
        # to leave display name/avatar as-is.
        displayname: WhatsApp bridge bot
        avatar: mxc://maunium.net/NeXNQarUbrlYBiPCpprYsRqr
      # Whether or not to receive ephemeral events via appservice transactions.
      # Requires MSC2409 support (i.e. Synapse 1.22+).
      ephemeral_events: true
      # Should incoming events be handled asynchronously?
      # This may be necessary for large public instances with lots of messages going through.
      # However, messages will not be guaranteed to be bridged in the same order they were sent in.
      async_transactions: false
      # Authentication tokens for AS <-> HS communication. Autogenerated; do not modify.
      as_token: <same AS token as in registration.yaml>
      hs_token: <same HS token as in registration.yaml>
    # ...
    # Config for things that are directly sent to WhatsApp.
    whatsapp:
      # Device name that's shown in the "WhatsApp Web" section in the mobile app.
      os_name: Mautrix-WhatsApp bridge
      # Browser name that determines the logo shown in the mobile app.
      # Must be "unknown" for a generic icon or a valid browser name if you want a specific icon.
      # List of valid browser names: https://github.com/tulir/whatsmeow/blob/efc632c008604016ddde63bfcfca8de4e5304da9/binary/proto/def.proto#L43-L64
      browser_name: unknown
      # Proxy to use for all WhatsApp connections.
      proxy: null
      # Alternative to proxy: an HTTP endpoint that returns the proxy URL to use for WhatsApp connections.
      get_proxy_url: null
      # Whether the proxy options should only apply to the login websocket and not to authenticated connections.
      proxy_only_login: false
    # Bridge config
    bridge:
      # ...
      # Settings for handling history sync payloads.
      history_sync:
        # Enable backfilling history sync payloads from WhatsApp?
        backfill: true
      # ...
      # Shared secret for authentication. If set to "generate", a random secret will be generated,
      # or if set to "disable", the provisioning API will be disabled.
      shared_secret: generate
      # Enable debug API at /debug with provisioning authentication.
      debug_endpoints: false
      # Permissions for using the bridge.
      # Permitted values:
      #   relay - Talk through the relaybot (if enabled), no access otherwise
      #   user - Access to use the bridge to chat with a WhatsApp account.
      #   admin - User level and some additional administration tools
      # Permitted keys:
      #   * - All Matrix users
      #   domain - All users on that homeserver
      #   mxid - Specific user
      permissions:
        "*": relay
        "@ancapepe:choppa.xyz": admin
        "@ancompepe:choppa.xyz": user
      # Settings for relay mode
      relay:
        # Whether relay mode should be allowed. If allowed, `!wa set-relay` can be used to turn any
        # authenticated user into a relaybot for that chat.
        enabled: false
        # Should only admins be allowed to set themselves as relay users?
        admin_only: true
      # ...
    # Logging config. See https://github.com/tulir/zeroconfig for details.
    logging:
      min_level: debug
      writers:
        - type: stdout
          format: pretty-colored
  registration.yaml: |
    id: whatsapp
    url: http://whatsapp-service:29318
    as_token: <same AS token as in config.yaml>
    hs_token: <same HS token as in config.yaml>
    sender_localpart: SH98XxA4xvgFtlbx1NxJm9VYW6q3BdYg
    rate_limited: false
    namespaces:
      users:
        - regex: ^@whatsappbot:choppa.xyz$
          exclusive: true
        - regex: ^@whatsapp_.*:choppa.xyz$
          exclusive: true
    de.sorunome.msc2409.push_ephemeral: true
    push_ephemeral: true
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whatsapp-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: whatsapp
  template:
    metadata:
      labels:
        app: whatsapp
    spec:
      containers:
        - name: whatsapp
          image: dock.mau.dev/mautrix/whatsapp:latest
          imagePullPolicy: "IfNotPresent"
          command: [ "/usr/bin/mautrix-whatsapp", "-c", "/data/config.yaml", "-r", "/data/registration.yaml", "--no-update" ]
          ports:
            - containerPort: 29318
              name: whatsapp-port
          volumeMounts:
            - name: whatsapp-volume
              mountPath: /data/config.yaml
              subPath: config.yaml
            - name: whatsapp-volume
              mountPath: /data/registration.yaml
              subPath: registration.yaml
      volumes:
        - name: whatsapp-volume
          configMap:
            name: whatsapp-config
---
apiVersion: v1
kind: Service
metadata:
  name: whatsapp-service
spec:
  publishNotReadyAddresses: true
  selector:
    app: whatsapp
  ports:
    - protocol: TCP
      port: 29318
      targetPort: whatsapp-port
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: discord-config
  labels:
    app: discord
data:
  config.yaml: |
    # Homeserver details.
    homeserver:
      # The address that this appservice can use to connect to the homeserver.
      address: https://talk.choppa.xyz
      # The domain of the homeserver (also known as server_name, used for MXIDs, etc).
      domain: choppa.xyz
      # What software is the homeserver running?
      # Standard Matrix homeservers like Synapse, Dendrite and Conduit should just use "standard" here.
      software: standard
      # The URL to push real-time bridge status to.
      # If set, the bridge will make POST requests to this URL whenever a user's discord connection state changes.
      # The bridge will use the appservice as_token to authorize requests.
      status_endpoint: null
      # Endpoint for reporting per-message status.
      message_send_checkpoint_endpoint: null
      # Does the homeserver support https://github.com/matrix-org/matrix-spec-proposals/pull/2246?
      async_media: false
      # Should the bridge use a websocket for connecting to the homeserver?
      # The server side is currently not documented anywhere and is only implemented by mautrix-wsproxy,
      # mautrix-asmux (deprecated), and hungryserv (proprietary).
      websocket: false
      # How often should the websocket be pinged? Pinging will be disabled if this is zero.
      ping_interval_seconds: 0
    # Application service host/registration related details.
    # Changing these values requires regeneration of the registration.
    appservice:
      # The address that the homeserver can use to connect to this appservice.
      address: http://discord-service:29334
      # The hostname and port where this appservice should listen.
      hostname: 0.0.0.0
      port: 29334
      # Database config.
      database:
        # The database type. "sqlite3-fk-wal" and "postgres" are supported.
        type: postgres
        # The database URI.
        # SQLite: A raw file path is supported, but `file:<path>?_txlock=immediate` is recommended.
        # https://github.com/mattn/go-sqlite3#connection-string
        # Postgres: Connection string. For example, postgres://user:password@host/database?sslmode=disable
        # To connect via Unix socket, use something like postgres:///dbname?host=/var/run/postgresql
        uri: postgres://discord:mautrix@postgres-proxy-service/matrix_discord?sslmode=disable
        # Maximum number of connections. Mostly relevant for Postgres.
        max_open_conns: 20
        max_idle_conns: 2
        # Maximum connection idle time and lifetime before they're closed. Disabled if null.
        # Parsed with https://pkg.go.dev/time#ParseDuration
        max_conn_idle_time: null
        max_conn_lifetime: null
      # The unique ID of this appservice.
      id: discord
      # Appservice bot details.
      bot:
        # Username of the appservice bot.
        username: discordbot
        # Display name and avatar for bot. Set to "remove" to remove display name/avatar, leave empty
        # to leave display name/avatar as-is.
        displayname: Discord bridge bot
        avatar: mxc://maunium.net/nIdEykemnwdisvHbpxflpDlC
      # Whether or not to receive ephemeral events via appservice transactions.
      # Requires MSC2409 support (i.e. Synapse 1.22+).
      ephemeral_events: true
      # Should incoming events be handled asynchronously?
      # This may be necessary for large public instances with lots of messages going through.
      # However, messages will not be guaranteed to be bridged in the same order they were sent in.
      async_transactions: false
      # Authentication tokens for AS <-> HS communication. Autogenerated; do not modify.
      as_token: <same AS token as in registration.yaml>
      hs_token: <same HS token as in registration.yaml>
    # Bridge config
    bridge:
      # ...
      # The prefix for commands. Only required in non-management rooms.
      command_prefix: '!discord'
      # Permissions for using the bridge.
      # Permitted values:
      #   relay - Talk through the relaybot (if enabled), no access otherwise
      #   user - Access to use the bridge to chat with a Discord account.
      #   admin - User level and some additional administration tools
      # Permitted keys:
      #   * - All Matrix users
      #   domain - All users on that homeserver
      #   mxid - Specific user
      permissions:
        "*": relay
        "@ancapepe:choppa.xyz": admin
        "@ancompepe:choppa.xyz": user
    # Logging config. See https://github.com/tulir/zeroconfig for details.
    logging:
      min_level: debug
      writers:
        - type: stdout
          format: pretty-colored
  registration.yaml: |
    id: discord
    url: http://discord-service:29334
    as_token: <same AS token as in config.yaml>
    hs_token: <same HS token as in config.yaml>
    sender_localpart: KYmI12PCMJuHvD9VZw1cUzMlV7nUezH2
    rate_limited: false
    namespaces:
      users:
        - regex: ^@discordbot:choppa.xyz$
          exclusive: true
        - regex: ^@discord_.*:choppa.xyz$
          exclusive: true
    de.sorunome.msc2409.push_ephemeral: true
    push_ephemeral: true
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: discord-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: discord
  template:
    metadata:
      labels:
        app: discord
    spec:
      containers:
        - name: discord
          image: dock.mau.dev/mautrix/discord:latest
          imagePullPolicy: "IfNotPresent"
          command: [ "/usr/bin/mautrix-discord", "-c", "/data/config.yaml", "-r", "/data/registration.yaml", "--no-update" ]
          ports:
            - containerPort: 29334
              name: discord-port
          volumeMounts:
            - name: discord-volume
              mountPath: /data/config.yaml
              subPath: config.yaml
            - name: discord-volume
              mountPath: /data/registration.yaml
              subPath: registration.yaml
      volumes:
        - name: discord-volume
          configMap:
            name: discord-config
---
apiVersion: v1
kind: Service
metadata:
  name: discord-service
spec:
  publishNotReadyAddresses: true
  selector:
    app: discord
  ports:
    - protocol: TCP
      port: 29334
      targetPort: discord-port
```

The procedure for logging into your account will still vary depending on the service being bridged. As always, consult the official documentation.

We can forget, for a while, about keeping multiple messaging windows open just to communicate with our peers. That river has been crossed!
