Industry 4.0 is the buzzword for the convergence of manufacturing and information technology in the drive toward digitalization. People, machines and systems communicate in near real time, helped by advances in IoT, to enable autonomous production.

One of the leading countries in digitalization has taken the leap into smart manufacturing using the latest technologies. This country has 2,700 engineering firms manufacturing essential goods in industries such as medical, aerospace and semiconductors.

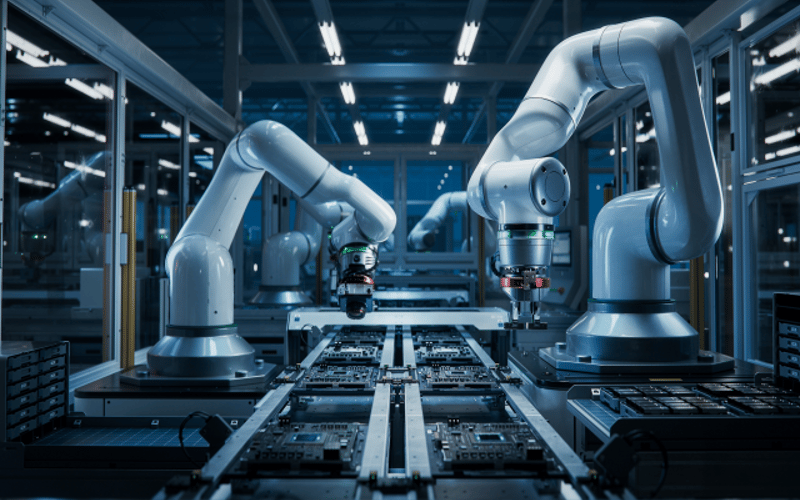

Disruption in digital technologies has paved the way for dark factories: fully automated, lights-out environments that are already a reality in Xiaomi's dark factory. We call this the factory of the future, and with advances in robotics and established software such as MOM (Manufacturing Operations Management), taking a complete lights-out factory from concept to implementation is very much possible.

Businesses where human intervention is dangerous can reap the benefits of complete automation. Imagine an oil rig where spills have to be measured in hard-to-reach places that are risky for people, or a site where the conditions being monitored are themselves a health hazard. In such cases we can use robots to measure, monitor and intervene. Let us go through a few use cases where the dark factory concept can be applied.

Inspection scenarios where robots can help include:

- Identification of defects, corrosion and oil spills on large rigs

- Damage

- Erosion

- Broken parts causing inefficiency on production lines

- Rust and other defects, depending on the product

Safety surveillance with robots covers the following scenarios:

- Intrusion detection and objects falling inside the dark factory

- Trespassing into danger zones

- Spills of harmful liquids and gases, detected through temperature differences

- Loose or damaged machine parts that can harm the entire factory unit

Below is Spot, the Boston Dynamics robot that can be used for these dark factory use cases:

Photo credit: Boston Dynamics

Let's see how we implement this on AWS and walk through the details of the solution architecture.

Spot from Boston Dynamics can capture local imagery with either its standard cameras or custom cameras of our choice.

A computer vision (CV) ML model runs inference on the captured images. Detections from inference are kept in the robot's local storage until Spot reaches an area with network connectivity. The implementation uses AWS IoT Greengrass 2.0 and Amazon SageMaker Edge Manager. The ML models are deployed at the edge, on Spot itself, because Spot travels into hard-to-reach places and has only intermittent network connectivity.
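The store-and-forward behaviour described above can be sketched in plain Python. This is not the AWS IoT Greengrass or SageMaker Edge Manager API: `DetectionBuffer`, `is_connected` and `publish` are hypothetical names standing in for the robot's connectivity check and whatever call actually sends a detection upstream.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class DetectionBuffer:
    """Hypothetical store-and-forward buffer for detections made on the robot.

    Detections are held locally while Spot is out of network range and are
    flushed, oldest first, once a connection is available.
    """

    def __init__(self, max_items: int = 10_000) -> None:
        # Bounded queue (assumption): when full, the oldest detection is dropped.
        self._queue: Deque[Dict] = deque(maxlen=max_items)

    def add(self, detection: Dict) -> None:
        self._queue.append(detection)

    def flush(self, is_connected: Callable[[], bool],
              publish: Callable[[Dict], None]) -> int:
        """Publish queued detections while connected; return how many were sent."""
        sent = 0
        while self._queue and is_connected():
            publish(self._queue[0])  # if publish raises, the detection stays queued
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


# Example: two detections made offline, delivered once connectivity returns.
buffer = DetectionBuffer()
buffer.add({"label": "corrosion", "score": 0.91})
buffer.add({"label": "oil_spill", "score": 0.87})

outbox: List[Dict] = []
sent_offline = buffer.flush(lambda: False, outbox.append)  # still out of range
sent_online = buffer.flush(lambda: True, outbox.append)    # connection restored
```

Removing an item only after `publish` returns means a failed send never loses a detection; the trade-off is that a crash between publishing and removing could deliver the same detection twice, so the receiving side should tolerate duplicates.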

Data from Spot's camera feeds is continuously streamed through the device gateway to AWS IoT Core. We use an edge gateway that connects to the cameras and forwards the device data to AWS IoT Core. The edge gateway runs the AWS IoT Greengrass runtime and AWS Lambda functions, which transfer the photos from the edge's storage to Amazon S3.
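The photo transfer on the edge gateway might look roughly like the following minimal sketch, assuming photos land as `.jpg` files in a local directory. The function name, the `raw/` key prefix and the injected `upload` callable are hypothetical; this is the core logic such a Lambda function could run, not a Greengrass handler signature.

```python
import tempfile
from pathlib import Path
from typing import Callable, List


def transfer_photos(local_dir: Path,
                    upload: Callable[[Path, str], None],
                    key_prefix: str = "raw/") -> List[str]:
    """Upload every .jpg in local_dir, deleting each file only after its upload succeeds.

    `upload(path, key)` is injected (hypothetical); in a deployment it could wrap
    boto3's s3_client.upload_file(str(path), bucket, key). Returns the uploaded keys.
    """
    uploaded: List[str] = []
    for photo in sorted(local_dir.glob("*.jpg")):
        key = key_prefix + photo.name
        upload(photo, key)  # an exception here leaves the file on disk for a later retry
        photo.unlink()      # reclaim edge storage once the photo is safely uploaded
        uploaded.append(key)
    return uploaded


# Example with a temporary directory and a fake uploader that just records keys.
with tempfile.TemporaryDirectory() as tmp:
    edge_dir = Path(tmp)
    (edge_dir / "img_0002.jpg").write_bytes(b"\xff\xd8")
    (edge_dir / "img_0001.jpg").write_bytes(b"\xff\xd8")
    (edge_dir / "notes.txt").write_text("not a photo")

    recorded: List[str] = []
    keys = transfer_photos(edge_dir, lambda path, key: recorded.append(key))
    remaining = sorted(p.name for p in edge_dir.iterdir())
```

Injecting the uploader keeps the file-handling logic testable without AWS credentials, and deleting only after a successful upload matches the intermittent connectivity the gateway has to cope with.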

The feeds and real-time photos are stored in time-series folders in Amazon S3, and each photo's location is stored with its metadata in Amazon DynamoDB. Admin dashboards built with Grafana let the administrator continuously monitor alerts as well as the predictions made on the images.
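As an illustration of the time-series layout and the metadata record, here is a minimal sketch under stated assumptions: the key layout, attribute names and key schema (`robot_id` as partition key, `captured_at` as sort key) are hypothetical, not taken from the original solution.

```python
from datetime import datetime, timezone
from decimal import Decimal
from typing import Dict


def s3_key_for(robot_id: str, captured_at: datetime, filename: str) -> str:
    """Build a time-partitioned S3 key (hypothetical layout), e.g.
    photos/robot=spot-01/year=2024/month=05/day=17/hour=09/img_0001.jpg"""
    t = captured_at.astimezone(timezone.utc)
    return (f"photos/robot={robot_id}/year={t:%Y}/month={t:%m}/"
            f"day={t:%d}/hour={t:%H}/{filename}")


def metadata_item(bucket: str, key: str, robot_id: str,
                  captured_at: datetime, label: str, score: float) -> Dict:
    """Build a DynamoDB item that points at the photo in S3 and carries its
    inference metadata. Attribute names are hypothetical."""
    return {
        "robot_id": robot_id,  # assumed partition key
        "captured_at": captured_at.astimezone(timezone.utc).isoformat(),  # assumed sort key
        "s3_uri": f"s3://{bucket}/{key}",
        "label": label,
        # boto3's DynamoDB resource layer rejects Python floats, so use Decimal.
        "score": Decimal(str(score)),
    }


ts = datetime(2024, 5, 17, 9, 30, tzinfo=timezone.utc)
key = s3_key_for("spot-01", ts, "img_0001.jpg")
item = metadata_item("example-bucket", key, "spot-01", ts, "corrosion", 0.91)
```

Hive-style `name=value` path segments are a layout that AWS Glue crawlers can register as partitions, and the item could be written with `put_item(Item=item)` on a boto3 DynamoDB `Table` resource.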

Historical data about the manufacturing site, comprising millions of photos, is also captured. Both the real-time photos stored in S3 and the historical images our customer provided in S3 are analyzed with AWS Glue. The curated data is stored in Amazon S3 for labeling, and an Amazon SageMaker labeling job is created using Amazon SageMaker Ground Truth. The labeled data is stored back in S3 and split into training (80%) and test (20%) sets. The CV algorithm, custom built for our scenarios, is trained on GPUs with the data stored in S3. Once training is complete, the model's accuracy is evaluated on the test data.
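The source does not say how the 80/20 split is made. One option, shown here as a minimal sketch, is a deterministic hash-based split over the S3 object keys; `split_train_test` and the example key names are hypothetical, and a seeded random shuffle would be an equally valid alternative.

```python
import hashlib
from typing import Iterable, List, Tuple


def split_train_test(keys: Iterable[str],
                     test_fraction: float = 0.2) -> Tuple[List[str], List[str]]:
    """Deterministically split labeled image keys into train and test sets.

    Each key is hashed to a number between 0 and 1, so an image always lands in
    the same split even when new images are added later. The resulting
    proportion is approximately, not exactly, test_fraction.
    """
    train: List[str] = []
    test: List[str] = []
    for key in keys:
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        position = int.from_bytes(digest[:8], "big") / 2**64
        (test if position < test_fraction else train).append(key)
    return train, test


image_keys = [f"labeled/img_{i:05d}.jpg" for i in range(10_000)]
train, test = split_train_test(image_keys)
```

Because assignment depends only on the key, re-running the pipeline after new labels arrive never moves an image that was already used for testing into the training set.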

The model's predictions are validated, and once the desired accuracy is achieved, the model is published to Spot for inference. Spot runs the model, and the inference results are sent to AWS IoT Core.
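The source does not define "desired accuracy". As a minimal sketch, assuming plain classification accuracy on the test set and a hypothetical threshold of 0.95, the publish gate could look like this; `accuracy` and `ready_to_publish` are illustrative names.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Fraction of test images whose predicted class matches the ground-truth label."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equally long")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def ready_to_publish(predictions: Sequence[str], labels: Sequence[str],
                     threshold: float = 0.95) -> bool:
    """Gate deployment to Spot on test-set accuracy (threshold is hypothetical)."""
    return accuracy(predictions, labels) >= threshold


labels = ["rust", "ok", "corrosion", "ok"]
predictions = ["rust", "ok", "ok", "ok"]
score = accuracy(predictions, labels)
```

For rare defect classes, overall accuracy can look high while the model misses most of the defects, so per-class recall may be a better gate in practice.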

