Hello

Time to forget about simple demo containers and struggle [not so much] with the configuration details of fully-featured applications. Today we not only put Kubernetes to production-level use, but also find out about some strengths and weaknesses of distributed computing

Notes on storage

Code nowadays, more than ever, works by consuming and producing huge amounts of data, which usually has (or “have”, since “data” is the plural of “datum”?) to be kept for later. It’s no surprise then that the capacity to store information is one of the most valuable commodities in IT, always at risk of being depleted due to poor optimization

In the containerized world, where application instances can be created and recreated dynamically, extra steps have to be taken for reusing disk space and avoiding duplication whenever possible. That’s why K8s adopts a layered approach: deployments don’t create Persistent Volumes, roughly equivalent to root folders, on their own, but set their storage requirements via Persistent Volume Claims

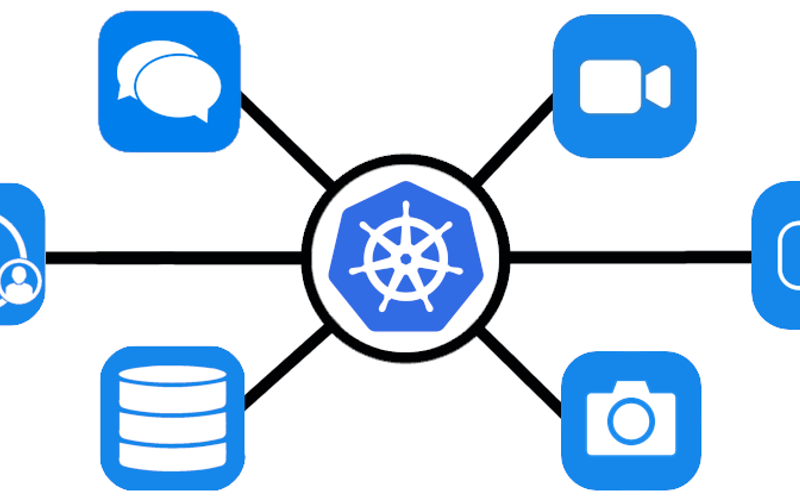

Long story short, administrators either create the necessary volumes manually or let the claims be managed by a Storage Class, which provides volumes on demand. Just as ingresses have multiple controller implementations, depending on the backend, storage classes allow for different providers, offering a variety of local and remote (including cloud) data resources:

(Screenshot from Nana’s tutorial)

Although local is an easy-to-use option, it quickly defeats the purpose of container orchestration: tying storage to a specific machine’s disk path prevents multiple pod replicas from being distributed among multiple nodes for load balancing. Besides, if we wish to avoid giving cloud providers (like Google or AWS) full control of our data, we’re left with [local] network storage solutions like NFS

If you remember the first article, my setup consists of one USB hard disk (meant for persistent volumes) connected to each node board. That’s not ideal for NFS, as decoupling storage from the K8s cluster, using a dedicated computer, would prevent node crashes from compromising data availability for the rest. Still, I can at least make the devices share disks with each other

In order to expose the external drives’ partitions to the network, begin by ensuring that they’re mounted during initialization by editing /etc/fstab:

# Finding the partition's UUID (assuming an ext4-formatted disk)
[ancapepe@ChoppaServer-1 ~]$ lsblk -f
NAME        FSTYPE FSVER LABEL      UUID                                 FSAVAIL FSUSE% MOUNTPOINTS
sda
└─sda1      ext4   1.0              69c7df98-0349-4c47-84ce-514a1699ccf1  869.2G     0%
mmcblk0
├─mmcblk0p1 vfat   FAT16 BOOT_MNJRO 42D4-5413                             394.8M    14% /boot
└─mmcblk0p2 ext4   1.0   ROOT_MNJRO 83982167-1add-4b6a-ad63-3479493a4510   44.4G    18% /var/lib/kubelet/pods/ae500dcf-b765-4f37-be8c-ee20fbb3ea1b/volume-subpaths/welcome-volume/welcome/0
                                                                                        /
zram0                                                                                   [SWAP]

# Creating the mount point for the external partition
[ancapepe@ChoppaServer-1 ~]$ sudo mkdir /storage

# Adding line with mount configuration to fstab
[ancapepe@ChoppaServer-1 ~]$ echo 'UUID=69c7df98-0349-4c47-84ce-514a1699ccf1 /storage ext4 defaults,noatime 0 2' | sudo tee -a /etc/fstab

# Check the contents
[ancapepe@ChoppaServer-1 ~]$ cat /etc/fstab
# Static information about the filesystems.
# See fstab(5) for details.
# <file system> <dir> <type> <options> <dump> <pass>
PARTUUID=42045b6f-01 /boot vfat defaults,noexec,nodev,showexec 0 0
PARTUUID=42045b6f-02 / ext4 defaults 0 1
UUID=69c7df98-0349-4c47-84ce-514a1699ccf1 /storage ext4 defaults,noatime 0 2
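Since a malformed fstab line can leave a board stuck at boot, it may be worth sanity-checking the new entry before rebooting. The snippet below is only a rough sketch: `check_fstab_line` is a helper name made up for this example, and it assumes a regular filesystem entry (swap lines, which use `none` as mount point, would be rejected).

```shell
#!/bin/sh
# check_fstab_line is a hypothetical helper (not part of any package).
# It succeeds when the given line has the six fstab(5) fields
# <file system> <dir> <type> <options> <dump> <pass>, an absolute
# mount point, and a <pass> value of 0, 1 or 2.
check_fstab_line() {
    printf '%s\n' "$1" | awk '
        NF != 6         { exit 1 }   # wrong number of fields
        $2 !~ /^\//     { exit 1 }   # mount point is not an absolute path
        $6 !~ /^[0-2]$/ { exit 1 }   # unexpected fsck pass value
    '
}

entry='UUID=69c7df98-0349-4c47-84ce-514a1699ccf1 /storage ext4 defaults,noatime 0 2'
if check_fstab_line "$entry"; then
    echo "entry looks well-formed"
else
    echo "entry is malformed" >&2
fi
```

This only looks at the shape of the line. Running `sudo mount -a` afterwards mounts everything listed in fstab that is not mounted yet, which catches a wrong UUID or filesystem type without a reboot.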

Reboot and check if you can access the contents of the /storage folder (or whatever path you’ve chosen). Proceed by setting up the NFS server:

# Install required packages (showing the ones for Manjaro Linux)
[ancapepe@ChoppaServer-1 ~]$ sudo pacman -S nfs-utils rpcbind
# ...
# Installation process ...
# ...

# Add disk sharing configurations (adapt to your local network address range)
[ancapepe@ChoppaServer-1 ~]$ echo '/storage 192.168.3.0/24(rw,sync,no_wdelay,no_root_squash,insecure)' | sudo tee -a /etc/exports

# Check the contents
[ancapepe@ChoppaServer-1 ~]$ cat /etc/exports
# /etc/exports - exports(5) - directories exported to NFS clients
#
# Example for NFSv3:
# /srv/home hostname1(rw,sync) hostname2(ro,sync)
# Example for NFSv4:
# /srv/nfs4 hostname1(rw,sync,fsid=0)
# /srv/nfs4/home hostname1(rw,sync,nohide)
# Using Kerberos and integrity checking:
# /srv/nfs4 *(rw,sync,sec=krb5i,fsid=0)
# /srv/nfs4/home *(rw,sync,sec=krb5i,nohide)
#
# Use `exportfs -arv` to reload.
/storage 192.168.3.0/24(rw,sync,no_wdelay,no_root_squash,insecure)

# Update exports based on configuration
[ancapepe@ChoppaServer-1 ~]$ sudo exportfs -arv

# Enable and start related initialization services
[ancapepe@ChoppaServer-1 ~]$ systemctl enable --now rpcbind nfs-server

# Check if shared folders are visible
[ancapepe@ChoppaServer-1 ~]$ showmount localhost -e
Export list for localhost:
/storage 192.168.3.0/24

(Remember that your mileage may vary)
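Seeing the export listed on the server itself doesn’t prove that other nodes can use it, so a quick check from a second machine may help. The following is a hedged sketch, not a required step: `check_nfs_export`, `run`, `DRY_RUN` and the `/mnt/nfs-check` mount point are all names invented for this example, and it assumes the client also has `nfs-utils` installed. By default it only prints the commands, so they can be reviewed first.

```shell
#!/bin/sh
# run: print the command (default) or execute it when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# check_nfs_export SERVER EXPORT_PATH
# Lists the exports, mounts the share on a temporary directory,
# writes and removes a file, then unmounts again.
check_nfs_export() {
    server=$1                  # e.g. 192.168.3.10
    export_path=$2             # e.g. /storage
    mount_dir=/mnt/nfs-check   # hypothetical temporary mount point
    run showmount -e "$server"
    run sudo mkdir -p "$mount_dir"
    run sudo mount -t nfs "$server:$export_path" "$mount_dir"
    run sudo touch "$mount_dir/nfs-write-test"
    run sudo rm "$mount_dir/nfs-write-test"
    run sudo umount "$mount_dir"
}

check_nfs_export 192.168.3.10 /storage
```

Calling it as `DRY_RUN=0 check_nfs_export 192.168.3.10 /storage` would actually run the commands. A failure at the `touch` step would point at the `rw` or `no_root_squash` export options rather than at network connectivity.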

Phew, and that’s just the first part of it! Not only do you have to repeat the same process for every machine whose storage you wish to share, but afterwards it’s necessary to install an NFS provisioner in the K8s cluster. Here I have used nfs-subdir-external-provisioner, not as it is, but with 2 manifest files modified to my liking:

- Modified deploy/rbac.yaml (Access permissions):

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-storage
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs-storage
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-storage
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-storage
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs-storage
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

(Here just set the namespace to your liking)

- Modified deploy/deployment.yaml (Storage classes):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-storage
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        # 1TB disk on Odroid N2+
        - name: nfs-client-provisioner-big
          image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-root-big
              mountPath: /persistentvolumes # CAN'T CHANGE THIS PATH
          env: # Environment variables
            - name: PROVISIONER_NAME
              value: nfs-provisioner-big
            - name: NFS_SERVER # Hostnames don't resolve in setting env vars
              value: 192.168.3.10 # Looking for a way to make it more flexible
            - name: NFS_PATH
              value: /storage
        # 500GB disk on Raspberry Pi 4B
        - name: nfs-client-provisioner-small
          image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-root-small
              mountPath: /persistentvolumes # CAN'T CHANGE THIS PATH
          env: # Environment variables
            - name: PROVISIONER_NAME
              value: nfs-provisioner-small
            - name: NFS_SERVER
              value: 192.168.3.11
            - name: NFS_PATH
              value: /storage
      volumes:
        - name: nfs-root-big
          nfs:
            server: 192.168.3.10
            path: /storage
        - name: nfs-root-small
          nfs:
            server: 192.168.3.11
            path: /storage
---
# 1TB disk on Odroid N2+
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-big
  namespace: choppa
provisioner: nfs-provisioner-big
parameters:
  archiveOnDelete: "false"
reclaimPolicy: Retain
---
# 500GB disk on Raspberry Pi 4B
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-small
  namespace: choppa
provisioner: nfs-provisioner-small
parameters:
  archiveOnDelete: "false"
reclaimPolicy: Retain

(Make sure that all namespace settings match the RBAC rules. Also notice that, the way the provisioner works, I had to create one storage class for each shared disk)

If everything goes according to plan, applying a test like the one below will make volumes appear to satisfy the claims, with a SUCCESS file written to each one:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim-big
spec:
  storageClassName: nfs-big
  accessModes:
    - ReadWriteMany # Concurrent access
  resources:
    requests:
      storage: 1Mi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim-small
spec:
  storageClassName: nfs-small
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
---
kind: Pod
apiVersion: v1
metadata:
  name: test-pod-big
spec:
  containers:
    - name: test-pod-big
      image: busybox:stable
      command: # Replace the default command for the image by this
        - "/bin/sh"
      args: # Custom command arguments
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: test-volume
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: test-volume
      persistentVolumeClaim:
        claimName: test-claim-big
---
kind: Pod
apiVersion: v1
metadata:
  name: test-pod-small
spec:
  containers:
    - name: test-pod-small
      image: busybox:stable
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: test-volume
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: test-volume
      persistentVolumeClaim:
        claimName: test-claim-small

(Thanks to Albert Weng for the very useful guide)
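To see whether the test actually worked, something along these lines could be run from a machine with cluster access. It is a sketch under assumptions: the manifests above are presumed saved as `test-claim.yaml` (a file name picked for this example), `verify_test_claims` is a made-up helper, and the claims live in the current default namespace since the manifests don’t set one.

```shell
#!/bin/sh
# Manifest file name is an assumption of this sketch; override with MANIFEST=...
MANIFEST=${MANIFEST:-test-claim.yaml}

verify_test_claims() {
    kubectl apply -f "$MANIFEST" || return 1
    # Each claim should report the Bound phase once its provisioner
    # has created a matching volume
    for claim in test-claim-big test-claim-small; do
        phase=$(kubectl get pvc "$claim" -o jsonpath='{.status.phase}')
        echo "$claim: $phase"
    done
    # The pods should finish as Completed after touching /mnt/SUCCESS
    kubectl get pods test-pod-big test-pod-small
}

# Only talk to the cluster when both kubectl and the manifest are available
if command -v kubectl >/dev/null 2>&1 && [ -f "$MANIFEST" ]; then
    verify_test_claims
else
    echo "kubectl or $MANIFEST not found; skipping"
fi
```

Binding can take a few seconds, so an empty or `Pending` phase right after applying is not necessarily a failure. The SUCCESS files themselves should show up in subdirectories that the provisioner creates under /storage on each NFS server.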

Your Very Own Messaging Service

Now that we have real storage at our disposal, some real apps would be nice. One of the first things that come to mind when I think about data I’d like to protect is the history of my private conversations, where most spontaneous interactions happen and most ideas get forgotten

If you’re an old-school person wishing to host your own messaging, I bet you’d go for IRC or XMPP. But I’m more of a late millennial, so it’s easier to get drawn to fancy stuff like Matrix, a protocol for federated communication

“And what’s federation?”, you may ask. Well, to this day, the best video about it I’ve seen is the one from Simply Explained. Even though it’s focused on Mastodon and the Fediverse (We’ll get there eventually), the same concepts apply:

Like any open protocol, the Matrix specification has a number of different implementations, most famously Synapse (Python) and Dendrite (Go). I have, however, grown particularly fond of Conduit, a lightweight Matrix server written in Rust. Here I’ll show the deployment configuration as recommended by its maintainer, Timo Kösters:

kind: PersistentVolumeClaim # Storage requirements component
apiVersion: v1
metadata:
  name: conduit-pv-claim
  namespace: choppa
  labels:
    app: conduit
spec:
  storageClassName: nfs-big # The used storage class (1TB drive)
  accessModes:
    - ReadWriteOnce # Mounted read-write by a single node at a time, as we don't want data corruption
  resources:
    requests:
      storage: 50Gi # Asking for a ~50 Gigabytes volume
---
apiVersion: v1
kind: ConfigMap # Read-only data component
metadata:
  name: conduit-config
  namespace: choppa
  labels:
    app: conduit
data:
  CONDUIT_CONFIG: '' # Leave empty to inform that we're not using a configuration file
  CONDUIT_SERVER_NAME: 'choppa.xyz' # The server name that will appear after registered usernames
  CONDUIT_DATABASE_PATH: '/var/lib/matrix-conduit/' # Database directory path. Uses the permanent volume
  CONDUIT_DATABASE_BACKEND: 'rocksdb' # Recommended for performance, sqlite alternative
  CONDUIT_ADDRESS: '0.0.0.0' # Listen on all IP addresses/Network interfaces
  CONDUIT_PORT: '6167' # Listening port for the process inside the container
  CONDUIT_MAX_CONCURRENT_REQUESTS: '200' # Network usage limiting options
  CONDUIT_MAX_REQUEST_SIZE: '20000000' # (in bytes, ~20 MB)
  CONDUIT_ALLOW_REGISTRATION: 'true' # Allow other people to register in the server (requires token)
  CONDUIT_ALLOW_FEDERATION: 'true' # Allow communication with other instances
  CONDUIT_ALLOW_CHECK_FOR_UPDATES: 'true'
  CONDUIT_TRUSTED_SERVERS: '["matrix.org"]'
  CONDUIT_ALLOW_WELL_KNOWN: 'true' # Generate "/.well-known/matrix/{client,server}" endpoints
  CONDUIT_WELL_KNOWN_CLIENT: 'https://talk.choppa.xyz' # return value for "/.well-known/matrix/client"
  CONDUIT_WELL_KNOWN_SERVER: 'talk.choppa.xyz:443' # return value for "/.well-known/matrix/server"
---
apiVersion: v1
kind: Secret # Sensitive data (Base64-encoded, which is not the same as encrypted)
metadata:
  name: conduit-secret
  namespace: choppa
data:
  CONDUIT_REGISTRATION_TOKEN: bXlwYXNzd29yZG9yYWNjZXNzdG9rZW4= # Registration token/password in base64 format
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: conduit-deploy
  namespace: choppa
spec:
  replicas: 1 # Keep it at 1 as the database has an access lock
  strategy:
    type: Recreate # Wait for replica to be terminated completely before creating a new one
  selector:
    matchLabels:
      app: conduit
  template:
    metadata:
      labels:
        app: conduit
    spec:
      containers:
        - name: conduit
          image: ancapepe/conduit:next # fork of matrixconduit/matrix-conduit:next
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 6167 # Has to match the configuration above
              name: conduit-port
          envFrom: # Take all data from a config file
            - configMapRef: # As environment variables
                name: conduit-config
          env: # Set environment variables one by one
            - name: CONDUIT_REGISTRATION_TOKEN
              valueFrom:
                secretKeyRef: # Take data from a secret
                  name: conduit-secret # Secret source
                  key: CONDUIT_REGISTRATION_TOKEN # Data entry key
          volumeMounts:
            - mountPath: /var/lib/matrix-conduit/ # Mount the referenced volume into this path (database)
              name: rocksdb
      volumes:
        - name: rocksdb # Label the volume for this deployment
          persistentVolumeClaim:
            claimName: conduit-pv-claim # Reference the volume created by the claim
---
apiVersion: v1
kind: Service
metadata:
  name: conduit-service
  namespace: choppa
spec:
  selector:
    app: conduit
  ports:
    - protocol: TCP
      port: 8448 # Using the default port for federation (not required)
      targetPort: conduit-port

One thing you should know is that the K8s architecture is optimized for stateless applications, which don’t store changing information within themselves and whose output depends solely on input from the user or auxiliary processes. Conduit, on the contrary, is tightly coupled with its high-performance database, RocksDB, and has stateful behavior. That’s why we need to take extra care and not replicate our process, in order to prevent data races when accessing storage

There’s actually a way to run multiple replicas of a stateful pod, which requires different component types to create one volume copy for each container and keep them all in sync. But let’s keep it “simple” for now. More on that in a future chapter

We introduce here a new component type, Secret, intended for sensitive information such as passwords and access tokens. It requires that all values under data are set in a Base64-encoded format, which only obfuscates them and is not encryption. The encoding could be achieved with:

$ echo -n mypasswordoraccesstoken | base64

bXlwYXNzd29yZG9yYWNjZXNzdG9rZW4=
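To make the “obfuscation, not protection” point concrete, here is a small round trip using the same placeholder token. It is just an illustration and assumes a `base64` tool that accepts `-d` for decoding (as the GNU coreutils and BusyBox versions do).

```shell
#!/bin/sh
# Same placeholder token as in the example above
token='mypasswordoraccesstoken'

# printf avoids the trailing newline, like `echo -n` does
encoded=$(printf '%s' "$token" | base64)
echo "encoded: $encoded"

# Anyone who can read the Secret manifest can reverse the encoding
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "decoded: $decoded"
```

In other words, a manifest containing the encoded token should be treated with the same care as one containing the plain-text token.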

You might also have noticed that I jumped the gun a bit and acquired a proper domain for myself (so that my Matrix server looks nice, of course). I’ve found one available at a promotional price on Dynadot, which now seems even more like a good decision, considering how easy it is to bend Cloudflare. Conveniently, the registrar also provides a DNS service, so… two for the price of one:

The CNAME record is basically an alias: the subdomain talk.choppa.xyz resolves to the same address as choppa.xyz, and the hostname just works as a hint for our reverse proxy from the previous chapter:

apiVersion: networking.k8s.io/v1
kind: Ingress # Component type
metadata:
  name: proxy # Component name
  namespace: choppa # You may add the default namespace for components as a parameter
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt
    kubernetes.io/ingress.class: traefik
status:
  loadBalancer: {}
spec:
  ingressClassName: traefik
  tls:
    - hosts:
        - choppa.xyz
        - talk.choppa.xyz
      secretName: certificate
  rules: # Routing rules
    - host: choppa.xyz # Expected domain name of request, including subdomain
      http: # For HTTP or HTTPS requests
        paths: # Behavior for different base paths
          - path: / # For all request paths
            pathType: Prefix
            backend:
              service:
                name: welcome-service # Redirect to this service
                port:
                  number: 8080 # Redirect to this internal service port
          - path: /.well-known/matrix/ # Paths for Conduit delegation
            pathType: ImplementationSpecific
            backend:
              service:
                name: conduit-service
                port:
                  number: 8448
          - path: /_matrix/
            pathType: Prefix
            backend:
              service:
                name: conduit-service
                port:
                  number: 8448
    - host: talk.choppa.xyz # Conduit server subdomain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: conduit-service
                port:
                  number: 8448

If your CONDUIT_SERVER_NAME differs from the [sub]domain where your server is hosted, other federated Matrix instances will look for the actual address in Well-known URIs. Normally you’d have to serve them manually, but Conduit has a convenient automatic delegation feature that helps you set it up with the CONDUIT_WELL_KNOWN_* env variables
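For reference, the Matrix specification defines what those two well-known documents contain: an `m.server` key for federation and an `m.homeserver.base_url` key for clients. The sketch below just assembles the bodies one would expect for the values configured above; Conduit’s real responses may differ in whitespace or carry extra fields, so take it as a guide for what to look for rather than an exact match.

```shell
#!/bin/sh
# Values copied from the CONDUIT_WELL_KNOWN_* entries in the ConfigMap
well_known_client='https://talk.choppa.xyz'
well_known_server='talk.choppa.xyz:443'

# Expected body of /.well-known/matrix/server (used by other homeservers)
server_json=$(printf '{"m.server":"%s"}' "$well_known_server")

# Expected body of /.well-known/matrix/client (used by clients such as Element)
client_json=$(printf '{"m.homeserver":{"base_url":"%s"}}' "$well_known_client")

echo "$server_json"
echo "$client_json"

# Against a live deployment, the real responses could be compared with:
#   curl -s https://choppa.xyz/.well-known/matrix/server
#   curl -s https://choppa.xyz/.well-known/matrix/client
```

Both requests go to the server name domain (choppa.xyz), not to the subdomain, which is why the ingress routes `/.well-known/matrix/` on that host to the Conduit service.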

Currently, at version 0.8, automatic delegation via env vars is not working properly. Timo has helped me debug it (Thanks!) in Conduit’s Matrix room and I have submitted a patch. Let’s hope it gets fixed for 0.9. Meanwhile, you may use the custom image I’m referencing in the configuration example

With ALL that said, let’s apply the deployment and hope for the best:

With your instance running, you can now use Matrix clients [that support registration tokens] like Element or Nheko to register your user, log in and have fun!

See you soon
