(Peshkova/Shutterstock)

As companies begin adopting AI, they’re taking a closer look at their data and ramping up data governance programs. That’s the good news. But upon closer inspection of their data, they’re finding that it’s not up to the task of creating trustworthy AI. That’s the bad news coming out of a new report from Precisely.

Precisely commissioned Drexel University’s LeBow College of Business to research and write its latest “2025 Outlook: Data Integrity Trends and Insights” report, which explores the state of data integrity and AI readiness.

Some of the report’s conclusions may seem counterintuitive at first. For instance, the study, which is based on a survey of 565 data and analytics leaders, found that 76% of organizations say data-driven decision-making is a leading goal for their data programs. However, 67% of survey respondents say they don’t completely trust the data their organization uses for decision-making.

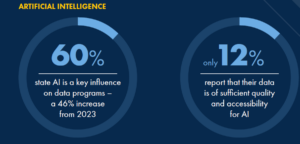

The report also found that 60% of organizations state that AI is a key influence on data programs, which is a massive increase from the 2023 report. But on the other hand, only 12% of survey respondents report that their data is AI-ready, indicating a disconnect between the data and AI aspirations of companies and the reality on the ground.

Tendü Yogurtçu, CTO of Precisely, says she was not surprised that trust in data is going down as investments in data are going up.

“I think these AI initiatives are really creating more focus. How are we going to leverage our data? How are we going to start? Which use case will have use of AI?” she tells Big Data Wire in an interview. “And it’s driving a little bit of more of look into data maturity and foundation and leading to that distrust.”

It’s disappointing that the increased focus on data management and governance is not translating into better quality, Yogurtçu adds.

“I think trust and transparency will be critical for increased AI adoption,” she says, “and lack of data governance is just the opposite of that trust and transparency.”

Other factors driving the lack of trust that organizations have in their data are the large investments that companies are making into data products and the push to modernize data platforms in the cloud, Yogurtçu says. Both of those initiatives require strong data foundations, which just don’t widely exist in the real world, she says.

The 67% of respondents who don’t completely trust the data they rely on for decisions is up from the 55% who lacked complete trust in their data in 2023, according to Yogurtçu.

It may seem as if we’re moving backward with regard to data quality and data trustworthiness over time. But another possibility, one that Yogurtçu agrees is probably more likely, is that data quality itself is staying relatively constant, and companies are simply becoming more aware of long-standing data quality issues because of the greater scrutiny that comes with better data management programs.

“We are seeing that AI is heavily impacting how they think about data management and how they think about data integrity overall, and I think that’s leading to how do I make sure that I have my data governance in place,” she says.

It’s clear that generative AI is driving much of the interest in improving data management. That raises an interesting question: What would companies think of their data quality if ChatGPT never happened? Yogurtçu says it has changed priorities.

“I think if ChatGPT didn’t happen, if it wasn’t in the picture, we wouldn’t have this sense of urgency for data governance,” she says. “I think this is now creating a sense of urgency.”

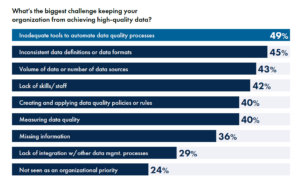

The main impediments to high data quality cited by the report include inadequate tools for automating data quality processes, inconsistent data definitions or data formats, the volume of data or number of data sources, a lack of skills or staff, the ability to create or apply data quality policies or rules, measuring data quality, missing information, lack of integration with other data management processes, and a lack of institutional priorities.

“One of the things that we see happening is due to a lot of data silos, they are not able to even have visibility to how that data travels, what happens between multiple transformations or journey through that data pipelines and the data integrity, quality, or observability journey,” Yogurtçu says. “They are not able to basically see what’s happening to their data.”

The report finds that a majority of organizations are planning to use data for AI. Data analysis is by far the top AI use case, followed by customer service and support, content writing, workflow copilots, fraud and threat detection, customer segmentation and personalization, and code writing.

The main barriers to achieving those AI goals include data quality, as previously stated, but also a lack of technical infrastructure, the level of employee skills in AI, availability of financial resources, organizational culture in support of AI, governance, and business strategy alignment, the report states.

The 32-page report goes into great detail on many aspects of data integrity and data trust, including how it’s changed over the years, as well as how US organizations compare to international firms.

For instance, 60% of survey respondents indicated data quality is a top priority for 2024, compared to 54% in 2023. Similarly, data governance is a top priority for 57% of organizations this year, compared to 41% last year. Data catalogs were reported as a priority by 25% of survey respondents this year, while data replication garnered an 18% share; both of those choices are new to the report in 2024. Data meshes and data fabrics were cited as “key influential trends” by 18% of the survey respondents in 2024, up five percentage points from last year.

Precisely’s report includes a section on location analytics, which is one of the company’s product specialties. The percentage of survey respondents indicating location analytics (or spatial analytics) as a priority went up from 13% in 2023 to 21% in 2024. Spatial analytics requires a keen attention to detail, Precisely says.

“Location data must be of the highest integrity to fully harness its potential for enhanced analytics, reporting, and more informed decision-making,” the company says in the report. “Achieving this integrity requires tools to clean up existing information and derive new location-based attributes through spatial analytics and data enrichment.”

Improving location intelligence is one of the fastest-growing priorities for improving the integrity of data, and thereby the quality of insights derived from that data, Yogurtçu says.

“For example, for an insurance company, let’s say that you want to do a risk assessment and price accordingly,” she says. “You need to have the location and property boundaries and a lot of attributes related to that location–How far it is from wildfire zone? How far it is from flood zone? How far it is from schools? Is it close to water supply?–a bunch of attributes that you would like to enrich on top of that location.”

This location data is becoming even more important for AI as companies begin adopting large action models (LAMs) alongside retrieval augmented generation (RAG) techniques to automate specific tasks, she says. But it doesn’t get very far without good data.

“Data quality remains one of the top challenges. And data governance adoption has risen,” Yogurtçu says. “And we are also seeing that increased investments for location insights and data enrichment.”

You can download Precisely’s report here.
