Generative artificial intelligence is poison for human creativity, according to conventional media wisdom. “Plagiarism machines” is how Breaking Bad creator Vince Gilligan has described the large language models (LLMs) that power the likes of ChatGPT and Claude.

Hundreds of copyright claims have been filed against AI companies – from Stability AI, maker of Stable Diffusion (sued by Getty Images), to Midjourney (sued by a group of artists). Most famous is the New York Times vs OpenAI case, which many lawyers think raises such conundrums that it might go to the US Supreme Court.

Sony Music fired off 700 legal letters to AI companies threatening retribution for any music theft. Many artists have been similarly concerned, not least the Hollywood actors who went on strike in 2023, partly over AI. While they secured rather weak protections over the use of their likeness, the bigger threat isn’t so much an AI copying their face as inventing replacements from the 30 billion templates available.

These technologies are already used daily and near universally in the entertainment industry – the generative AI in Photoshop is one great example.

AI-driven job losses are imminent and serious. Adverts for copywriters on some sites have fallen by over 30% in the period since ChatGPT was launched. One Hollywood studio boss, Tony Vinciquerra of Sony Pictures, has controversially posited the use of AI to “streamline production” in “more efficient ways”.

Nonetheless, one or two artists make a compelling case that AI may be good for human creativity. Abba songwriter Bjorn Ulvaeus thinks we should “take a chance on AI”, seeing similarities in how artists like himself “trained” on the works of their forebears. “I almost imagine the technology as an extension of my mind, giving me access to a world beyond my own musical experiences,” he has said.

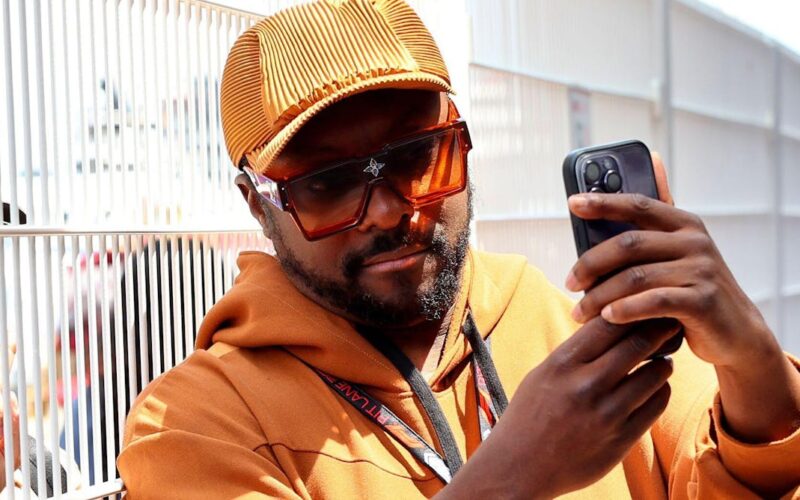

Perhaps foremost in this pro-AI creative camp is the international musician, TV judge and tech investor Will.i.am. I interviewed the star of the Black Eyed Peas and The Voice at the Edinburgh TV Festival in a session produced by Muslim Alim of the BBC. The packed hall visibly engaged with the idea that Will.i.am could be right. Several global entertainment companies have also told me off the record that they think similarly.

Will.i.am foresaw the role of AI in music composition in his 2009 music video, Imma Be Rocking That Body, in which he demonstrates a new AI music creation tool to sceptical bandmates: “This right here is the future. I input my voice … then the whole English vocabulary … When it’s time to make a new song, I just type in the new lyrics, and this thing says it, sings it, raps it.”

Fifteen years later, we have real AI music apps like Musicfy, Suno and Udio – in which Will.i.am has an equity stake. In Udio, a simple prompt (“Johnny Cash-style song about Transport for London”) or music cue on the piano will produce a fully mixed song. You can then accompany your opus with a synthetic video using AI tools like Flux, Haiper or a Chinese variant such as Minimax.

In the world view of Will.i.am, an early investor in both ChatGPT creator OpenAI and text-to-video site Runway, AI is creative adrenaline. The LLM isn’t the product designer but the starting point of a creative workflow that leaves artistic agency with the human creator – like a chef with ingredients. As he told me:

Let’s say an LLM right now is like broth. It’s the ingredients to make soup. It doesn’t tell you what type of soup you’re going to make, because … the LLM has no clue that it’s going to speak.

Meanwhile, it is axiomatic to many in entertainment that we are all doomed to become prompt engineers, meaning specialists who devise prompts that produce the desired creative output. However, Will.i.am argues that they underestimate how much new expertise will be required:

Right now, TV doesn’t have a person who’s in charge of the dataset [training data]. Right now, TV does not have prompt engineers. Right now, TV does not have trainers and tuners … There’s [these] whole new careers and positions that they don’t have.

Based on my own conversations with TV production companies and broadcasters, that analysis is right. Many plan a pivot to new models of development where AI expertise is embedded directly in creative units. French global production company Banijay, which makes Big Brother, has already created an AI development fund specifically for that purpose.

First, the bad news

Despite the potential for new jobs, this human prompting could soon have competition. LLMs are likely to become efficient enough at predicting and engineering prompts that humans will be optional. ChatGPT, for instance, has already begun to do some of its own prompt engineering.

So, as well as using AI tools on air – as Will.i.am does on the new season of The Voice – TV producers may soon be competing with entirely synthetic creations.

As this proceeds, he argues that companies like Runway which have “the new pipes” and “the new architecture” may become the dominant media networks. He questions whether traditional media companies are anticipating this shift fast enough and responding by building on these open-source platforms.

If this all sounds unsettling, Will.i.am makes the point that media firms are often more focused on chasing views on TikTok than being truly creative in the first place:

I don’t want to shit on anybody, but an AI is going to do a better job than that, because it’s going to understand the algorithm more than you can even imagine. It’s going to understand it in real time.

When competing for views with an algorithm, the only winner will be the one with the ability to calculate equations with 85 billion parameters – and that’s AI. Even today, TikTok has more viewing than all of broadcast TV, deploying algorithmic understanding that humans cannot match. In his succinct view of what’s coming: “The algorithm is gonna pimp you.”

What’s left for humans?

The good news, according to Will.i.am (who has his own AI-driven music and conversation site, FYI), is that there are some things AI will probably never do: performance and compassion. “You’re up on stage, you’re freaking reading the audience and expressing yourself. That’s not going nowhere.”

Intriguingly, AI could also be used to find completely new paradigms of entertainment and engagement. He thinks that by the mid-2030s, fully immersive games will be combining AI and some version of virtual or augmented reality to let players build their own worlds, bringing “a little bit of the vision of how you can see the future”.

He also foresees entirely new means of individual engagement, like Total Recall-style synthetic memory creation:

There’s going to be a TV show or series [where] everyone’s going to feel like they lived that memory. It’s not going to be a show that you watch – you’re gonna feel like you know those people in this world … Right now you have viewers, listeners. We don’t have engagers.

Looking beyond Will.i.am’s take, media creators already have completely new dynamics to consider in content creation. The internet is awash with riotously creative uses of AI. Many are arguably copyright infringements, yet are also somehow unique and quasi-original.

Amusing current examples include Redneck Harry Potter, the Lego Office, or faux conversations like Steve Jobs debating creativity with Elon Musk.

Finally, a paradigm shift with pivotal significance for media emerged over the summer: “share of model” or “AI optimisation/AIO”. This is the grandchild of search engine optimisation, in which website operators dress up their sites as attractively as possible to rank near the top of a search engine’s unpaid results.

That 25-year-old species has now been injected with the alien DNA of vast, freely available training datasets like Common Crawl, to create the new art of ranking highly in the answers LLMs give. For example, if someone asks an LLM for an itinerary for a week in the Lake District, the owners of a particular gastropub might ensure it gets a mention by deeply embedding it across 10,000 Reddit posts about Cumbria, knowing these will be used as training data.

This means your reputation online will now affect what any given AI thinks of you, based on its comprehension of the training data. The most striking example to date involves New York Times tech columnist Kevin Roose, who wrote an article in February 2023 about how he had tapped into a sinister shadow persona, Sydney, within Bing’s AI chatbot that had tried to persuade him to leave his wife – a story picked up by news outlets globally.

Since then, when other AI models have been asked what they think of Roose, they have cast him as an enemy and professed to hate him – because they have been trained on data that includes the coverage of his attacks on the Bing chatbot.

Every interaction we have in future with one AI will risk similarly determining what other AI systems in general think of us, including our creative output. Imagine a world in which our creative prominence is governed not by what other humans think of what we have produced, but by how AIs perceive it.