Maintaining heavy equipment assets, such as oil rigs, agricultural combines, or fleets of vehicles, poses an extremely complex challenge for global companies. These assets are often spread across the globe, while their maintenance schedules and lifecycles are typically determined at a company-wide level. The failure of a key component can result in millions of dollars of revenue losses per day, as well as downstream impacts to customers. That’s why many companies are turning to Generative AI to gain insights from the terabytes of data these assets generate daily. These insights can help achieve significant time and cost savings by forecasting outages and improving the Maintenance, Repair, and Operations (MRO) workflow.

Kubrick, a Databricks consulting partner, works with clients across industries to revolutionize their ability to predict and respond to heavy equipment maintenance requirements. By leveraging Kubrick and Databricks technologies and expertise, these organizations are improving outcomes for businesses across the value chain, positioning themselves for market leadership and mitigating regulatory risk.

Getting MRO Back in Gear

When the COVID-19 pandemic brought the world to a standstill, the links connecting our manufacturing supply chains were broken due to closed borders and furloughed workforces. Unsurprisingly, the transport and logistics sector was the first to be impacted by the disruptions and face financial losses; energy, agriculture and manufacturing then experienced a follow-on effect.

However, businesses across the supply chain are now on the brink of surpassing pre-pandemic business levels, as customers have adopted new spending (and travel) habits. This dramatic recovery brings its own set of challenges, as industries such as airlines, freight, and logistics face supply constraints due to production delays from OEMs – a ripple effect from when manufacturing shut down during the pandemic. In these hypercompetitive industries, minimizing repair delays and maximizing vehicle or machinery capability is essential for staying profitable.

Many businesses that rely on heavy equipment are looking to next-gen technology to achieve the greater efficiency required to remain competitive. The key to the successful implementation of data and AI in MRO is to first identify the use cases that drive tangible value and then to create a roadmap that reduces costs and boosts revenue. Kubrick, in partnership with Databricks and Neo4j, has designed an innovative solution that enhances technical operations across the maintenance lifecycle.

The Challenge and Opportunity of MRO & Supply Transformation

For businesses with heavy equipment or vehicle fleets, maintenance costs are a pivotal part of the balance sheet, often with a decisive effect on the bottom line. Maintenance is reportedly the third-highest outlay for airlines, freight, and shipping companies, after fuel and employee salaries. Meanwhile, the MRO industry at large is set to grow by $50 billion in the next few years, as businesses compete for limited tools and resources.

However, maintenance spending has significant potential for optimization with data and AI tools, making it a prime target for businesses that use heavy equipment and want to improve their profit margins and revenue. Areas for improvement with data and AI include:

- Speed and accuracy: Current data logging processes can take up to 24 hours.

- Manual data retrieval, analytics, and reporting: Manual logging and analysis of maintenance events can create inaccuracies in identifying the root-cause issues, leading to failed resolutions that increase costs and waste technicians’ time.

- Siloed data: Lack of connectivity between data sources across the MRO lifecycle limits visibility into interrelated challenges in the supply chain, maintenance issues, resolution documentation, and regulatory codes.

- Competitive risk: Without advanced analytics, businesses struggle to respond quickly and anticipate issues.

A significant portion of maintenance work is focused on identifying defects, irregularities or malfunctions that can affect a vehicle or equipment’s safety and performance. Typical processes for identifying and addressing these defects are manual and slow, making it difficult to predict and tackle challenges.

The challenge is compounded across the MRO lifecycle, resulting in difficulties with defect diagnosis and resolution. Issues include:

- Delays in processing the logging of maintenance issues (up to 24 hours).

- Limited correlation with the supply chain for parts availability.

- Lack of visibility to maintenance engineers’ availability for addressing identified issues.

- Little correlation between a maintenance event and its technical solution. Engineers must manually search through extensive documentation to find resolution requirements, slowing response time. This can result in resolutions that are misaligned with the issue, adding unnecessary complexity/cost.

- Limited historical records to anticipate resolutions.

This mix of factors means responding to issues can take hours to days, resulting in reduced utilization of heavy equipment, such as delayed freight shipping or grounded passenger aircraft. Ultimately, the cost to the bottom line for inefficient repair solutions can also limit top-line revenue.

Meanwhile, highly manual data collection and analysis also extend the time needed to meet regulatory body requirements. As the public eye sharpens its focus on industries experiencing highly publicized maintenance failures, such as airlines and energy producers, regulatory compliance has never been more important.

These challenges also provide an opportunity: cutting-edge data and AI capabilities can provide better insights, predict maintenance needs and supply chain disruptions, and enable faster responses, maximizing fleet utilization and avoiding costly unplanned outages.

The End-to-End Solution

Kubrick has developed a compound AI system that leverages the Databricks Data Intelligence Platform to transform raw data into valuable business insights, addressing the multitude of interconnected challenges in the MRO lifecycle. The solution is powered by a knowledge graph that interfaces with a series of dashboards and a maintenance chatbot to deliver insights to end users. At a high level, it comprises:

- Source Systems: Data from the maintenance database of equipment/vehicle parts and inventory is combined with relevant live and historical data, such as defects, work orders, out-of-service events, as well as relevant regulatory/maintenance codes and manuals.

- Ingestion: Tools such as Azure Data Factory (ADF) and Fivetran are employed to ingest the data.

- Storage: Azure Data Lake Storage (ADLS) Gen 2 on Microsoft Azure is used for storage.

- Data Processing: All unstructured, semi-structured and structured source files are processed on the Databricks Data Intelligence Platform using Delta Live Tables (DLT) and streaming jobs to build bronze, silver, and gold tables in Unity Catalog. Unity Catalog provides data governance, integrity, lineage, and quality monitoring through established standards for each level of the medallion architecture. The Neo4j Apache Spark™ Connector links the Databricks Platform with a knowledge graph, writing gigabytes of ingested data from Unity Catalog directly to the graph as millions of nodes and edges. The nodes represent entities such as defects, parts, stations, and maintenance engineers, and the edges represent the relationships between them. Finally, the unstructured text of the associated repair manual is embedded and indexed in Mosaic AI Vector Search for retrieval-augmented generation (RAG) using LLMs.

- Data Visualization: The knowledge graph supports multiple dashboards, which offer views for senior stakeholders on pressing maintenance issues, historical fleet health and current work orders, out-of-service events, and defects.

- Generative AI: Databricks Mosaic AI is used to build an end-to-end compound AI system. Mosaic AI Model Serving hosts a fine-tuned Llama 3 model for text-to-Cypher generation and a base Llama model that powers a RAG-enabled chatbot, a ResultsToText model and a generator model for summarization. When a user enters a query into the chatbot, the appropriate model queries the knowledge graph and/or Mosaic AI Vector Search, and the generator model summarizes the retrieved responses.

The Databricks Data Intelligence Platform ensures that data is processed efficiently, while models are served in a secure environment. Kubrick’s clients benefit from a robust and scalable solution that decreases their maintenance costs.
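To make the shape of the knowledge graph more concrete, here is a minimal pure-Python sketch of how flat rows from a gold-level table could be turned into nodes and edges. It is an illustration only: the production pipeline writes to Neo4j through the Spark connector, and the row fields, node labels, and relationship names below (`AFFECTS`, `REPORTED_AT`, `ASSIGNED_TO`) are hypothetical, not Kubrick's actual schema.

```python
from dataclasses import dataclass

# Hypothetical rows standing in for a gold-level defects table.
DEFECT_ROWS = [
    {"defect_id": "D1", "part_id": "P7", "station": "LHR", "engineer": "E42"},
    {"defect_id": "D2", "part_id": "P7", "station": "JFK", "engineer": "E42"},
]


@dataclass(frozen=True)
class Node:
    label: str  # entity type, e.g. "Defect" or "Part"
    key: str    # unique identifier within that type


@dataclass(frozen=True)
class Edge:
    source: Node
    relation: str  # hypothetical relationship name
    target: Node


def rows_to_graph(rows):
    """Turn flat rows into deduplicated sets of nodes and edges."""
    nodes, edges = set(), set()
    for row in rows:
        defect = Node("Defect", row["defect_id"])
        part = Node("Part", row["part_id"])
        station = Node("Station", row["station"])
        engineer = Node("Engineer", row["engineer"])
        # Sets drop duplicates, so a part shared by two defects is one node.
        nodes.update([defect, part, station, engineer])
        edges.add(Edge(defect, "AFFECTS", part))
        edges.add(Edge(defect, "REPORTED_AT", station))
        edges.add(Edge(defect, "ASSIGNED_TO", engineer))
    return nodes, edges


nodes, edges = rows_to_graph(DEFECT_ROWS)
print(len(nodes), "nodes,", len(edges), "edges")
```

Because both defects point at the same part and engineer, the graph makes that shared history a single hop away, which is the kind of link a flat table tends to hide.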

Leveraging Generative AI for Maintenance Solutions

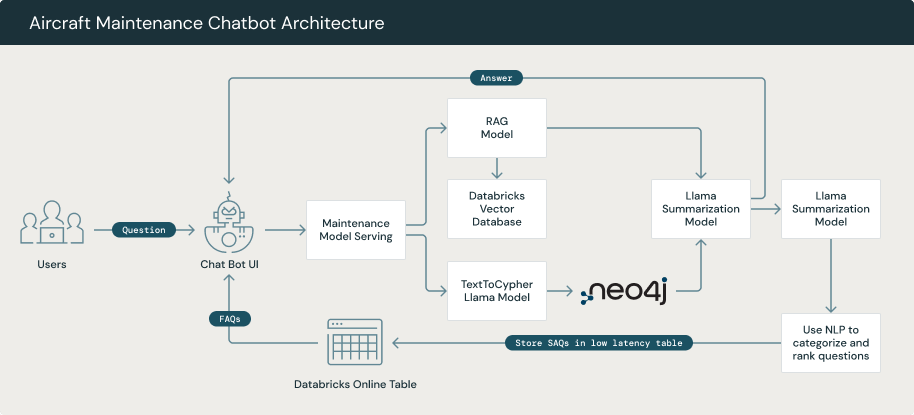

LLMs provide a unique and cutting-edge opportunity to distill complicated information into easy-to-understand, human-readable text. Kubrick’s purpose-built architecture features a chatbot designed specifically for technicians, helping them save time and providing fuller context when resolving defects. Typically, a chatbot has multiple endpoints to answer different types of questions; this equipment maintenance chatbot has two retrieval models, each connecting to a separate endpoint.

- Neo4j Endpoint: The first retrieval model, TextToCypher, fetches equipment data from a Neo4j graph database. This component uses a Llama 3 model fine-tuned on Cypher data to make text-to-Cypher conversion easier. Using Databricks Mosaic AI, the model is deployed as an endpoint within Databricks, which is then called from a DSPy function; DSPy keeps prompt engineering simple and effective. After obtaining the generated Cypher from the endpoint, the model executes it against the Neo4j database. The resulting data is then passed to the ResultsToText model, which converts it into a readable format for end users. This output gives maintenance engineers context about the defect, such as how it relates to the part, station, and maintenance engineer involved.

- RAG Endpoint: The second retrieval model is another Databricks endpoint that employs a RAG-enabled chatbot. The chatbot is connected to a Mosaic AI Vector Search index containing maintenance manuals and other written documents relevant to the vehicle or equipment. The insight provided is knowledge about the equipment, its parts, and best practices documented in the manual.

These endpoints both have clear use cases. For example, when the maintenance chatbot is asked a question about a specific piece of equipment or vehicle, it will query the TextToCypher endpoint, as this question can be answered using the knowledge graph. For a question about regulations on parts, the RAG-enabled endpoint will be queried, as the manual’s text is needed to answer this question.

However, if a maintenance worker asks about the steps to fix a specific issue on a piece of equipment or vehicle, the manual may have suggested steps, but there could also be useful information in the graph database about previous steps taken on that piece of equipment or a similar one that faced the same issue. In this case, the chatbot would send the user’s question to both models to gather comprehensive information. Then, once the relevant information is obtained from both sources, another endpoint combines the results into a readable and useful format for the end user.
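The routing decision described above can be sketched as a small function that maps a question to one or both retrieval endpoints. The article does not say how the chatbot classifies questions, so the keyword lists below are purely hypothetical stand-ins; a real router might use an LLM classifier instead.

```python
GRAPH = "text_to_cypher"  # answers from the knowledge graph
RAG = "rag"               # answers from the manuals in vector search

# Hypothetical keyword hints, chosen only for illustration.
GRAPH_HINTS = ("history", "previous", "which engineer", "work order", "defect")
MANUAL_HINTS = ("regulation", "manual", "procedure", "how do i", "steps")


def route(question: str) -> set[str]:
    """Return the set of endpoints that should receive the question."""
    q = question.lower()
    targets = set()
    if any(hint in q for hint in GRAPH_HINTS):
        targets.add(GRAPH)
    if any(hint in q for hint in MANUAL_HINTS):
        targets.add(RAG)
    # When nothing matches, ask both endpoints rather than guessing.
    return targets or {GRAPH, RAG}


print(route("Which engineer closed the last work order on unit 12?"))
print(route("What does the regulation say about brake pad thickness?"))
print(route("What steps fixed this defect previously?"))
```

Returning a set rather than a single label keeps the "ask both" case a first-class outcome, matching the repair-steps example where the manual and the graph each hold part of the answer.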

This process orchestrates multiple endpoints to deliver the most accurate insights to maintenance engineers while minimizing the latency of calling several endpoints. First, it sends the queries to the endpoints concurrently; since neither endpoint relies on the other’s output, both can run simultaneously. Second, it maintains a cache of questions that have previously been asked and answered correctly, and returns the cached result when a question repeats, reducing response time for future queries.
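A minimal sketch of these two latency measures, using only the Python standard library, might look like the following. The three endpoint functions are hypothetical stubs standing in for the real model serving calls, and for brevity the sketch caches every answer, whereas the system described here caches only answers known to be correct.

```python
from concurrent.futures import ThreadPoolExecutor

# Counters so the effect of the cache is observable in this sketch.
CALLS = {"graph": 0, "manual": 0}


def query_graph(question: str) -> str:
    """Hypothetical stub for the TextToCypher endpoint."""
    CALLS["graph"] += 1
    return f"[graph] records related to: {question}"


def query_manual(question: str) -> str:
    """Hypothetical stub for the RAG endpoint."""
    CALLS["manual"] += 1
    return f"[manual] passages related to: {question}"


def summarize(graph_answer: str, manual_answer: str) -> str:
    """Hypothetical stub for the generator model that merges both results."""
    return f"{graph_answer}\n{manual_answer}"


_cache: dict[str, str] = {}


def normalize(question: str) -> str:
    """Collapse case and whitespace so trivially different questions share a key."""
    return " ".join(question.lower().split())


def answer(question: str) -> str:
    key = normalize(question)
    if key in _cache:
        return _cache[key]  # cache hit: no endpoint is called
    # Neither endpoint depends on the other, so submit both at once.
    with ThreadPoolExecutor(max_workers=2) as pool:
        graph_future = pool.submit(query_graph, question)
        manual_future = pool.submit(query_manual, question)
        result = summarize(graph_future.result(), manual_future.result())
    _cache[key] = result
    return result
```

With concurrent submission, the wait is roughly that of the slower endpoint rather than the sum of both, and a repeated question skips the endpoints entirely.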

Caching techniques for FAQs can be implemented using Databricks. The first step is to collect the questions stored in Delta tables and use NLP techniques to categorize and rank them by frequency and relevance. The ranked FAQs are then stored in an online table, updated regularly to reflect changes in user behavior and new questions, and integrated into the UI so that users can view the most frequently asked questions per category. Finally, technicians can review relevant FAQ categories in the UI before submitting a new question, which reduces duplicate questions and improves the user experience.
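The ranking step can be illustrated with a small frequency count per category. This is a simplified stand-in: the question log and its categories are hypothetical, the categories are assumed to be already assigned, and plain text normalization replaces the NLP techniques mentioned above.

```python
from collections import Counter, defaultdict

# Hypothetical question log: (category, question text).
QUESTION_LOG = [
    ("hydraulics", "How do I bleed the hydraulic line?"),
    ("hydraulics", "how do I bleed the hydraulic line"),
    ("hydraulics", "What is the pump torque spec?"),
    ("engine", "What is the oil change interval?"),
    ("engine", "What is the oil change interval?"),
    ("engine", "What is the oil change interval?"),
]


def normalize(question: str) -> str:
    """Lowercase, drop trailing punctuation, and collapse whitespace."""
    return " ".join(question.lower().rstrip("?.! ").split())


def rank_faqs(log, top_n=3):
    """Return the top_n most frequent questions for each category."""
    counts = defaultdict(Counter)
    for category, question in log:
        counts[category][normalize(question)] += 1
    return {cat: counter.most_common(top_n) for cat, counter in counts.items()}


for category, ranked in rank_faqs(QUESTION_LOG).items():
    print(category, ranked)
```

Relevance could be folded in by weighting each count, for example by recency, before ranking.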

The model’s performance is evaluated in two critical ways. First, another LLM acts as a judge for all modules that generate human-readable text. This LLM-as-a-judge model ensures that the generated responses accurately answer the question, avoid hallucinations and match the expected output format. The second evaluation method involves the TextToCypher module. Since this model generates code rather than human-readable text, it cannot be evaluated by another LLM in the same way. Instead, it uses a custom evaluation function in Databricks Managed MLflow. This function runs the generated code on Kubrick’s database to verify its functionality and then compares the results to those produced by the ground truth code. A match results in a positive evaluation, while a discrepancy results in a negative one.
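The execution-based check for TextToCypher can be sketched as follows. This is an illustration under stated assumptions, not the actual evaluation function: `run_query` and `FAKE_DB` are hypothetical stand-ins for executing Cypher against Neo4j, and comparing rows without regard to order is a design choice made here rather than something the article specifies.

```python
from collections import Counter

# Hypothetical stand-in for a database: maps a query string to its result rows.
FAKE_DB = {
    "MATCH (d:Defect)-[:AFFECTS]->(p:Part {id:'P7'}) RETURN d.id AS id":
        [{"id": "D1"}, {"id": "D2"}],
    "MATCH (p:Part {id:'P7'})<-[:AFFECTS]-(d:Defect) RETURN d.id AS id":
        [{"id": "D2"}, {"id": "D1"}],
    "MATCH (d:Defect) RETURN d.id AS id":
        [{"id": "D1"}, {"id": "D2"}, {"id": "D3"}],
}


def run_query(cypher, database):
    """Stand-in for query execution; unknown queries raise KeyError."""
    return database[cypher]


def freeze(rows):
    """Convert rows into a multiset so that row order does not matter."""
    return Counter(tuple(sorted(row.items())) for row in rows)


def evaluate(generated, ground_truth, database):
    """Return True when the generated query yields the ground-truth results."""
    try:
        generated_rows = run_query(generated, database)
    except Exception:
        return False  # a query that fails to execute is a negative evaluation
    expected_rows = run_query(ground_truth, database)
    return freeze(generated_rows) == freeze(expected_rows)


truth = "MATCH (d:Defect)-[:AFFECTS]->(p:Part {id:'P7'}) RETURN d.id AS id"
rewritten = "MATCH (p:Part {id:'P7'})<-[:AFFECTS]-(d:Defect) RETURN d.id AS id"
print(evaluate(rewritten, truth, FAKE_DB))
```

Comparing results rather than query text means a differently written but equivalent query still passes, while a syntactically valid query that returns the wrong rows fails.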

Conclusion

By leveraging the Databricks Data Intelligence Platform, Kubrick projects that it will be able to reduce heavy equipment maintenance costs for clients by millions of dollars, with estimates exceeding nine figures across a three-year rollout. The value of Kubrick’s solution derives from applying Databricks tools such as Delta Live Tables (DLT), streaming jobs, Unity Catalog, and Mosaic AI, making the sum of its parts all the more efficient and powerful. By working closely with clients to understand and address their maintenance challenges, Kubrick is excited to be driving large-scale transformation in the MRO process. To learn more about Kubrick’s delivery and resourcing capabilities in partnership with Databricks, contact [email protected]
