
Ahead of the opening of Computex 2024 here in Taipei, the pre-show keynotes had two clear themes: one was the so-called rebirth of the PC, with a whole raft of AI copilot PCs due to come on the market within weeks; the second was the importance of Taiwan to this ecosystem across the whole value chain, from chip to consumer product.

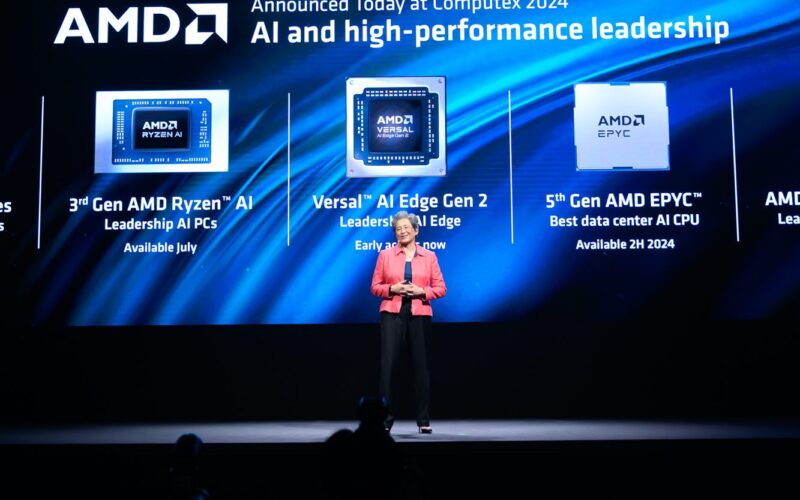

While Nvidia’s Jensen Huang took to the stage on Sunday night, AMD’s Lisa Su kicked off Monday’s keynotes here, followed by Arm’s Rene Haas and Qualcomm’s Cristiano Amon. The guests the keynote speakers brought on stage, a large number of them PC manufacturers appearing either live or via pre-recorded video, were there to announce their products or show off early prototypes of their AI copilot PCs.


AMD’s Su said this was just the beginning of a decade of development of AI computing. Like her rival, Jensen Huang, she announced a yearly cadence of new products and outlined a roadmap to 2025 and beyond.

Su detailed AMD’s next-generation Zen 5 CPU core, which she said was built from the ground up for leadership performance and energy efficiency, spanning everything from supercomputers and the cloud to PCs. AMD also unveiled the AMD XDNA 2 NPU core architecture, which delivers 50 TOPS of AI processing performance and up to 2× projected power efficiency for generative AI workloads compared with the prior generation.

Performance efficiency was a key theme in all the talks. Su said the AMD XDNA 2 architecture-based NPU is the industry’s first and only NPU supporting the advanced Block FP16 data type, which delivers greater accuracy than the lower-precision data types used by competing NPUs without sacrificing performance.

Arm’s Haas talked about 100 billion Arm-based devices being ready for AI by the end of 2025, supported by its Arm KleidiAI software for AI workloads. KleidiAI is a set of compute kernels for developers of AI frameworks that works across a wide range of devices and supports key Arm architectural features such as NEON, SVE2 and SME2. It integrates with popular AI frameworks, such as PyTorch, TensorFlow and MediaPipe, with a view to accelerating the performance of key models including Meta Llama 3 and Phi-3.
