(amiak/Shutterstock)

The data fabric has emerged as an enterprise data management pattern for companies that struggle to provide large teams of users with access to well-managed, integrated, and secured data. Now scientists working at universities and national laboratories are also adopting a data fabric via something called the National Science Data Fabric.

The National Science Data Fabric (NSDF) is a pilot project funded by the National Science Foundation to provide a data fabric that connects research institutions around the country and the world. It was spearheaded two years ago by five researchers: Valerio Pascucci (University of Utah), Michela Taufer (University of Tennessee, Knoxville), Alex Szalay (Johns Hopkins University), John Allison (University of Michigan, Ann Arbor), and Frank Wuerthwein (San Diego Supercomputer Center).

“We came together as a group of scientists and computer scientists, understanding that there is a need for a fabric for you scientists,” Taufer said during a recorded webinar earlier this year.

The idea behind the NSDF is to introduce “a novel trans-disciplinary approach for integrated data delivery and access to shared storage, networking, computing, and educational resources that will democratize data-driven scientific discovery,” according to the NSDF website. “The NSDF vision is to establish a globally connected infrastructure in which scientific investigation is unhindered by the limitations of extreme data.”

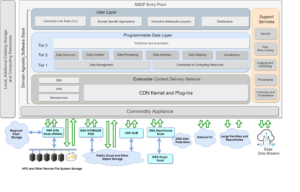

The NSDF provides “a shared, modular, containerized data delivery environment” that “fill[s] the missing middle in our current computational infrastructure.” NSDF images show a single domain-agnostic stack, delivered via an appliance, that blends core data fabric capabilities with connectors to a variety of data storage, compute, and networking resources across participating sites.

The NSDF pilot provides entry to the stack via several storage repositories, including government file systems, regional Ceph stores, Open Science Grid (OSG) StashCache and Origin nodes, Open Storage Network (OSN) storage pods, National Research Platform (NRP) FIONAs, cloud object stores, and edge data streams, according to the NSDF website.

The NSDF stack itself is broken up into several components, including:

- A user layer, consisting of command line tools, domain specific applications, interactive notebooks (like Jupyter), and dashboards;

- A three-tier programmable data layer consisting of data management and computing connections; data discovery, data curation, data processing, data analytics, data mapping, and visualization tools; and workflows and automation;

- An extensible content delivery network consisting of a CDN kernel and plug-ins, exposed via an SDK, APIs, and microservices;

- And support services that deliver core data fabric capabilities, such as a data catalog, security, lineage tracking, provenance, and containers and orchestration.

With the NSDF enabled via this appliance, participating users can tap into local storage and applications, according to the NSDF website. Data is shared via Internet2, the high-speed network that connects various government and university sites with a 100Gbps backbone, with some sites upgraded to a terabit backbone.
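Link speed sets a floor on how quickly large datasets can move between sites. The following is a back-of-the-envelope sketch, not anything published by the NSDF: it assumes an ideal, uncontended link with no protocol overhead, and the two link rates and the one-petabyte payload are illustrative inputs only.

```python
def ideal_transfer_seconds(size_bytes: float, link_bits_per_second: float) -> float:
    """Lower bound on transfer time: payload bits divided by the link rate."""
    if link_bits_per_second <= 0:
        raise ValueError("link rate must be positive")
    return size_bytes * 8 / link_bits_per_second


PETABYTE = 10**15  # decimal petabyte, in bytes (illustrative payload size)

# Illustrative link rates in bits per second; real throughput will be lower.
for label, rate in [("100 Gbps", 100e9), ("1 Tbps", 1e12)]:
    hours = ideal_transfer_seconds(PETABYTE, rate) / 3600
    print(f"1 PB over {label}: at least {hours:.1f} hours")
```

Even under these ideal assumptions, a petabyte takes the better part of a day at the lower rate, which is one reason a fabric tries to avoid moving whole datasets when a subset will do.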

DoubleCloud, a National Science Data Democratization Consortium (NSDDC), is hosting an NSDF Catalog, where users can discover and gain access to petabytes of indexed scientific data. About 65 research institutions have listed their data in the DoubleCloud data catalog, including AWS OpenData, Arizona State University (ASU), University of Virginia, University of the West Indies (UWI), and others.

“Our service indexes scientific data at a fine-granularity at the file or object level to inform data distribution strategies and to improve the experience for users from the consumer perspective, with the goal of allowing end-to-end dataflow optimizations,” DoubleCloud says on the NSDF website.
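To make the idea of file- or object-level indexing concrete, here is a minimal in-memory sketch. It is not the NSDF Catalog's API or data model: the `CatalogEntry` and `ObjectCatalog` classes, their field names, and the sample records are all hypothetical, chosen only to show how per-object records can support both discovery and per-site size summaries.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """One indexed object. All field names here are hypothetical."""
    site: str        # institution or repository hosting the object
    key: str         # object name or file path
    size_bytes: int  # object size


class ObjectCatalog:
    """Toy catalog that indexes data at the level of individual objects."""

    def __init__(self) -> None:
        self._entries: list[CatalogEntry] = []

    def add(self, entry: CatalogEntry) -> None:
        self._entries.append(entry)

    def search(self, term: str) -> list[CatalogEntry]:
        """Case-insensitive substring match on the object key."""
        needle = term.lower()
        return [e for e in self._entries if needle in e.key.lower()]

    def bytes_per_site(self) -> dict[str, int]:
        """Total indexed bytes per site, a possible input to placement decisions."""
        totals: dict[str, int] = defaultdict(int)
        for e in self._entries:
            totals[e.site] += e.size_bytes
        return dict(totals)


# Made-up sample records, for illustration only.
catalog = ObjectCatalog()
catalog.add(CatalogEntry("site-a", "climate/run01/temperature.nc", 4_000))
catalog.add(CatalogEntry("site-a", "climate/run01/pressure.nc", 6_000))
catalog.add(CatalogEntry("site-b", "imaging/scan42.tif", 9_000))

print([e.key for e in catalog.search("climate")])
print(catalog.bytes_per_site())
```

Because each record describes a single object rather than a whole dataset, a query can return just the files a user needs, and the per-site totals give a rough picture of where the data sits.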

Since it launched, the NSDF has expanded to a variety of sites and systems, including Jetstream at the University of Arizona, Indiana University, and the Texas Advanced Computing Center (TACC) at the University of Texas, Austin; Stampede2 at TACC; IBM Cloud sites in Dallas, Texas, and Ashburn, Virginia; Chameleon at the University of Chicago and TACC; CloudLab at the University of Utah, the University of Wisconsin-Madison, and Clemson University; the Center for High Performance Computing at the University of Utah; CloudBank in various AWS regions; the OSG; the Open Storage Network at various institutions; and CyVerse.

The NSDF pilot is currently supporting several research projects, including the IceCube neutrino observatory, which observes deep space from Antarctica; the XENONnT dark matter detector at the Gran Sasso Underground Laboratory in Italy; and the Cornell High Energy Synchrotron Source (CHESS) at Cornell University, among other projects.

You can find more information on the NSDF at nationalsciencedatafabric.org/.
