Databricks Workflows is the cornerstone of the Databricks Data Intelligence Platform, serving as the orchestration engine that powers critical data and AI workloads for thousands of organizations worldwide. Recognizing this, Databricks continues to invest in advancing Workflows to ensure it meets the evolving needs of modern data engineering and AI projects.

This past summer, we held our biggest Data + AI Summit yet, where we unveiled several new features and enhancements to Databricks Workflows. These updates include new data-driven triggers, AI-assisted workflow creation, and enhanced SQL integration, all aimed at improving reliability, scalability, and ease of use. We also introduced infrastructure-as-code tools like PyDABs and Terraform for automated management, and announced the general availability of serverless compute for workflows. Looking ahead, 2024 will bring further advancements like expanded control flow options, advanced triggering mechanisms, and the evolution of Workflows into LakeFlow Jobs, part of the new unified LakeFlow solution.

In this blog, we’ll revisit these announcements, explore what’s next for Workflows, and guide you on how to start leveraging these capabilities today.

The Latest Improvements to Databricks Workflows

The past year has been transformative for Databricks Workflows, with over 70 new features introduced to elevate your orchestration capabilities. Below are some of the key highlights:

Data-driven triggers: Precision when you need it

- Table and file arrival triggers: Traditional time-based scheduling cannot guarantee data freshness without also causing unnecessary runs. Our data-driven triggers start your jobs precisely when new data becomes available. We check whether tables have been updated (in preview) or new files have arrived (generally available), and only then spin up compute and run your workloads. Jobs therefore consume resources only when necessary, optimizing cost, performance, and data freshness. For file arrival triggers specifically, we’ve also eliminated previous limitations on the number of files Workflows can monitor.

- Periodic triggers: Periodic triggers allow you to schedule jobs to run at regular intervals, such as weekly or daily, without having to worry about cron schedules.
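As a rough illustration of the triggers described above, here is a minimal sketch of the `trigger` block a job definition might carry. The field names follow our reading of the Jobs API and should be checked against the current API reference; the helper functions, the job name, the storage URL, and the table name are hypothetical.

```python
import json

def file_arrival_trigger(url, min_time_between_triggers_seconds=60):
    """Build a trigger block that fires when new files land under `url`."""
    return {
        "pause_status": "UNPAUSED",
        "file_arrival": {
            "url": url,
            "min_time_between_triggers_seconds": min_time_between_triggers_seconds,
        },
    }

def table_update_trigger(table_names, condition="ANY_UPDATED"):
    """Build a trigger block that fires when the listed tables are updated."""
    return {
        "pause_status": "UNPAUSED",
        "table_update": {
            "table_names": list(table_names),
            "condition": condition,
        },
    }

def periodic_trigger(interval, unit="DAYS"):
    """Build a trigger block that fires every `interval` units, no cron needed."""
    if unit not in {"HOURS", "DAYS", "WEEKS"}:
        raise ValueError(f"unsupported unit: {unit}")
    if interval < 1:
        raise ValueError("interval must be at least 1")
    return {
        "pause_status": "UNPAUSED",
        "periodic": {"interval": interval, "unit": unit},
    }

# Hypothetical job settings: the name and the storage location are placeholders.
job_settings = {
    "name": "orders-ingest",
    "trigger": file_arrival_trigger("s3://example-bucket/landing/orders/"),
}

if __name__ == "__main__":
    print(json.dumps(job_settings, indent=2))
    print(json.dumps(table_update_trigger(["main.sales.orders"]), indent=2))
    print(json.dumps(periodic_trigger(1, "WEEKS"), indent=2))
```

A dictionary like `job_settings` could be sent as the body of a job create or update request, or expressed in the equivalent infrastructure-as-code configuration.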

AI-assisted workflow creation: Intelligence at every step

- AI-powered cron syntax generation: Scheduling jobs can be daunting, especially when it involves complex cron syntax. The Databricks Assistant now simplifies this process by suggesting the correct cron syntax based on plain-language input, making scheduling accessible to users at all levels.

- Integrated AI assistant for debugging: Databricks Assistant can now be used directly within Workflows (in preview). It provides online help when errors occur during job execution. If you encounter issues like a failed notebook or an incorrectly set up task, Databricks Assistant will offer specific, actionable advice to help you quickly identify and fix the problem.
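For context on the cron syntax mentioned above: job schedules use Quartz cron expressions, which have a leading seconds field and use `?` for "no specific value" in either the day-of-month or the day-of-week position. The sketch below builds two common expressions by hand. The helper names are hypothetical, and the `schedule` field names follow our reading of the Jobs API.

```python
def daily_quartz_cron(hour, minute=0):
    """Quartz expression for 'every day at hour:minute'.

    Field order: seconds minutes hours day-of-month month day-of-week.
    """
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    return f"0 {minute} {hour} * * ?"

def weekly_quartz_cron(day_of_week, hour, minute=0):
    """Quartz expression for 'every week on day_of_week at hour:minute'."""
    days = {"SUN", "MON", "TUE", "WED", "THU", "FRI", "SAT"}
    if day_of_week not in days:
        raise ValueError(f"day_of_week must be one of {sorted(days)}")
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    return f"0 {minute} {hour} ? * {day_of_week}"

def schedule_block(expression, timezone_id="UTC"):
    """Wrap a Quartz expression in a job schedule block (assumed field names)."""
    return {
        "quartz_cron_expression": expression,
        "timezone_id": timezone_id,
        "pause_status": "UNPAUSED",
    }

if __name__ == "__main__":
    print(schedule_block(daily_quartz_cron(6, 30)))
    print(schedule_block(weekly_quartz_cron("MON", 9), "Europe/Amsterdam"))
```

Expressions like these are what the Assistant would be expected to produce from a prompt such as "every weekday morning"; building one manually is mostly useful for reviewing or templating schedules.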

Workflow Management at Scale

- 1,000 tasks per job: As data workflows grow more complex, the need for orchestration that can scale becomes critical. Databricks Workflows now supports up to 1,000 tasks within a single job, enabling the orchestration of even the most intricate data pipelines.

- Filter by favorite job and tags: To streamline workflow management, users can now filter their jobs by favorites and by the tags applied to those jobs. This makes it easy to quickly locate the jobs you need, for example your team’s jobs tagged “Financial analysts”.

- Easier selection of task values: The UI now features enhanced auto-completion for task values, making it easier to pass information between tasks without manual input errors.

- Descriptions: Descriptions allow for better documentation of workflows, ensuring that teams can quickly understand and debug jobs.

- Improved cluster defaults: We’ve improved the defaults for job clusters to increase compatibility and reduce costs when going from interactive development to scheduled execution.
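To make the task values mentioned above more concrete, here is a minimal sketch of how a value set in one task can be referenced by a downstream task. It assumes the `{{tasks.<task_key>.values.<key>}}` dynamic reference format. The task names, the notebook path, and the helper function are hypothetical.

```python
def task_value_reference(task_key, value_key):
    """Return the dynamic reference string for a value set by an upstream task."""
    if not task_key or not value_key:
        raise ValueError("task_key and value_key must be non-empty")
    return "{{tasks." + task_key + ".values." + value_key + "}}"

# In the upstream notebook task (here called "ingest"), the value would be set
# with the Databricks utility, which is only available inside Databricks:
#
#     dbutils.jobs.taskValues.set(key="row_count", value=1234)
#
# The downstream task then receives it as a parameter via the reference string.
downstream_task = {
    "task_key": "report",
    "depends_on": [{"task_key": "ingest"}],
    "notebook_task": {
        "notebook_path": "/Workspace/Shared/report",  # hypothetical path
        "base_parameters": {
            "row_count": task_value_reference("ingest", "row_count"),
        },
    },
}

if __name__ == "__main__":
    print(downstream_task["notebook_task"]["base_parameters"]["row_count"])
```

The auto-completion in the UI produces the same kind of reference, which avoids typos in the task or key name.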

Operational Efficiency: Optimize for performance and cost

- Cost and performance optimization: The new timeline view within Workflows and query insights provide detailed information about the performance of your jobs, allowing you to identify bottlenecks and optimize your Workflows for both speed and cost-effectiveness.

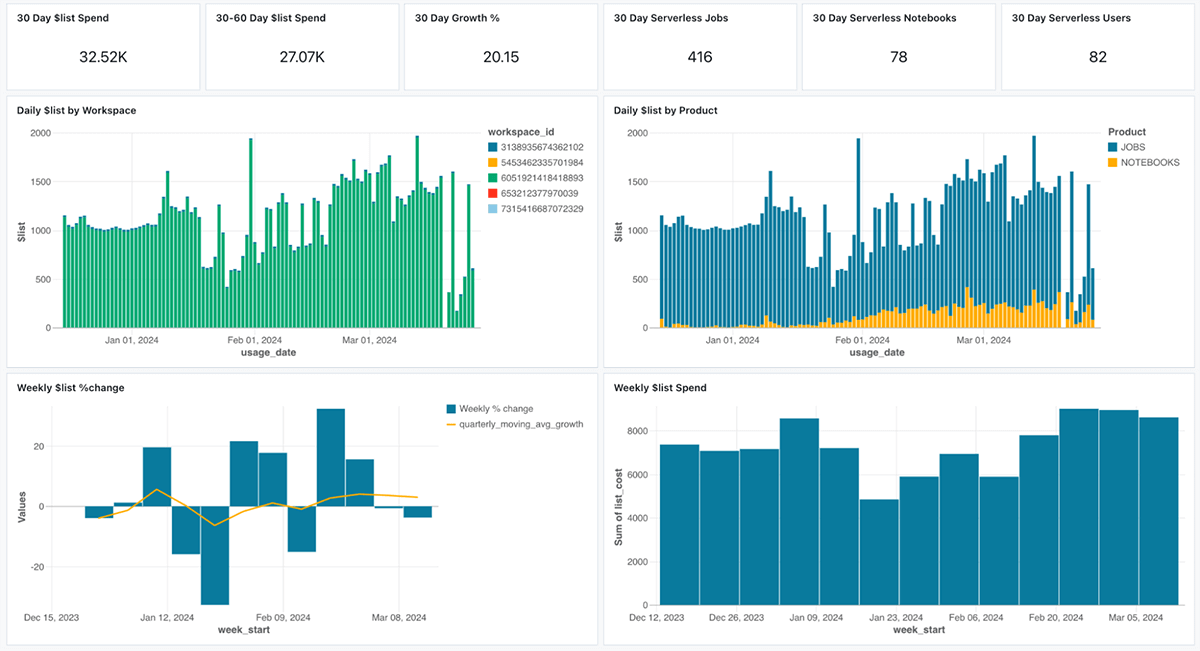

- Cost tracking: Understanding the cost implications of your workflows is crucial for managing budgets and optimizing resource utilization. With the introduction of system tables for Workflows, you can now track the costs associated with each job over time, analyze trends, and identify opportunities for cost savings. We’ve also built dashboards on top of system tables that you can import into your workspace and easily customize. They can help you answer questions such as “Which jobs cost the most last month?” or “Which team is projected to exceed their budget?” You can also set up budgets and alerts based on this cost data.
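A question like “Which jobs cost the most last month?” can be approximated with a query over the billing system tables. The sketch below only builds the SQL text. It assumes the `system.billing.usage` and `system.billing.list_prices` tables with the column names shown, which should be verified against the current system tables documentation. The helper name is hypothetical, and the result is list-price cost, not your negotiated cost.

```python
def job_cost_query(days=30, limit=10):
    """Build a SQL query ranking jobs by estimated list-price cost."""
    if days <= 0 or limit <= 0:
        raise ValueError("days and limit must be positive")
    return f"""
SELECT
  u.usage_metadata.job_id AS job_id,
  SUM(u.usage_quantity * p.pricing.default) AS list_cost
FROM system.billing.usage AS u
JOIN system.billing.list_prices AS p
  ON u.sku_name = p.sku_name
 AND u.cloud = p.cloud
 AND u.usage_start_time >= p.price_start_time
 AND (p.price_end_time IS NULL OR u.usage_start_time < p.price_end_time)
WHERE u.usage_metadata.job_id IS NOT NULL
  AND u.usage_date >= date_sub(current_date(), {int(days)})
GROUP BY u.usage_metadata.job_id
ORDER BY list_cost DESC
LIMIT {int(limit)}
""".strip()

if __name__ == "__main__":
    print(job_cost_query(days=30, limit=10))
```

In a Databricks notebook, the returned string could be passed to `spark.sql(...)` or pasted into the SQL editor. The importable dashboards cover the same ground without writing any SQL.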

Enhanced SQL Integration: More Power to SQL Users

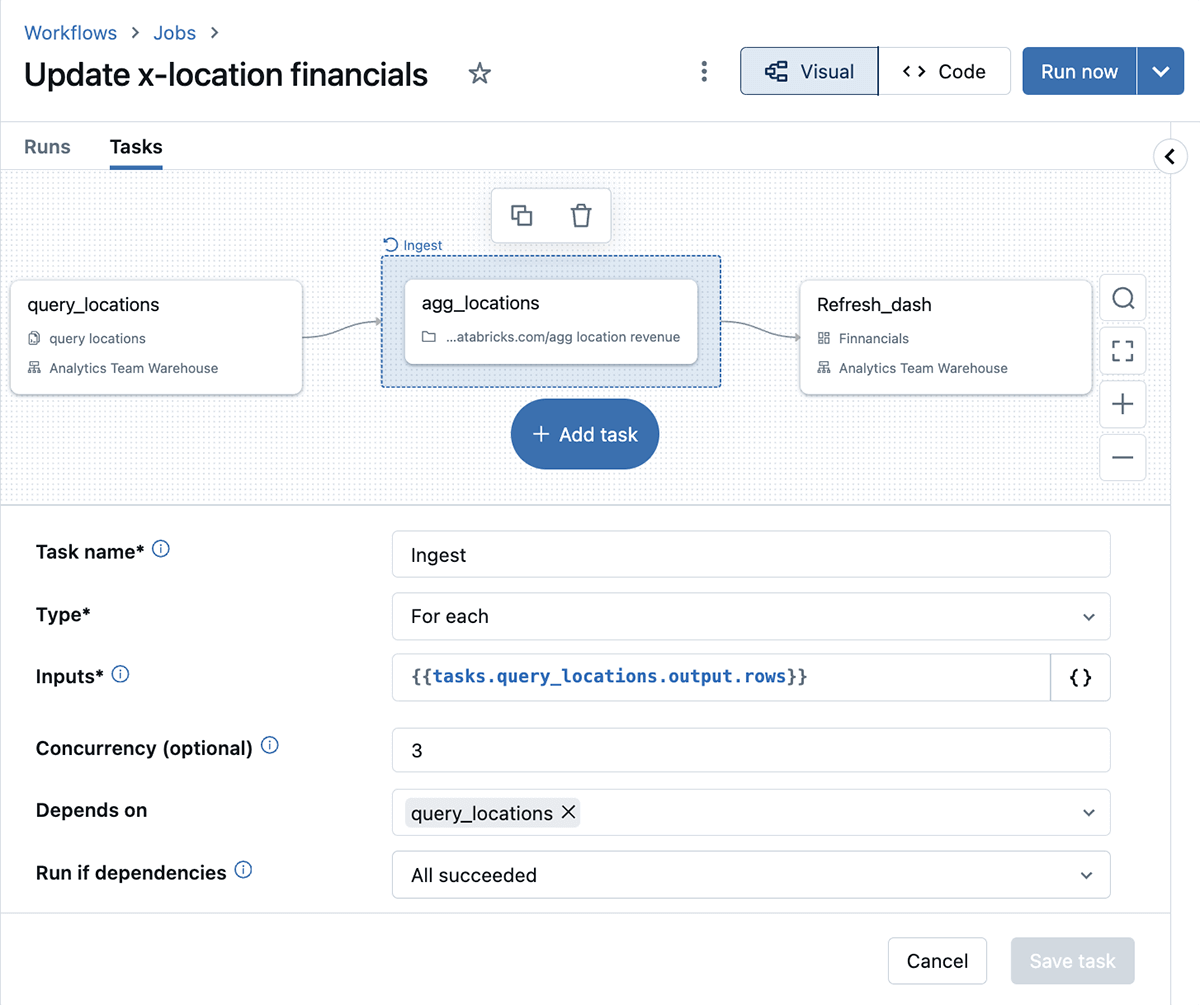

- Task values in SQL: SQL practitioners can now leverage the results of one SQL task in subsequent tasks. This feature enables dynamic and adaptive workflows, where the output of one query can directly influence the logic of the next, streamlining complex data transformations.

- Multi-SQL statement support: By supporting multiple SQL statements within a single task, Databricks Workflows offers greater flexibility in constructing SQL-driven pipelines. This integration allows for more sophisticated data processing without the need to switch contexts or tools.

Serverless compute for Workflows, DLT, Notebooks

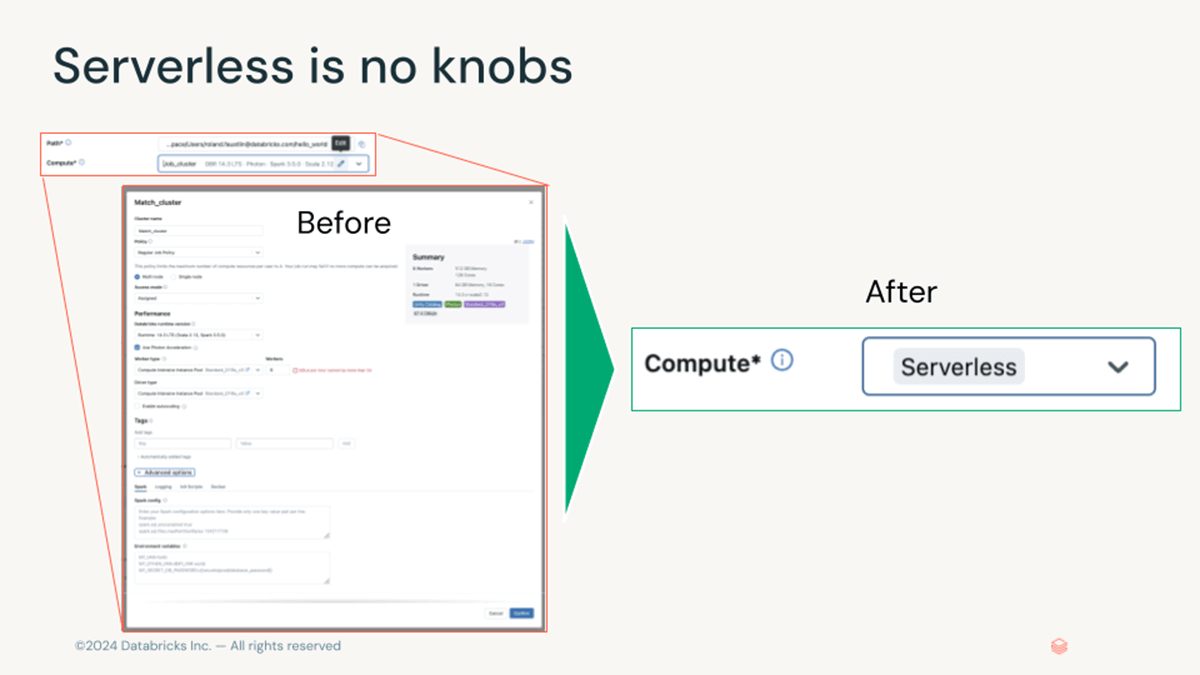

- Serverless compute for Workflows: We were thrilled to announce the general availability of serverless compute for Notebooks, Workflows, and Delta Live Tables at DAIS. This offering has been rolled out to most Databricks regions, bringing the benefits of performance-focused fast startup, scaling, and infrastructure-free management to your workflows. Serverless compute removes the need for complex configuration and is significantly easier to manage than classic clusters.

What’s Next for Databricks Workflows?

Looking ahead, 2024 promises to be another year of significant advancements for Databricks Workflows. Here’s a sneak peek at some of the exciting features and enhancements on the horizon:

Streamlining Workflow Management

The upcoming enhancements to Databricks Workflows are focused on improving clarity and efficiency in managing complex workflows. These changes aim to make it easier for users to organize and execute sophisticated data pipelines by introducing new ways to structure, automate, and reuse job tasks. The overall intent is to simplify the orchestration of complex data processes, allowing users to manage their workflows more effectively as they scale.

Serverless Compute Enhancements

We’ll be introducing compatibility checks that make it easier to identify workloads that would easily benefit from serverless compute. We’ll also leverage the power of the Databricks Assistant to help users transition to serverless compute.

LakeFlow: A unified, intelligent solution for data engineering

During the summit we also introduced LakeFlow, the unified data engineering solution that consists of LakeFlow Connect (ingestion), Pipelines (transformation) and Jobs (orchestration). All of the orchestration improvements we discussed above will become a part of this new solution as we evolve Workflows into LakeFlow Jobs, the orchestration piece of LakeFlow.

Try the Latest Workflows Features Now!

We’re excited for you to experience these powerful new features in Databricks Workflows. To get started:
