Eight of the tech industry’s largest players are teaming up to launch the UALink Promoter Group, a new artificial intelligence hardware initiative detailed today.

The project focuses on developing an industry-standard method of linking together AI chips such as graphics processing units. The goal, the initiative’s backers detailed, is to ease the task of assembling AI clusters that contain a large number of chips. Improved infrastructure scalability is another goal.

The UALink Promoter Group is backed by chipmakers Intel Corp., Advanced Micro Devices Inc. and Broadcom Inc. Two of the industry’s three largest cloud providers, Microsoft Corp. and Google LLC, are participating as well, along with Meta Platforms Inc., Cisco Systems Inc. and Hewlett Packard Enterprise Co. The initiative is seen as a foil to Nvidia Corp.’s dominance in GPUs and all the systems around the chips.

The group plans to incorporate an official industry consortium in the third quarter to oversee the development effort. The UALink Consortium, as the body is set to be called, will release the first iteration of its AI interconnect specification later in the same quarter. The specification will be accessible to the companies that participate in the initiative.

Advanced AI models are typically trained using not one but multiple processors. Each processor runs a separate copy of the neural network being developed and trains it on a small subset of the training dataset. To complete the development process, the chips sync their copies of the neural network, which necessitates a channel through which those chips can exchange data with one another.
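The training pattern described above can be sketched in a few lines of plain Python. This is a toy illustration, not UALink code or any vendor's API: the one-weight model, the `all_reduce_mean` helper that stands in for the interconnect, and the sample data are all assumptions made for the example.

```python
# Toy data-parallel training in plain Python (no GPU or framework required).
# Model: y = w * x with a mean squared error loss. All names are illustrative.

def local_gradient(w, shard):
    """Gradient of the mean squared error over one worker's slice of the data."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for the interconnect: every worker receives the same average."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def train(shards, steps=200, lr=0.01):
    # Each worker keeps its own copy of the single weight, starting at 0.0.
    weights = [0.0] * len(shards)
    for _ in range(steps):
        # 1. Every worker computes a gradient on its own subset of the data.
        grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
        # 2. Workers exchange gradients; this is the traffic the interconnect carries.
        synced = all_reduce_mean(grads)
        # 3. Every worker applies the same update, so the copies stay in sync.
        weights = [w - lr * g for w, g in zip(weights, synced)]
    return weights

# Two workers, each holding half of a dataset generated by y = 3 * x.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
weights = train(shards)
```

In a real cluster the exchange in step 2 happens on every training step and involves far larger tensors, which is why the bandwidth and latency of the link between chips matter.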

That’s the requirement the UALink Consortium’s planned interconnect is meant to address. According to the group, the technology will make it possible to link together up to 1,024 AI accelerators in a single cluster. Additionally, UALink will be capable of connecting such clusters to network switches that can help optimize the flow of data traffic between the individual processors.

The consortium detailed that one of the features in the works is a capability for facilitating “direct loads and stores between the memory attached to accelerators.” Facilitating direct access to AI chips’ memory is a way of speeding up machine learning applications. Nvidia Corp. also provides an implementation of this technique in the form of GPUDirect, a technology available for its data center graphics cards.

Usually, a piece of data that travels from one GPU to another has to make several pit stops before reaching its destination. In particular, the information must travel through the central processing units of the servers that host the graphics cards. Nvidia’s GPUDirect technology makes it possible to bypass the CPU, which allows data to reach its destination faster and thereby speeds up processing.
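The difference between the two data paths can be modeled conceptually as follows. This sketch does not use GPUDirect or UALink interfaces; the `Accelerator` class, the host buffer and both copy functions are hypothetical stand-ins that only count how many times the data is copied.

```python
# Conceptual model only: accelerator memory is a bytearray, and each function
# returns the number of copies needed to move the data. Names are hypothetical.

class Accelerator:
    def __init__(self, size):
        self.memory = bytearray(size)

def staged_copy(src, dst, host_buffer, offset, length):
    """Move data via a buffer owned by the host CPU: two copies."""
    host_buffer[:length] = src.memory[offset:offset + length]   # device to host
    dst.memory[offset:offset + length] = host_buffer[:length]   # host to device
    return 2

def direct_store(src, dst, offset, length):
    """Write straight into the peer accelerator's memory: one copy."""
    dst.memory[offset:offset + length] = src.memory[offset:offset + length]
    return 1

gpu_a, gpu_b, gpu_c = Accelerator(8), Accelerator(8), Accelerator(8)
gpu_a.memory[0:4] = b"grad"

copies_staged = staged_copy(gpu_a, gpu_b, bytearray(8), 0, 4)
copies_direct = direct_store(gpu_a, gpu_c, 0, 4)
```

Both paths deliver the same bytes; the direct path simply skips the intermediate stop, which is the source of the speedup described above.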

The UALink Consortium is at least the third industry group focused on AI chips to have launched in the past five years.

AI clusters include not only machine learning accelerators but also CPUs that perform various supporting tasks. In 2019, Intel released an interconnect called CXL for linking AI accelerators to CPUs. It also established an industry consortium to promote the development and adoption of the standard.

CXL is a customized version of the widely used PCIe interconnect for linking together server components. Intel modified the latter technology with several AI-specific optimizations. One of those optimizations allows the interconnected CPUs and GPUs in an AI cluster to share memory with one another, which allows them to exchange data more efficiently.

Last year, Intel teamed up with Arm Holdings plc and several other chipmakers to launch an AI software consortium called the UXL Foundation. The group’s goal is to ease the task of developing AI applications that can run on multiple types of machine learning accelerators. The technology the UXL Foundation is developing to that end is based on oneAPI, a toolkit for building multiprocessor software that was originally developed by Intel.

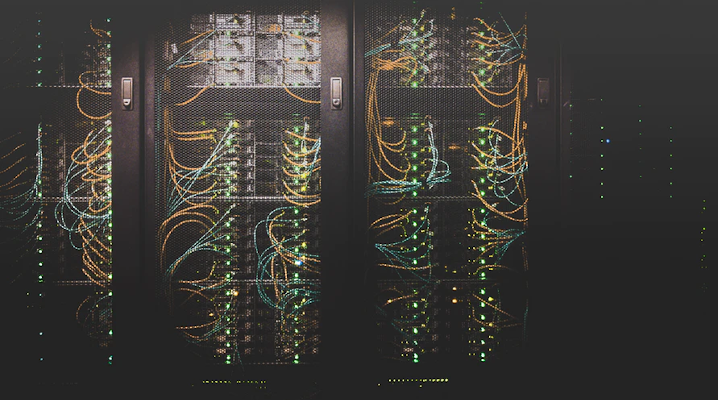

Image: Unsplash
