New Trends in AI-RAG and Graphs. I’ve been doing a bit of research on how to improve RAG with graphs. I’m especially interested in augmenting agentic RAG with knowledge graphs. A while back, Maya @Neo4j wrote a nice article on The Future of AI: Machine Learning and Knowledge Graphs, and its argument makes sense to me. Let me share some new, interesting stuff on RAG and graphs:

Graph RAG (GRAG). Unlike RAG approaches that focus solely on text-based entity retrieval, GRAG maintains an acute awareness of graph topology, which is crucial for generating contextually and factually coherent responses. The researchers claim that GRAG significantly outperforms current SOTA RAG methods while effectively mitigating hallucinations. Paper: GRAG: Graph Retrieval-Augmented Generation.

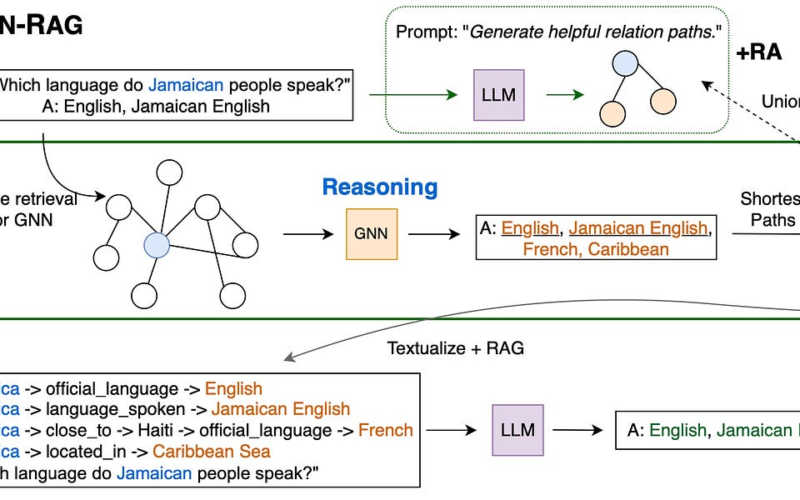
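To make "awareness of graph topology" a bit more concrete, here is a minimal sketch of retrieving a k-hop neighbourhood around a matched entity instead of a single text snippet. It is only an illustration under my own assumptions, not the method from the GRAG paper: the toy `GRAPH`, `k_hop_subgraph` and `verbalize` are hypothetical names invented for this example.

```python
from collections import deque

# Toy knowledge graph as an undirected adjacency list (hypothetical data).
GRAPH = {
    "Marie Curie": ["Pierre Curie", "Radium", "Nobel Prize"],
    "Pierre Curie": ["Marie Curie", "Nobel Prize"],
    "Radium": ["Marie Curie", "Chemistry"],
    "Nobel Prize": ["Marie Curie", "Pierre Curie", "Stockholm"],
    "Chemistry": ["Radium"],
    "Stockholm": ["Nobel Prize"],
}


def k_hop_subgraph(graph, seed, k):
    """Collect the edges traversed by a breadth-first search up to k hops from seed."""
    depth = {seed: 0}
    edges = set()
    queue = deque([seed])
    while queue:
        node = queue.popleft()
        if depth[node] == k:
            continue  # do not expand beyond k hops
        for neighbour in graph.get(node, []):
            # Store each undirected edge once, in a canonical order.
            edges.add(tuple(sorted((node, neighbour))))
            if neighbour not in depth:
                depth[neighbour] = depth[node] + 1
                queue.append(neighbour)
    return edges


def verbalize(edges):
    """Turn the retrieved edges into plain text that could be placed in an LLM prompt."""
    return "\n".join(f"{a} -- {b}" for a, b in sorted(edges))


if __name__ == "__main__":
    print(verbalize(k_hop_subgraph(GRAPH, "Radium", 2)))
```

The point of the sketch is that the retrieved context keeps the connections between entities, so the model sees how "Radium" relates to its neighbours rather than an isolated passage.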

Graph NNs+RAG. This paper introduces a novel method for combining the language-understanding abilities of LLMs with the reasoning abilities of Graph Neural Nets (GNNs) in a retrieval-augmented generation (RAG) style. The researchers claim that GNN-RAG achieves SOTA performance on two widely used KGQA benchmarks, outperforming or matching GPT-4. In addition, the researchers say that GNN-RAG excels on multi-hop and multi-entity questions, outperforming other approaches by 8.9–15.5%. Paper: GNN-RAG: Graph Neural Retrieval for LLM Reasoning.

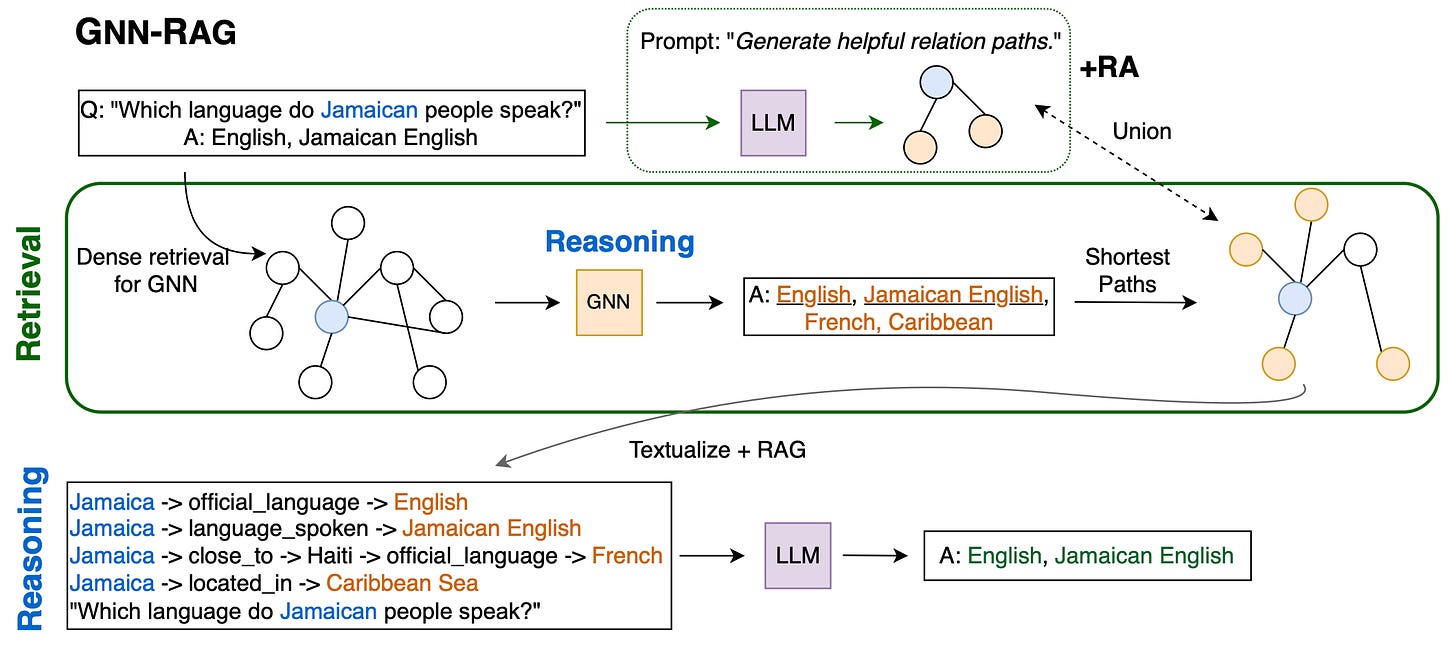

LlamaIndex+Neo4j: The Property Graph Index. Traditional knowledge graph representations like knowledge triples (subject, predicate, object) are limited. They lack the ability to: 1) assign labels and properties to nodes and relationships, 2) represent text nodes as vectors, and 3) perform both vector and symbolic retrieval. The Property Graph Index solves these issues. By using a labelled property graph representation, it enables far richer modelling, storage and querying of your knowledge graph. Blogpost: Introducing the Property Graph Index: A Powerful New Way to Build Knowledge Graphs with LLMs.

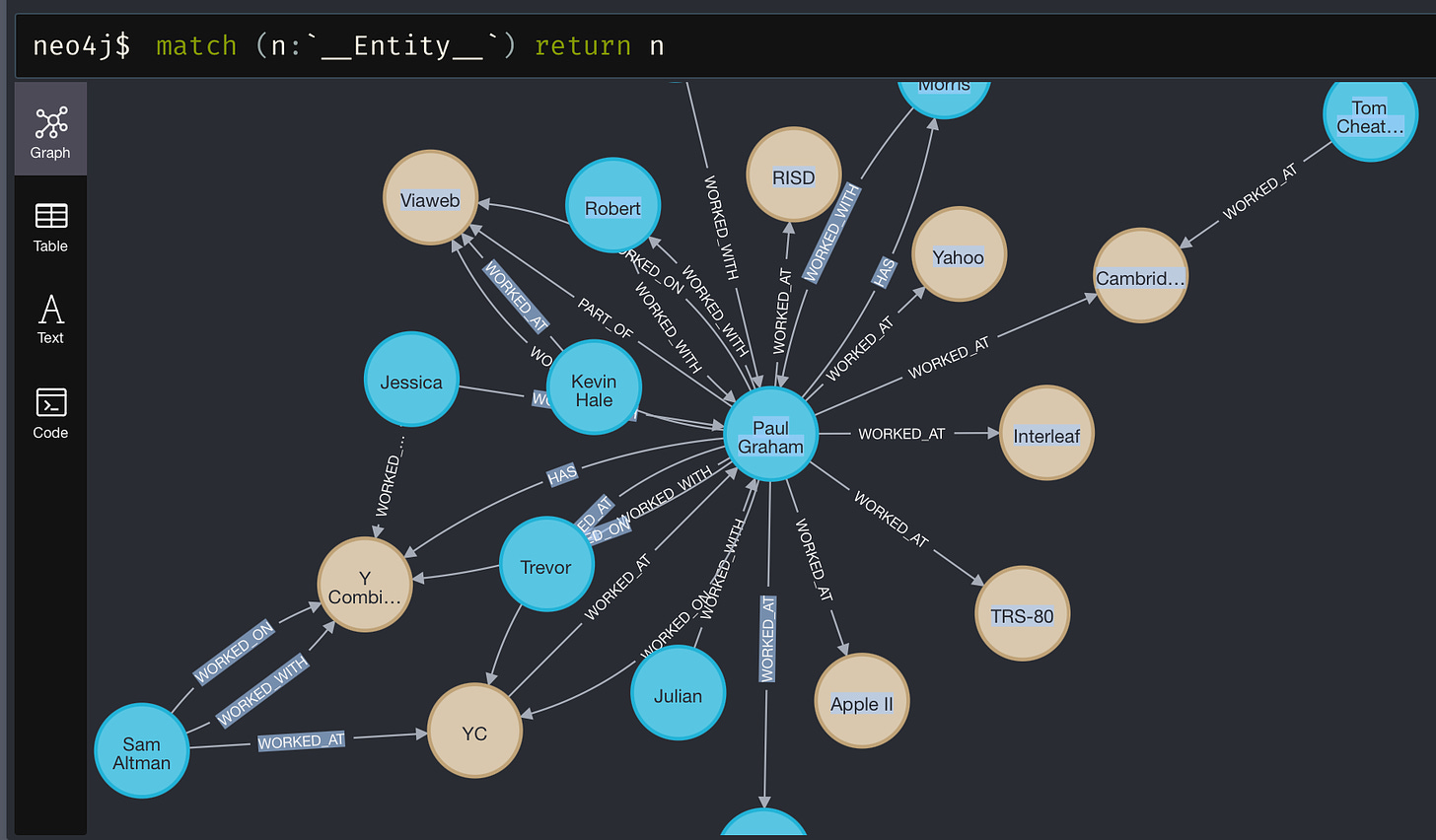
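To illustrate the difference from plain triples, here is a small sketch of a labelled property graph in plain Python, with a retrieval step that combines a symbolic filter (by label) with vector similarity. This is not the LlamaIndex or Neo4j API: `Node`, `Relationship`, `cosine` and `hybrid_search` are hypothetical names, and the two-dimensional embeddings are made up for the example.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    id: str
    label: str                                       # e.g. "Person" or "Chunk"
    properties: dict = field(default_factory=dict)   # arbitrary key/value data
    embedding: list = field(default_factory=list)    # optional vector for the node


@dataclass
class Relationship:
    source: str
    type: str
    target: str
    properties: dict = field(default_factory=dict)   # relationships carry properties too


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(nodes, label, query_embedding, top_k=1):
    """Symbolic step: keep nodes with the given label. Vector step: rank them by similarity."""
    candidates = [n for n in nodes if n.label == label and n.embedding]
    ranked = sorted(candidates, key=lambda n: cosine(n.embedding, query_embedding), reverse=True)
    return ranked[:top_k]


nodes = [
    Node("n1", "Person", {"name": "Ada"}, [1.0, 0.0]),
    Node("n2", "Person", {"name": "Grace"}, [0.0, 1.0]),
    Node("n3", "Chunk", {"text": "Ada wrote the first program."}, [0.9, 0.1]),
]
relationships = [Relationship("n3", "MENTIONS", "n1", {"confidence": 0.9})]

if __name__ == "__main__":
    best = hybrid_search(nodes, "Person", [0.8, 0.2])
    print(best[0].properties["name"])
```

A bare (subject, predicate, object) triple has nowhere to put the label, the `confidence` property or the embedding, which is the limitation the blogpost describes.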

Unifying RAG frameworks with LangGraph. I think this is one of the best practical implementations of advanced RAG I’ve seen. The idea is to combine three of the most advanced RAG frameworks using LangGraph: Corrective Retrieval Augmented Generation (CRAG), Self-Reflective Retrieval-Augmented Generation (Self-RAG), and an Adaptive QA framework. A great read! Blogpost: Unifying RAG Frameworks: Harnessing the Power of Adaptive Routing, Corrective Fallback, and Self-Correction using Langchain’s LangGraph
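The three ideas in that title (adaptive routing, corrective fallback, self-correction) can be sketched as ordinary control flow. The snippet below is a rough illustration in plain Python and deliberately does not use the LangGraph API or reproduce the blogpost's code: every function name is hypothetical, the keyword-based routing and grading rules are stand-ins for LLM calls, and `web_search` and `generate` are callables the caller is assumed to supply.

```python
def tokens(text):
    """Lower-cased words longer than three characters, with basic punctuation stripped."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in words if len(w) > 3}


def route(question):
    """Adaptive routing (toy rule): time-sensitive questions go straight to web search."""
    return "web_search" if "latest" in question.lower() else "vectorstore"


def is_relevant(question, doc):
    """Retrieval grading (toy rule): the document shares a keyword with the question."""
    return bool(tokens(question) & tokens(doc))


def is_grounded(draft, context):
    """Self-check (toy rule): every keyword in the draft appears in the context."""
    context_tokens = set().union(*(tokens(d) for d in context)) if context else set()
    return tokens(draft) <= context_tokens


def answer(question, docs, web_search, generate, max_retries=2):
    source = route(question)
    if source == "web_search":
        context = web_search(question)
    else:
        context = [d for d in docs if is_relevant(question, d)]
    if not context:
        # Corrective fallback: local retrieval found nothing useful.
        source, context = "web_search", web_search(question)
    for attempt in range(max_retries + 1):
        draft = generate(question, context, attempt)
        if is_grounded(draft, context):
            return draft, source
        # Self-correction: the draft was not supported by the context, so retry.
    return "I don't know.", source
```

In a graph framework each of these functions would become a node and the `if` statements would become conditional edges; the sketch only shows the decision logic.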

Multi-Agent Collaboration with LangGraph. If you’re building AI agents, this article shows you how to build an autonomous multi-agent research assistant using LangGraph. Blogpost: How to Build the Ultimate AI Automation with Multi-Agent Collaboration.

Have a nice week.

-

What We Learned from a Year of Building with LLMs (Part II & I)

-

Understanding the Cost of Generative AI Models in Production

-

[overview] New Falcon 2.0 11B: Next-gen Open-source LLMs & VLMs

-

ToonCrafter: A New, OSS Generative Cartoons Model (demo, repo, paper)

-

[interactive] FineWeb: Decanting The Web for the Finest Text Data at Scale

-

How to Bootstrap & Aggregate Multiple ReAct Agents with DSPy

-

MusePose: A Pose-Driven Img-2-Vid Framework for Virtual Human Generation

-

N-HiTS — Making DL for Time Series Forecasting More Efficient

-

How to Train & Finetune Embedding Models with Sentence Transformers v3

-

SymbCoT: Faithful Logical Reasoning via Symbolic Chain-of-Thought

-

Automatic Data Curation for S-S Learning: A Clustering-Based Approach

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.
