At DEV, our commitment to open-source development drives our decision-making process, especially regarding the tools and technologies we use. Our platform, which we open source as Forem, reflects this ethos. Forem is an open-source Rails application designed to foster community and content sharing. As a backstop for this approach, the base search is implemented in Postgres to serve small and straightforward use cases. However, Postgres search alone does not provide a sufficient experience for a high-traffic global network.

Latency is one of the few objective measures of user experience. If a request loads more slowly, users are going to leave and/or lose trust in the feature they are accessing. We have always treated this as a top priority, and performance is a must-have in search.

DEV’s core codebase needs to be hackable without third-party dependencies, but our production instance has always relied on third-party infrastructure for certain functionality. We saw an opportunity to enhance the core experience with a tool we trusted could deliver the user experience and scale we needed.

DEV is known as a lightweight, low-latency service accessible around the world. Our search experience, backed strictly by Postgres, has not been able to scale to meet those needs. Because the service uses a single database for reads and writes, expensive queries can also be a drag on the whole service.

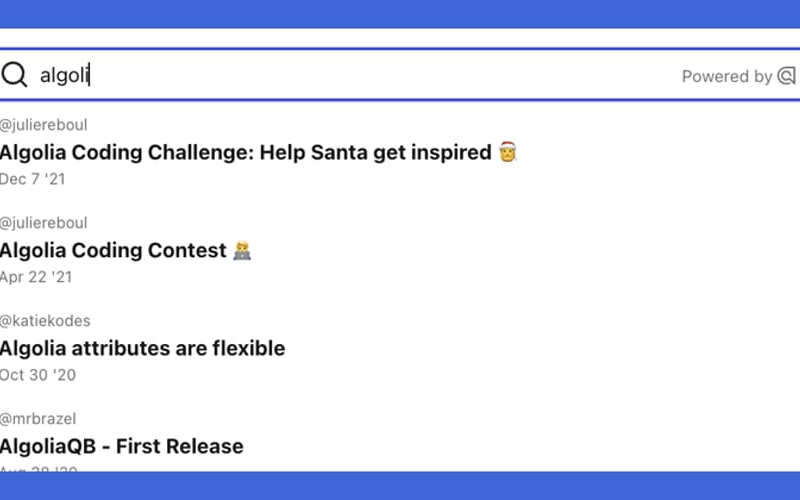

Enter Algolia, which we have implemented as a progressive enhancement on the core experience — in terms of both performance and experience. We integrated Algolia to leverage their product and infrastructure to level up the search experience while still allowing the app to be stood up and run at its core without this dependency.

The Starting Point: Postgres Search

Postgres search functionality has been a reliable foundation for Forem. It offers a robust set of features that are well-suited for many applications, particularly those that prioritize open-source tools. With Postgres, developers can start the Forem app without needing additional services. This setup is ideal for smaller projects or those just getting started, ensuring ease of development and deployment.
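
To make the baseline concrete, here is a minimal sketch of the kind of full-text query Postgres can serve on its own. It is not Forem's actual search code: the helper name, the `articles` table, and the `title` and `body_markdown` columns are assumptions for illustration. Only the Postgres functions (`to_tsvector`, `plainto_tsquery`, `ts_rank`) and the `@@` match operator are standard.

```ruby
# Hypothetical helper (not Forem's implementation): builds a parameterized
# Postgres full-text search query. Table and column names are assumptions.
def article_search_sql(limit: 20)
  <<~SQL
    SELECT id, title,
           ts_rank(to_tsvector('english', title || ' ' || body_markdown),
                   plainto_tsquery('english', $1)) AS rank
    FROM articles
    WHERE to_tsvector('english', title || ' ' || body_markdown)
          @@ plainto_tsquery('english', $1)
    ORDER BY rank DESC
    LIMIT #{Integer(limit)}
  SQL
end

# $1 is a bind parameter for the user's search terms, supplied at execution time.
puts article_search_sql(limit: 5)
```

A query like this needs no service beyond the database, which is what keeps local setup simple. It also runs inside the same database that handles every other read and write.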

The Challenge: Scaling with Traffic and Latency

As DEV’s popularity increased, so did the demands on our infrastructure. We began to experience latency issues that impacted user experience. While Postgres is powerful, handling the scale and speed required for our growing community necessitated a more responsive solution. This is where Algolia came into play.

We want our members to be treated to a great experience around the world, and our Postgres baseline did not allow us to provide that experience. And while we love Postgres and absolutely consider it the core of our experience, it was not practical to try to deliver the entire experience we wanted on top of it.

Between the infrastructure considerations and the time-suck of different approaches, it was also not practical for us to pursue another open source library for this task, as it would only have brought us part of the way there. We would be interested in future modularity where this was an option, but in terms of the best tool to reach for in our use case, Algolia made the most sense.

The Solution: Integrating Algolia for Progressive Enhancement

Algolia offers several advantages that align perfectly with our needs:

- Latency Reduction: Algolia’s architecture is optimized for speed, ensuring quick search results even under heavy load.

- Scalability: As our user base grows, Algolia scales effortlessly to meet increased demand.

- Advanced Functionality: Features like typeahead and AI enhancements are easily implemented with Algolia, allowing us to innovate rapidly.

Latency in Detail

In our out-of-the-box functionality, a search query is routed through our Rails application and into our Postgres database. Depending on the load of the system, bad-case-scenario queries took upwards of 1 second, and worst-case-scenario queries could hang or even time out.

Typical queries were in the “several hundred millisecond” range, which is acceptable, but not ideal.

We found ourselves with a best case scenario of “acceptability”, and no scenario with ideal results. Latency in the Algolia implementation can be as low as single-digit milliseconds.

After the change, 1s requests became 50ms requests, and some requests are faster still.

Queries go directly to the Algolia infrastructure without spending any time in our application, which saves time for the end user and also reduces load on our infrastructure. This also gives us the capacity to implement replication across geographies to ensure minimal latency globally for a service which is accessed around the world.

DEV already runs through edge caching, so most initial requests are rendered with minimal latency to our users across the globe. Dynamic requests (ones with variable outputs that are not suitable for caching) can have substantially varying results across different regions. For some web users, latency from a pure distance perspective can be over 200ms for a single round trip in a best case scenario.

You cannot change the speed of light, and global replication of our Postgres database is not an endeavor we have bandwidth for (maybe someday).
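
As a rough check on the 200ms figure, the sketch below computes the physical lower bound on a round trip. The distance (about 20,000 km, roughly the farthest two points on Earth can be from each other) and the fiber factor (light in optical fiber travelling at about two thirds of its vacuum speed) are assumptions. Real network paths are longer and add routing and processing time on top.

```ruby
SPEED_OF_LIGHT_KM_PER_S = 299_792.458 # speed of light in a vacuum
FIBER_FACTOR = 2.0 / 3.0              # assumption: signal speed in fiber is ~2/3 of c

# Lower bound on round-trip time, in milliseconds, for a given one-way distance.
def min_round_trip_ms(distance_km)
  one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_PER_S * FIBER_FACTOR)
  (2 * one_way_seconds * 1000).round(1)
end

# Assumed worst case: user and server on opposite sides of the planet.
puts min_round_trip_ms(20_000)
```

Under these assumptions the bound comes out at roughly 200ms before any server does any work, which is why serving dynamic requests from a nearby replica matters more than shaving time off the query itself.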

Maintaining Flexibility: Postgres as a Starting Point

One of the key benefits of our approach is that developers can still start the Forem app with Postgres. This maintains our commitment to open-source principles and ensures that the barrier to entry remains low. As projects grow and require enhanced functionality, Algolia can be integrated seamlessly, offering a clear path for progressive enhancement.
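
One way to picture this progressive enhancement is a backend choice that defaults to Postgres and only switches to Algolia when credentials are present. The sketch below is illustrative rather than Forem's actual code: the `SearchBackend` module, its method names, and both environment variable names are hypothetical.

```ruby
# Hypothetical sketch: choose a search backend from configuration.
# The module, method names, and environment variable names are assumptions.
module SearchBackend
  REQUIRED_KEYS = %w[ALGOLIA_APPLICATION_ID ALGOLIA_SEARCH_ONLY_API_KEY].freeze

  # True only when every required key is present and non-blank.
  def self.algolia_configured?(env)
    REQUIRED_KEYS.all? { |key| !env[key].to_s.strip.empty? }
  end

  # Falls back to Postgres so the app runs with core dependencies only.
  def self.choose(env = ENV)
    algolia_configured?(env) ? :algolia : :postgres
  end
end

puts SearchBackend.choose({}) # no credentials, so the Postgres baseline is used
```

Keeping the decision in one place means the rest of the application does not need to know which backend is answering, and a fresh checkout works without any third-party account.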

Pre-computed indexes are vital to a great search experience, but they are not necessarily practical to build and maintain in-house. However, there are also pitfalls to reaching for third-party tools if they lack effective libraries or you are not thoughtful about how the integration can complicate your business logic.

The algoliasearch-rails gem plugs into Rails models fairly seamlessly. However, to avoid overloading our model files, we created modules in the form of concerns.

```ruby
module AlgoliaSearchable
  module SearchableArticle
    extend ActiveSupport::Concern

    included do
      include AlgoliaSearch

      # Only records for which `indexable` returns true are sent to Algolia.
      algoliasearch(**DEFAULT_ALGOLIA_SETTINGS, if: :indexable) do
        # Nested author data stored alongside each article record.
        attribute :user do
          { name: user.name,
            username: user.username,
            profile_image: user.profile_image_90,
            id: user.id,
            profile_image_90: user.profile_image_90 }
        end

        searchableAttributes %w[title tag_list body user]

        attribute :title, :tag_list, :reading_time, :score, :featured, :comments_count,
                  :positive_reactions_count, :path, :main_image, :user_id, :public_reactions_count,
                  :public_reaction_categories

        # Computed attributes: timestamps as integers, body truncated to 1000 characters.
        add_attribute(:published_at) { published_at.to_i }
        add_attribute(:readable_publish_date) { readable_publish_date }
        add_attribute(:body) { processed_html.first(1000) }
        add_attribute(:timestamp) { published_at.to_i }

        # Replica indexes sorted by publish time, newest first and oldest first.
        add_replica("Article_timestamp_desc", per_environment: true) { customRanking ["desc(timestamp)"] }
        add_replica("Article_timestamp_asc", per_environment: true) { customRanking ["asc(timestamp)"] }
      end
    end

    class_methods do
      # Pushes index updates and deletions to a background job.
      def trigger_sidekiq_worker(record, delete)
        AlgoliaSearch::SearchIndexWorker.perform_async(record.class.name, record.id, delete)
      end
    end

    def indexable
      published && score.positive?
    end

    # Reports whether either field that `indexable` depends on has changed.
    def indexable_changed?
      published_changed? || score_changed?
    end
  end
end
```
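
The `indexable` guard in the concern decides which articles reach the index at all. To show the rule in isolation, the sketch below applies the same condition to a plain-Ruby stand-in; `ArticleStub` and the sample data are hypothetical and are not part of Forem or the gem.

```ruby
# Plain-Ruby stand-in for an Article record (hypothetical, not the real model).
ArticleStub = Struct.new(:title, :published, :score, keyword_init: true) do
  # Same rule as the concern: published articles with a positive score.
  def indexable
    published && score.positive?
  end
end

articles = [
  ArticleStub.new(title: "Published and liked", published: true, score: 12),
  ArticleStub.new(title: "Published, zero score", published: true, score: 0),
  ArticleStub.new(title: "Draft", published: false, score: 30)
]

# Only the first article passes the guard.
indexed_titles = articles.select(&:indexable).map(&:title)
puts indexed_titles.inspect
```

Drafts and low-scoring posts never reach the search index, which keeps it smaller and the results cleaner. As we read the gem's documentation, the companion `indexable_changed?` method lets it skip unnecessary sync calls when neither `published` nor `score` has changed.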

Embracing AI for Progressive Enhancement

Algolia’s AI enhancements add an exciting dimension to our progressive enhancement strategy. AI-powered features can provide more personalized and relevant search results, improving the overall user experience. This concept of AI-driven progressive enhancement is intriguing and has the potential to revolutionize how we think about scaling and optimizing web applications.

Algolia gave us the latency we needed to implement typeahead and put more focus into a fast and effective overall search experience, but it has also given us the capacity to add intelligence to the experience as we build.

We have already implemented “recommendations” when a user initially interacts with the search engine and we are able to find similar content the user may be interested in based on the page they are on before they begin their search. We will also be exploring the neural search functionality of Algolia to further enhance the experience.

This is all done as a progressive enhancement, which is a matter of both product design and engineering considerations. We have layered this onto the core experience that the open source version of the platform is able to achieve out of the box, without necessarily having to develop with the Algolia dependency locally. The app can still be containerized and run with the core dependencies.

Success and Future Prospects

Our journey from Postgres to Algolia has been a success. We’ve managed to retain the simplicity and openness of Postgres while leveraging Algolia’s powerful capabilities to handle increased traffic and reduce latency. The AI enhancements offered by Algolia represent an exciting frontier, and we’re eager to explore their full potential.

In summary, our experience underscores the value of progressive enhancement, starting with robust open-source tools and scaling with advanced solutions like Algolia. This approach ensures flexibility, scalability, and continuous improvement, ultimately providing a better experience for our community.

By sharing our journey, we hope to inspire other developers to consider similar strategies, balancing the simplicity of open-source tools with the power of advanced technologies to achieve optimal performance and scalability.

Stay tuned for more big improvements. Happy coding. ❤️
