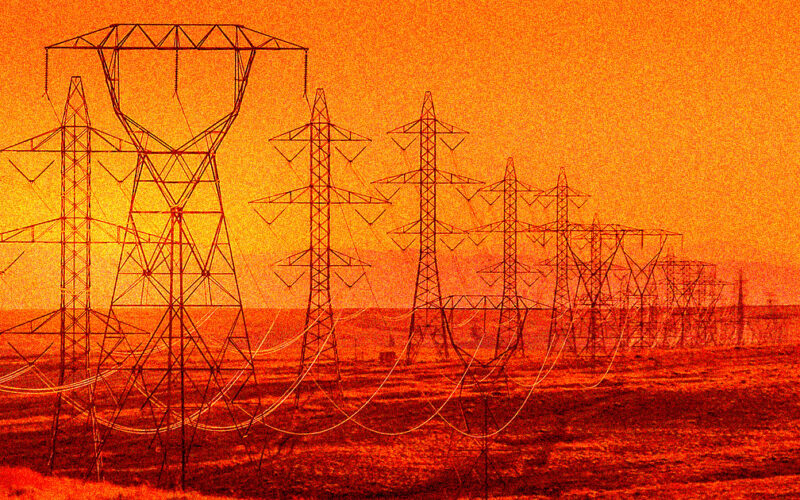

AI companies will have to get creative — or run out of power.

McGrid

Keeping generative AI models running requires astronomical amounts of electricity — and the United States’ aging power grid is struggling to handle the load.

As CNBC reports, experts are worried that the massive surge in interest in the tech could prove to be a major infrastructure problem. Transformers used to turn raw electricity into usable power are on average 38 years old and have quickly become a prime source of power outages. Building new transmission lines has also proven unpopular, since the extra costs tend to be passed on to local residents, raising their electric bills.

And it’s not just power that’s proving to be a bottleneck for generative AI — the data centers that power it also need copious amounts of water to keep cool.

According to recent estimates by Boston Consulting Group, demand for data centers is rising at a brisk pace in the US, and the facilities are expected to account for 16 percent of total US power consumption by 2030. Whether the country’s aging infrastructure will be able to support such a massive load remains to be seen.

Power Play

AI companies are already feeling the crunch. Jeff Tench, an executive at data center company Vantage, told CNBC that there’s a “slowdown” in Northern California because of a “lack of availability of power from the utilities here in this area.”

“The industry itself is looking for places where there is either proximate access to renewables, either wind or solar, and other infrastructure that can be leveraged,” he added, “whether it be part of an incentive program to convert what would have been a coal-fired plant into natural gas, or increasingly looking at ways in which to offtake power from nuclear facilities.”

Tech leaders have floated exotic ideas for meeting sky-high energy demands. OpenAI CEO Sam Altman told audiences at this year’s World Economic Forum that the AI models of tomorrow would require a “breakthrough” — spurring him to invest in fusion power himself.

But despite decades of research, fusion energy currently remains largely hypothetical.

Other companies like Microsoft are looking into developing “small modular reactors” (basically scaled-down nuclear power plants) that could supply data centers with power on site.

Chipmakers are also hoping to reduce power demand by increasing the efficiency of AI chips.

But whether any of those efforts will be enough to meet the seemingly insatiable power demands of AI companies is anything but certain.

Meanwhile, the carbon footprint of generative AI continues to soar, with Google admitting in its latest environmental impact report that it’s woefully behind its plan to reach net-zero carbon emissions by 2030.

More on generative AI: Washington Post Launches AI to Answer Climate Questions, But It Won’t Say Whether AI Is Bad for the Climate
