You’ve probably tracked down plenty of bugs through error logs, and you’ve probably also been frustrated countless times by long, convoluted logs.

I often go through the agony of log review myself, usually because:

- Log files are so large that it’s difficult to even open them.

- Information from different modules is tangled together, so the order of events across modules is unclear.

- The logs are split into multiple files, forcing constant switching between them.

Not only are the logs hard to stomach, but the tools for viewing them are also not user-friendly:

- After searching for a keyword and jumping around a bit, I often forget what I was doing (Who am I, where am I, what am I doing?).

- When I want to filter logs by a keyword, some tools don’t support it at all.

- Just when I’ve got my analysis figured out, a colleague comes over and I no longer know where to start explaining it.

These issues fall into two categories: first, the tools themselves fall short on functionality; second, we lack a systematic method for troubleshooting from logs.

For the former, we need a dedicated log viewer, not just a text editor; for the latter, I’ve summarized some methods that I’d like to share.

Visualization of Thought Process

The general steps for troubleshooting logs are: Understand the problem => Locate the error => Examine the context => Infer the cause of the error.

In this process, if you think of the log as a one-dimensional line, reviewing it means jumping back and forth along that line, gathering information, and drawing conclusions.

The problems mentioned above, such as forgetting where you were in the log or not knowing where to start when explaining to others, arise because we don’t record our browsing path, the information we gather, the doubts we raise, and our tentative hypotheses as we go.

What is the most suitable way to record and visualize these elements? I believe it is the “Timeline.”

How do we use a timeline? Imagine you are organizing historical events: when you find something valuable in the log (a significant event), you place that spot (the log line) on the timeline, where it becomes a “node.”

At first, the timeline is just a bookmark bar, helping us record location information. For example, where the error occurred, what was happening at a certain time, what happened before or after something else, etc.

Next, as we read the log alongside the timeline, doubts gradually emerge. If a place seems suspicious, we add a comment to that node; if a printed value looks wrong, we color that node yellow… This is very similar to a mind map.
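The timeline described above is essentially an ordered list of annotated bookmarks. Here is a minimal Python sketch. It assumes a node needs only a line number, the line text, a comment, and a color mark. The names `Node`, `Timeline`, and `Mark` are hypothetical and not taken from Loginsight.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mark(Enum):
    """Hypothetical highlight colors for a node."""
    PLAIN = "plain"
    YELLOW = "yellow"  # e.g. a printed value that looks wrong
    RED = "red"        # e.g. the line where the error surfaced


@dataclass
class Node:
    line_no: int       # where the event sits in the log (the "bookmark")
    text: str          # the log line itself
    comment: str = ""  # doubts or tentative hypotheses
    mark: Mark = Mark.PLAIN


@dataclass
class Timeline:
    nodes: list = field(default_factory=list)

    def add(self, line_no: int, text: str) -> Node:
        node = Node(line_no, text)
        self.nodes.append(node)
        # Keep nodes in log order so the timeline reads like the log itself.
        self.nodes.sort(key=lambda n: n.line_no)
        return node

    def annotate(self, line_no: int, comment: str, mark: Mark = Mark.YELLOW) -> None:
        for node in self.nodes:
            if node.line_no == line_no:
                node.comment, node.mark = comment, mark
                return
        raise KeyError(f"no node at line {line_no}")

    def render(self) -> str:
        """Plain-text view of the timeline, e.g. to share with a colleague."""
        rows = []
        for n in self.nodes:
            row = f"{n.line_no}: [{n.mark.value}] {n.text}"
            if n.comment:
                row += f"  # {n.comment}"
            rows.append(row)
        return "\n".join(rows)


timeline = Timeline()
timeline.add(1200, "ERROR upload failed")
timeline.add(850, "INFO token refreshed")
timeline.annotate(850, "refresh happens right before the failure?")
print(timeline.render())
```

Nodes are added in whatever order they are discovered, but the timeline always reads in log order.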

On the surface, this process is about organizing log events along the “timeline,” but in essence it is an examination of our own thought process (identifying suspicious points, connecting what came before and after, and building an argument). In other words, at this point the “timeline” is already visualizing our thought process.

Visualization brings another benefit: we can send the organized timeline to a colleague to walk them through our log analysis. Isn’t that more intuitive than a plain-text description?

Primary + Auxiliary

The “timeline” mentioned above gives us a consistent place to record, edit, and visualize our thoughts without being distracted or interrupted by other information.

While the timeline helps us maintain our thought process along the dimension of time, the “filter window” helps us keep our thought process on track along the dimension of information.

In log troubleshooting, it’s common to filter logs containing a specific keyword to focus on a particular topic (information filtering). But is filtering enough?

Filtering loses information. We still need to know where the filtered entries sit in the full log, how they are distributed, what the surrounding context (other modules) is doing at the same time, and how they relate to the nodes (events) on the timeline before and after them…

In other words, the auxiliary information produced by keyword filtering has value of its own, but it also has to be read against the main log to yield more. The two views must be both parallel and intertwined.

So how can this tension be resolved?

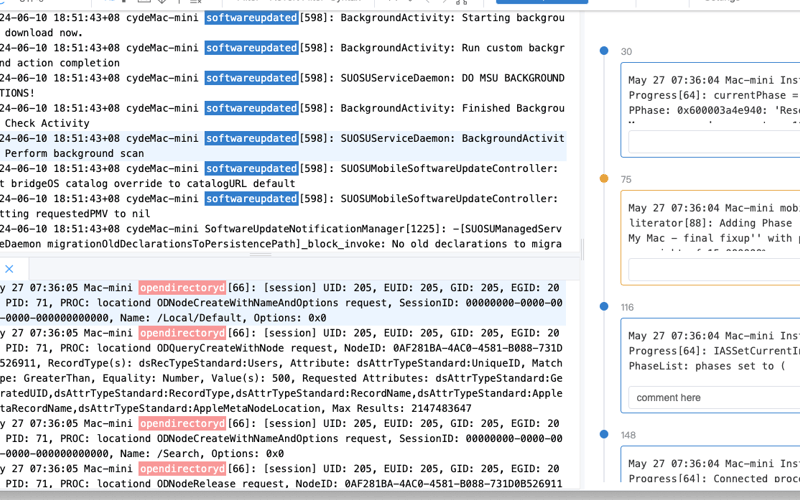

There are three major areas in the image above: the main window in the upper left, the filter pane in the lower left, and the timeline on the right.

I treat keyword-filtered lines as auxiliary information and place them in the filter pane, while the main window shows the complete log, so the two can be viewed in parallel.
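What keeps the filter pane from losing information is that each hit remembers its position in the full log. The sketch below is a minimal Python illustration of that idea. The helpers `filter_lines` and `context` are hypothetical names, and the post does not describe how Loginsight implements this.

```python
import re


def filter_lines(lines, pattern):
    """Return (original_index, line) pairs for lines matching pattern.

    Keeping the original index is what lets a click in the filter pane
    scroll the main window to the same line.
    """
    rx = re.compile(pattern)
    return [(i, line) for i, line in enumerate(lines) if rx.search(line)]


def context(lines, index, radius=2):
    """Return the lines surrounding `index` in the full log."""
    lo = max(0, index - radius)
    return lines[lo:index + radius + 1]


log = [
    "10:00:00 net connect",
    "10:00:01 db open",
    "10:00:02 net timeout",
    "10:00:03 ui refresh",
    "10:00:04 net retry",
]
hits = filter_lines(log, r"\bnet\b")
print([i for i, _ in hits])                # positions of the hits in the full log
print(context(log, hits[1][0], radius=1))  # what other modules were doing around the timeout
```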

At the same time, time serves as the link connecting the main window, the filter pane, and the timeline: click an event in any one of them, and the other two immediately jump to the corresponding position. This is how the information is interwoven.
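One way to use time as the link is a binary search over each view’s timestamps. Here is a minimal Python sketch. It assumes every line starts with an `HH:MM:SS.mmm` timestamp and that timestamps never decrease within a log. The helpers `parse_ts` and `locate` are hypothetical.

```python
from bisect import bisect_left
from datetime import datetime


def parse_ts(line):
    # Assumption: every line starts with an "HH:MM:SS.mmm" timestamp.
    return datetime.strptime(line[:12], "%H:%M:%S.%f")


def locate(times, t):
    """Index of the first entry at or after time t, clamped to the last entry.

    `times` must be sorted in ascending order.
    """
    if not times:
        raise ValueError("no entries to locate in")
    return min(bisect_left(times, t), len(times) - 1)


main = [
    "10:00:00.100 net connect",
    "10:00:00.250 db open",
    "10:00:01.000 net timeout",
    "10:00:02.500 ui refresh",
]
filtered = [line for line in main if " net " in line]

main_times = [parse_ts(line) for line in main]
filtered_times = [parse_ts(line) for line in filtered]

# A timeline node at 10:00:01.000 is clicked: sync the main window and the filter pane.
t = parse_ts("10:00:01.000 (timeline node)")
print(main[locate(main_times, t)])
print(filtered[locate(filtered_times, t)])
```

Jumping to the first entry at or after the clicked time is one design choice. Snapping to the nearest entry in either direction would work equally well.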

TAG

Reading logs is rarely a matter of searching for one or two keywords (that amounts to little more than a cursory glance); it usually involves many. So it is best to record the keywords you have searched for and, ideally, mark each one with a different color.

So, I also designed a TAG bar to record the currently highlighted keywords.
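A TAG bar can be modeled as an ordered map from keyword to color, plus a way to find the spans to highlight in each line. The Python sketch below assumes colors are simply handed out in turn from a fixed palette. `TagBar` and `PALETTE` are hypothetical names.

```python
import re
from itertools import cycle

# Hypothetical palette; a real viewer would use actual display colors.
PALETTE = ["yellow", "cyan", "magenta", "green"]


class TagBar:
    """Records highlighted keywords, each with its own color."""

    def __init__(self):
        self._colors = cycle(PALETTE)
        self.tags = {}  # keyword -> color, kept in the order added

    def add(self, keyword):
        if keyword not in self.tags:
            self.tags[keyword] = next(self._colors)
        return self.tags[keyword]

    def remove(self, keyword):
        self.tags.pop(keyword, None)

    def spans(self, line):
        """(start, end, color) for every tagged keyword occurrence in line."""
        found = []
        for keyword, color in self.tags.items():
            for m in re.finditer(re.escape(keyword), line):
                found.append((m.start(), m.end(), color))
        return sorted(found)


bar = TagBar()
bar.add("timeout")
bar.add("retry")
print(bar.spans("net timeout, retry 1"))
```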

Segmented log

Of course, segmented logs must not be forgotten either. The most direct and intuitive way to handle them is to reassemble them! By presenting the segments in memory as one continuous log, we can forget during analysis that they were ever split and work seamlessly, as if analyzing a single large log.

Different segmentation schemes call for different reassembly rules, and a tool must not overlook this.
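Reassembly can be done as a virtual view: order the segments, then map a global line index back to a segment and local line. The Python sketch below assumes logrotate-style names, where `app.log` is the newest segment and `app.log.N` gets older as N grows. The ordering rule is a pluggable `order_key`, so another segmentation scheme only needs a different key function. `JoinedLog` and `rotation_index` are hypothetical names.

```python
import re
from bisect import bisect_right


def rotation_index(name):
    """Assumed logrotate-style naming: app.log is newest, app.log.N gets older as N grows."""
    m = re.search(r"\.log(?:\.(\d+))?$", name)
    if m is None:
        raise ValueError(f"unrecognized segment name: {name}")
    return int(m.group(1) or 0)


class JoinedLog:
    """Read-only view that presents several segments as one continuous log."""

    def __init__(self, segments, order_key):
        # segments: {file name: list of lines}; order_key sorts names oldest first.
        self.names = sorted(segments, key=order_key)
        self.segments = [segments[n] for n in self.names]
        self.starts = []  # global index of each segment's first line
        total = 0
        for seg in self.segments:
            self.starts.append(total)
            total += len(seg)
        self.total = total

    def __len__(self):
        return self.total

    def _find(self, i):
        if not 0 <= i < self.total:
            raise IndexError(i)
        k = bisect_right(self.starts, i) - 1
        return k, i - self.starts[k]

    def locate(self, i):
        """Map a global line index back to (file name, line index in that file)."""
        k, j = self._find(i)
        return self.names[k], j

    def __getitem__(self, i):
        k, j = self._find(i)
        return self.segments[k][j]


segments = {"app.log": ["c1", "c2"], "app.log.1": ["b1"], "app.log.2": ["a1", "a2"]}
log = JoinedLog(segments, order_key=lambda name: -rotation_index(name))
print([log[i] for i in range(len(log))])
print(log.locate(3))
```

Only the index table is built up front, so the segments themselves are never copied into one big list.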

More

To review logs “happily,” features such as jumping to a specific line, navigating back and forward, reverse search, and font settings are also indispensable.
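Back/forward navigation over visited lines is usually a pair of stacks, as in a web browser. Here is a minimal Python sketch. It assumes a position is just a line number. `NavHistory` is a hypothetical name.

```python
class NavHistory:
    """Browser-style back/forward over visited line numbers."""

    def __init__(self, start=0):
        self.back_stack = []
        self.forward_stack = []
        self.current = start

    def jump(self, line_no):
        # A fresh jump discards the forward history, as in a web browser.
        self.back_stack.append(self.current)
        self.forward_stack.clear()
        self.current = line_no

    def back(self):
        if self.back_stack:
            self.forward_stack.append(self.current)
            self.current = self.back_stack.pop()
        return self.current

    def forward(self):
        if self.forward_stack:
            self.back_stack.append(self.current)
            self.current = self.forward_stack.pop()
        return self.current


nav = NavHistory()
nav.jump(120)
nav.jump(4500)
print(nav.back())     # 120
print(nav.back())     # 0
print(nav.forward())  # 120
```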

Having worked out these methods for log analysis, and finding existing tools inconvenient, I naturally decided to write one myself!

Please have a look at the log analysis tool I’ve created: “Loginsight” (available on the Mac App Store).
