
The well-funded French AI startup Mistral, known for its powerful open source AI models, launched two new entries in its growing family of large language models (LLMs) today: a math-focused model, and a code-generating model for programmers and developers that is built on Mamba, a new architecture introduced by other researchers late last year.

Mamba seeks to improve on the efficiency of the transformer architecture used by most leading LLMs by replacing its attention mechanism with a selective state space model. Unlike the more common transformer-based models, Mamba-based models could offer faster inference and handle longer context. Other companies and developers, including AI21, have released new AI models based on it.
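To give a rough sense of why a recurrent, state-based design can scale better with input length than full self-attention, here is a toy Python sketch. It is not Mamba and not Mistral's code: the function names and the scalar recurrence `h_t = a * h_(t-1) + b * x_t` are illustrative assumptions. It only contrasts work that grows with the square of the input length against work that grows linearly.

```python
def attention_pair_count(n_tokens: int) -> int:
    """Token-to-token comparisons in full self-attention:
    every token is compared with every token, so work grows as n^2."""
    return n_tokens * n_tokens


def recurrent_step_count(n_tokens: int) -> int:
    """State updates in a recurrent scan: one per token, so work grows as n."""
    return n_tokens


def linear_scan(inputs, a=0.9, b=0.1):
    """Toy scalar linear recurrence h_t = a * h_(t-1) + b * x_t.

    Hypothetical illustration only: real state space models use
    learned, input-dependent parameters and vector-valued states.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = a * h + b * x  # a fixed-size state carries all past context
        outputs.append(h)
    return outputs


if __name__ == "__main__":
    n = 256_000
    print("pairwise comparisons:", attention_pair_count(n))
    print("recurrent steps:", recurrent_step_count(n))
```

The point of the sketch is the fixed-size state: each new token costs one update regardless of how much text came before it, which is the intuition behind the speed and context-length claims.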

Now, using this new architecture, Mistral’s aptly named Codestral Mamba 7B offers a fast response time even with longer input texts. Codestral Mamba works well for code productivity use cases, especially for more local coding projects.

The model will be free to use on Mistral’s la Plateforme API. Mistral said it tested the model on inputs of up to 256,000 tokens, double the context window of OpenAI’s GPT-4o.

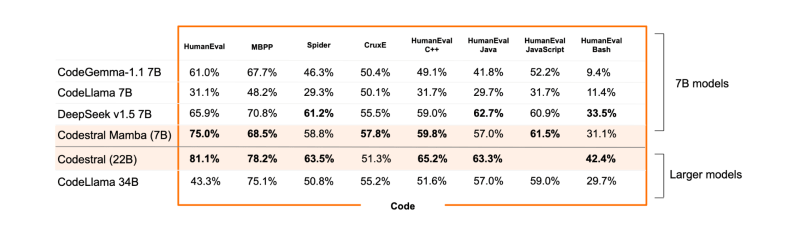

In benchmarking tests, Mistral showed that Codestral Mamba outperformed rival open source models CodeLlama 7B, CodeGemma-1.1 7B, and DeepSeek on the HumanEval benchmark.

Developers can modify and deploy Codestral Mamba from its GitHub repository and through HuggingFace. It will be available with an open source Apache 2.0 license.

Mistral claimed the earlier version of Codestral outperformed other code generators like CodeLlama 70B and DeepSeek Coder 33B.

Code generation and coding assistants have become widely used applications for AI models, with platforms like GitHub’s Copilot (powered by OpenAI), Amazon’s CodeWhisperer, and Codeium gaining popularity.

Mathstral is suited for STEM use cases

Mistral’s second model launch is Mathstral 7B, an AI model designed specifically for math-related reasoning and scientific discovery. Mistral developed Mathstral with Project Numina.

Mathstral has a 32K context window and will be under an Apache 2.0 open source license. Mistral said the model outperformed every model designed for math reasoning. It can achieve “significantly better results” on benchmarks with more inference-time computations. Users can use it as is or fine-tune the model.
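The article does not say how the extra inference-time computation is spent. One common approach, shown here only as an assumption, is to sample several candidate answers and keep the most frequent one (majority voting). The helper name `majority_vote` and the sample answers are hypothetical.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer among sampled candidates.

    On a tie, the answer that was seen first wins.
    """
    if not answers:
        raise ValueError("need at least one candidate answer")
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical final answers from five independent samples of one problem.
    samples = ["42", "41", "42", "42", "40"]
    print(majority_vote(samples))
```

More samples mean more compute per question, which is the tradeoff the benchmark claim describes.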

“Mathstral is another example of the excellent performance/speed tradeoffs achieved when building models for specific purposes – a development philosophy we actively promote in la Plateforme, particularly with its new fine-tuning capabilities,” Mistral said in a blog post.

Mathstral can be accessed through Mistral’s la Plateforme and HuggingFace.

Mistral, which tends to release its models under open source licenses, has been steadily competing against other AI developers like OpenAI and Anthropic.

It recently raised $640 million in Series B funding, bringing its valuation close to $6 billion. The company has also received investments from tech giants like Microsoft and IBM.
