(treety/Shutterstock)

Some of the biggest AI companies in the world are using material taken from thousands of content creators on YouTube to train their AI models without compensating the creators of those videos, ProofNews reported today.

According to the article by ProofNews authors Annie Gilbertson and Alex Reisner, AI companies like Anthropic, Apple, and Nvidia used a dataset called “YouTube Subtitles” that contained transcribed text from more than 173,000 YouTube videos to train their models.

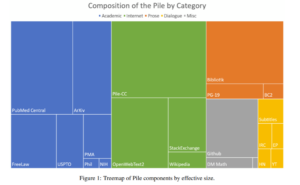

YouTube Subtitles is part of a larger, open-source dataset created by EleutherAI called the Pile. According to a 2020 paper by EleutherAI researchers, the Pile is composed of 800GB of text pulled from 22 “high-quality” sources, including YouTube, GitHub, PubMed, HackerNews, Books3, the US Patent and Trademark Office, Stack Exchange, English-language Wikipedia, and a collection of Enron employee emails that the US government released as part of its investigation.

Getting real-world text, such as the text in the Pile, is essential for improving the output of large language models, the EleutherAI authors write.

“Our evaluation of the untuned performance of GPT-2 and GPT-3 on the Pile shows that these models struggle on many of its components, such as academic writing,” they write. “Conversely, models trained on the Pile improve significantly over both Raw CC and CC-100 on all components of the Pile, while improving performance on downstream evaluations.”

Some of the biggest AI companies in the world have turned to the Pile to train their AI models. In addition to the companies mentioned above, Bloomberg, Databricks, and Salesforce have documentation showing that they’ve used the Pile to train their AI models, ProofNews reported. While it’s unclear if OpenAI used the Pile, it has used YouTube Subtitles to train its AI models, the New York Times reported earlier this year.

The ProofNews article brings to the forefront thorny issues of content ownership on a free and open Web and of what constitutes “fair use,” the legal principle that allows journalists, for example, to replicate copyrighted content without first obtaining permission.

“No one came to me and said, ‘We would like to use this,’” said David Pakman, host of “The David Pakman Show,” according to the ProofNews article. “This is my livelihood, and I put time, resources, money, and staff time into creating this content.”

(Source: The David Pakman Show)

Content creators are particularly fearful that tech giants will use their content to train AI models that generate new content that could compete with them in the future. While AI-generated content isn’t mainstream now, it could be in the near future, they say, and that should at least warrant a conversation.

“It’s theft,” Dave Wiskus, the CEO of Nebula, a developer of videos, podcasts, and classes, told ProofNews. “Will this be used to exploit and harm artists? Yes, absolutely.”

EleutherAI is reportedly working on the Pile version 2, which will be much bigger than the original version released in December 2020. The new version will also take into account issues like copyright and data licensing, the organization told VentureBeat earlier this year.

This isn’t the first time authors, actors, and other content creators have spoken out against their work being used to train LLMs. Comedian Sarah Silverman sued OpenAI for copyright infringement in 2023, as did a group of authors.

