See the REFORMS preprint and checklist on our project website.

Every music producer is looking for the next hit song. So when a paper claimed that machine learning can predict hit songs with 97% accuracy, it must have been music to their ears. News outlets, including Scientific American and Axios, published pieces about how this “frightening accuracy” could revolutionize the music industry. Earlier studies had found that it is hard to predict in advance whether a song will be successful, so this paper seemed to be a dramatic achievement.

Unfortunately for music producers, we found that the study’s results are bogus.

The model presented in the paper exhibits one of the most common pitfalls in machine learning: data leakage. Roughly, this means that the model is evaluated on the same data it was trained on, or on very similar data, which inflates estimates of accuracy. In the real world, the model would perform far worse. This is like teaching to the test or, worse, giving away the answers before an exam.
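To make the idea concrete, here is a minimal sketch of one form of leakage: duplicate rows that end up on both sides of a train/test split. Everything in it is hypothetical. The songs, features, and labels are random, so there is nothing real to learn, and the lookup-table "model" is only an illustration, not the method used in the hit song paper. Even so, the leaky split should report accuracy far above chance, while splitting by song should bring it back to roughly 50%.

```python
import random

def make_songs(n_songs, rng):
    """Hypothetical data: random features and a random hit/flop label (no real signal)."""
    rows = []
    for song_id in range(n_songs):
        features = (rng.random(), rng.random())
        label = rng.randint(0, 1)
        # Each song appears twice, mimicking duplicate entries in a dataset.
        rows.append((song_id, features, label))
        rows.append((song_id, features, label))
    return rows

def memorizer_accuracy(train, test):
    """Fit a lookup table on the training rows and score it on the test rows."""
    table = {features: label for _, features, label in train}
    # Rows never seen in training fall back to a default guess of 0.
    correct = sum(table.get(features, 0) == label for _, features, label in test)
    return correct / len(test)

def leaky_split(rows, rng, train_frac=0.75):
    """Shuffle individual rows, so copies of one song can land in both train and test."""
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]

def grouped_split(rows, rng, train_frac=0.75):
    """Split by song id, so every copy of a song stays on one side of the split."""
    song_ids = sorted({song_id for song_id, _, _ in rows})
    rng.shuffle(song_ids)
    train_ids = set(song_ids[: int(len(song_ids) * train_frac)])
    train = [row for row in rows if row[0] in train_ids]
    test = [row for row in rows if row[0] not in train_ids]
    return train, test

rng = random.Random(0)
rows = make_songs(1000, rng)
leaky_acc = memorizer_accuracy(*leaky_split(rows, rng))
clean_acc = memorizer_accuracy(*grouped_split(rows, rng))
print(f"accuracy with leaky split:   {leaky_acc:.2f}")
print(f"accuracy with grouped split: {clean_acc:.2f}")
```

The only difference between the two numbers is how the data was split. The model has learned nothing about what makes a song a hit in either case.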

This is far from the only example. Just last month, leakage was discovered in a prominent oncology paper from 2020. This one had been published in Nature, one of the most prestigious scientific journals, and had accumulated over 600 citations before the error was found.

Leakage is embarrassingly common in scientific research that uses ML. Earlier this month, our paper “Leakage and the reproducibility crisis in machine learning-based science” was published in the peer-reviewed journal Patterns. We found that leakage has caused errors in dozens of scientific fields and affected hundreds of papers.

Leakage is one of many errors in ML-based science. One reason such errors are common is that ML has been haphazardly adopted across scientific fields, and standards for reporting ML results in papers haven’t kept pace. Past research in other fields has found that reporting standards are useful in improving the quality of research, but such standards do not exist for ML-based science outside a few fields.

Leakage is an example of an execution error—it creeps in when conducting the analysis. Also common are errors of interpretation, which have more to do with how a study’s findings are described in the paper and understood by others.

For example, a systematic review found that it is common for papers proposing clinical prediction models to spin their findings—for instance, by claiming that a model is fit for clinical use without evidence that it works outside the specific conditions it was tested in. These errors don’t necessarily overstate the accuracy of the model. Instead, they oversell where and when it can be effectively used.

This is just one type of interpretation error. Another frequent oversight is not clarifying the level of uncertainty in a model’s output. Misjudging this can lead to misplaced confidence in the model. And many studies don’t precisely define the phenomenon being modeled, which leads to a lack of clarity about what a study’s results even mean.
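As an illustration of what reporting uncertainty can look like, here is a minimal sketch that puts a percentile bootstrap interval around a test-set accuracy. The data are made up (a hypothetical test set of 50 predictions, 40 of them correct), and the bootstrap is just one common choice among several, not a method prescribed by the checklist.

```python
import random

def bootstrap_accuracy_interval(correct_flags, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for accuracy.

    correct_flags holds 1 for each correct test prediction and 0 for each wrong one.
    """
    rng = random.Random(seed)
    n = len(correct_flags)
    estimates = []
    for _ in range(n_resamples):
        # Resample the test set with replacement and recompute accuracy.
        sample = [correct_flags[rng.randrange(n)] for _ in range(n)]
        estimates.append(sum(sample) / n)
    estimates.sort()
    lower = estimates[int((alpha / 2) * n_resamples)]
    upper = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper

# Hypothetical test results: 40 correct predictions out of 50.
correct_flags = [1] * 40 + [0] * 10
point_estimate = sum(correct_flags) / len(correct_flags)
lower, upper = bootstrap_accuracy_interval(correct_flags)
print(f"accuracy = {point_estimate:.2f}, 95% interval = [{lower:.2f}, {upper:.2f}]")
```

With a test set this small, the interval is wide. Reporting only the point estimate would hide that.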

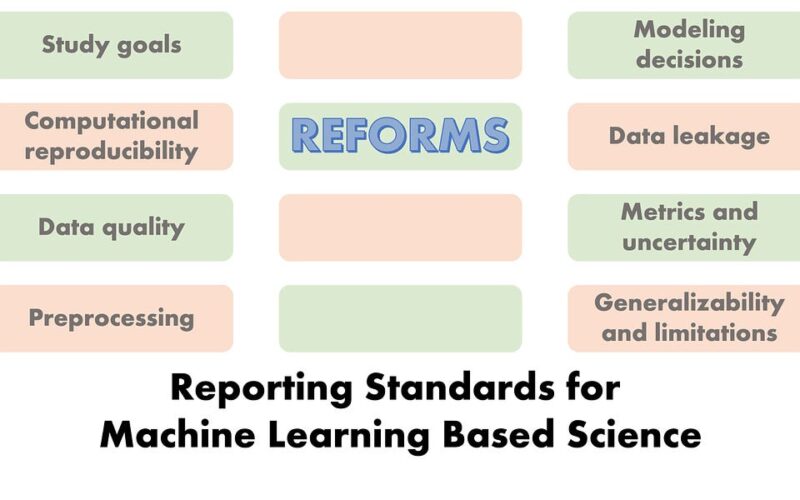

To minimize errors in ML-based science, and to make it more apparent when errors do creep in, we propose REFORMS (Reporting standards for Machine Learning Based Science) in a preprint released today. It is a checklist of 32 items that can be helpful for researchers conducting ML-based science, referees reviewing it, and journals where it is submitted and published.

The checklist was developed by a consensus of 19 researchers across computer science, data science, social sciences, mathematics, and biomedical research. The disciplinary diversity of the authors was essential to ensure that the standards are useful across many fields. A majority of the authors were speakers or organizers at a workshop we organized last year titled “The Reproducibility Crisis in ML-Based Science.” (Videos of the talks and discussions are available on the workshop page.)

The checklist and the paper introducing it are available on our project website. The paper also provides a review of past failures, as well as best practices for avoiding such failures.

A checklist is far from enough to address all the shortcomings of ML-based science. But given the prevalence of errors and the lack of systematic solutions, we think it is urgently needed. We look forward to feedback from the community, which will help inform future iterations of the checklist.
