24 May

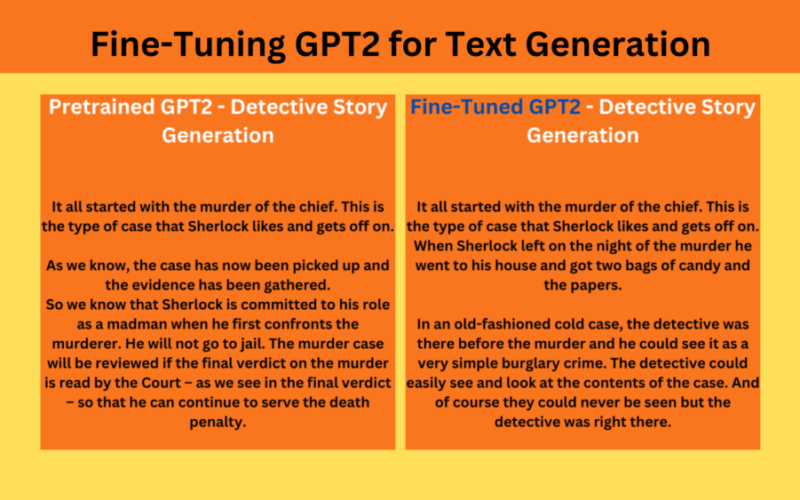

OpenAI released the first GPT model in 2018. Since then, a large body of research and many models have built on the same architecture. Although GPT1 is old by today’s standards, GPT2 still holds up fairly well for many fine-tuning tasks. In this article, we will dive into fine-tuning GPT2 for text generation. In particular, we will teach the model to generate detective stories based on Arthur Conan Doyle’s Sherlock Holmes series.

Figure 1. Text generation example using the trained GPT2 model.

GPTs and similar large language models can be fine-tuned for various text generation tasks. With this article, we…