27 May

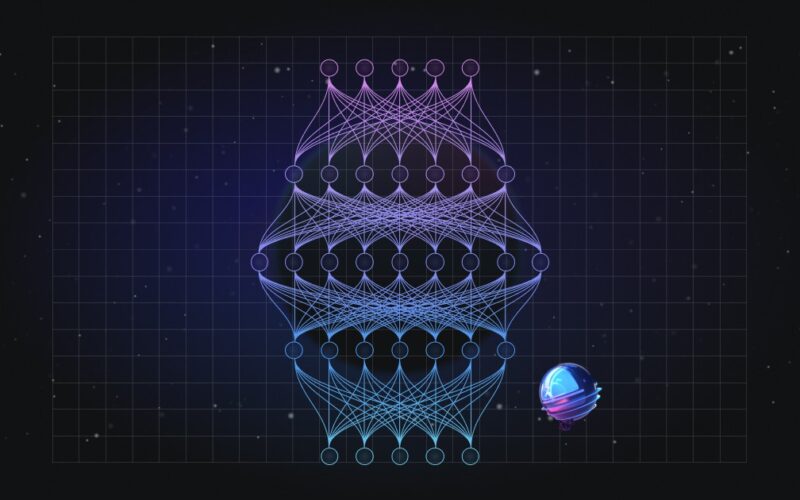

Data, analytics and AI governance is perhaps the most important yet challenging aspect of any data and AI democratization effort. For your data, analytics and AI needs, you've likely deployed two different systems: data warehouses for business intelligence and data lakes for AI. The result is data silos, with data moving between two systems that each have a different governance model.

But data isn't limited to files or tables. You also have assets like dashboards, ML models and notebooks, each with its own permission model, making it difficult to manage access permissions for all these assets consistently. The problem gets…