23 May

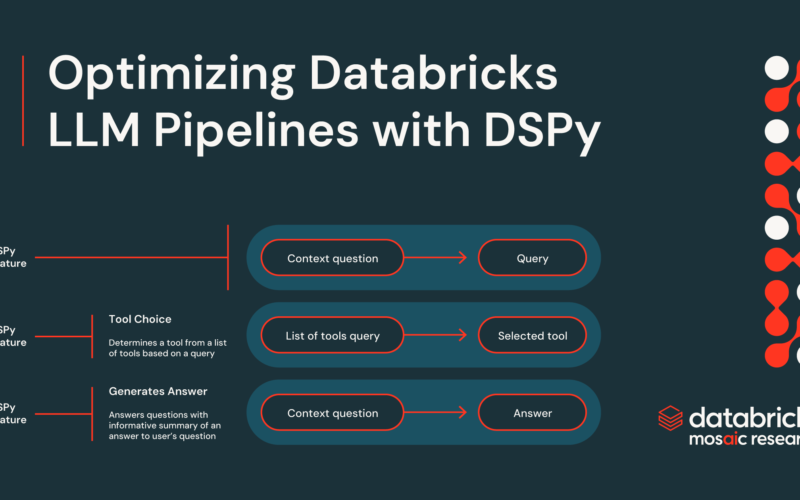

If you’ve been following industry-grade LLM technology over the last year, you’ve likely seen a plethora of frameworks and tools reach production. Startups are building everything from Retrieval-Augmented Generation (RAG) automation to custom fine-tuning services. LangChain is perhaps the most famous of these new frameworks, enabling easy prototyping of chained language model components since Spring 2023. However, a recent, significant development has come not from a startup but from academia. In October 2023, researchers in Databricks co-founder Matei Zaharia’s Stanford research lab released DSPy, a library for compiling declarative language model calls into…