20 Sep

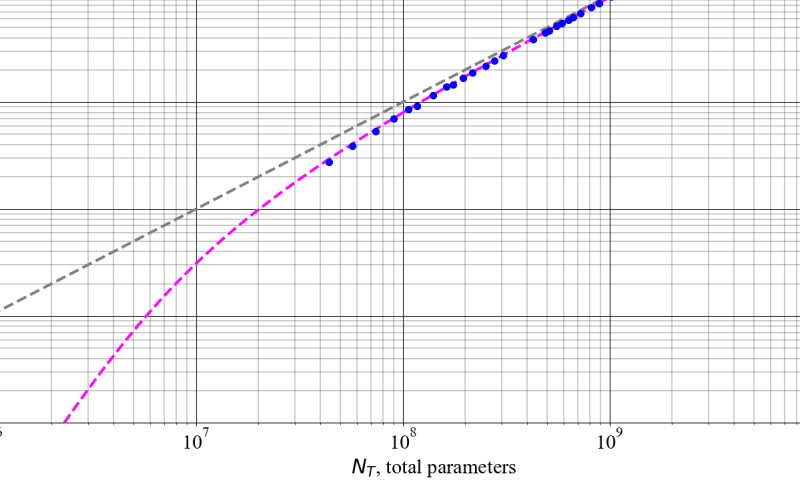

This is a Plain English Papers summary of a research paper called Reconciling Conflicting Scaling Laws in Large Language Models. If you like these kinds of analyses, you should join AImodels.fyi or follow me on Twitter.

Overview

This paper reconciles two influential scaling laws in machine learning: the Kaplan scaling law and the Chinchilla scaling law. The Kaplan scaling law suggests that model performance scales as a power law with respect to model size and compute. The Chinchilla scaling law suggests that, for a fixed compute budget, performance improves more efficiently when model size and training dataset size are scaled up together. The paper aims to resolve…
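To make the contrast between the two laws concrete, here is a minimal Python sketch of their functional forms. It assumes the widely cited fits from the original papers, not anything taken from the paper summarized here. For Kaplan et al. (2020) it uses L(N) = (N_c / N)^α_N with N_c ≈ 8.8×10^13 and α_N ≈ 0.076. For Hoffmann et al. (2022, "Chinchilla") it uses L(N, D) = E + A/N^α + B/D^β with E = 1.69, A = 406.4, B = 410.7, α = 0.34, β = 0.28. It also assumes the common approximation that training compute is C ≈ 6ND FLOPs. All function names are hypothetical and the constants are illustrative only.

```python
import math

# Assumed Chinchilla-style parametric loss L(N, D) = E + A / N**alpha + B / D**beta.
# Constants are the widely cited Hoffmann et al. (2022) fit; illustrative only.
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def chinchilla_loss(n_params: float, n_tokens: float) -> float:
    """Loss as a function of parameter count N and training tokens D."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def kaplan_loss(n_params: float, n_c: float = 8.8e13, alpha_n: float = 0.076) -> float:
    """Assumed Kaplan-style power law in (non-embedding) parameters: L = (N_c / N)**alpha_N."""
    return (n_c / n_params) ** alpha_n


def compute_optimal_params(compute: float) -> float:
    """Closed-form N minimizing chinchilla_loss subject to C = 6 * N * D.

    Setting dL/dN = 0 with D = C / (6N) gives
    N_opt = (alpha*A / (beta*B))**(1/(alpha+beta)) * (C/6)**(beta/(alpha+beta)).
    """
    g = (ALPHA * A / (BETA * B)) ** (1.0 / (ALPHA + BETA))
    return g * (compute / 6.0) ** (BETA / (ALPHA + BETA))


def grid_search_params(compute: float, steps: int = 4000) -> float:
    """Brute-force check: scan N on a log grid, set D = C / (6N), keep the lowest loss."""
    best_n, best_loss = 0.0, math.inf
    for i in range(steps + 1):
        n = 10 ** (6 + 8 * i / steps)  # N from 1e6 to 1e14 parameters
        d = compute / (6.0 * n)        # tokens affordable at this model size
        loss = chinchilla_loss(n, d)
        if loss < best_loss:
            best_n, best_loss = n, loss
    return best_n


if __name__ == "__main__":
    for c in (1e21, 1e23):
        n_opt = compute_optimal_params(c)
        d_opt = c / (6.0 * n_opt)
        print(f"C={c:.0e}: N_opt={n_opt:.2e}, D_opt={d_opt:.2e}, tokens/param={d_opt / n_opt:.1f}")
```

Under these assumed Chinchilla constants the compute-optimal parameter count grows roughly as C^0.45, so parameters and tokens grow at similar rates. Kaplan et al. instead reported compute-optimal model size growing roughly as C^0.73, which is the kind of disagreement the paper sets out to reconcile.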