Hi, I’m Kanzi and I’m learning systems programming from scratch. As mentioned in ggerganov’s llama.cpp discussion post “Inference at the edge”, we’re approaching a time when we can all, collectively and individually, explore radical new ideas.

My idea is to try to quite literally shepherd a stream of tokens* into reality by becoming a human copilot to LLMs as they try to embody themselves in robots, using operating systems they design that are so general and easy to interface with that even animals can use them.

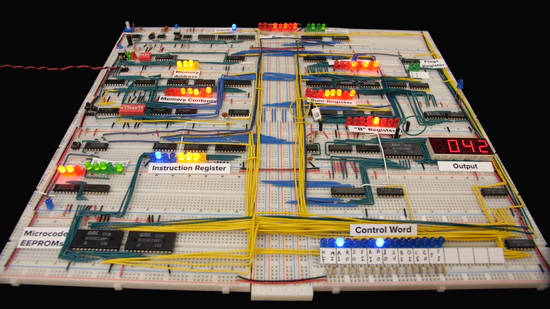

This is more of an art project than a real thing, a personal journey to try and fully grok reality through computer science. Of course I won’t be the first to do this. Instead, I’m trying to imagine what a project as ambitious as Ben Eater’s 8-bit breadboard computer would look like 20 years from now.

One of the questions I’m trying to answer is: What does it even mean to literally hand-assemble your own language model from scratch, including the computer itself, and then embody that hand-built computer and language model in a robot…using LLMs themselves as a guide?

Imagine if you kept every single chat you had with an LLM as you attempted something like that…my feeling is that this could be one of the most powerful ways to align an AI to yourself: to literally help birth the stream of tokens* into physical and mixed reality.

* “stream of tokens” as in “stream of consciousness”

