OpenAI’s latest model, o1, has been touted as a huge leap forward. But after about a week of hands-on use and conversations with others in the community, it’s clear that while o1 does some things better, it also stumbles hard, particularly in the areas that matter most to me and, I suspect, to most of you.

On paper, o1 brings some big improvements. It introduces a new reasoning paradigm, generating long chains of thought before delivering a final answer. This approach suggests a model designed for deeper, more thoughtful problem-solving. And with its two versions—o1-preview and the faster, smaller o1-mini—OpenAI aimed to balance performance with accessibility.

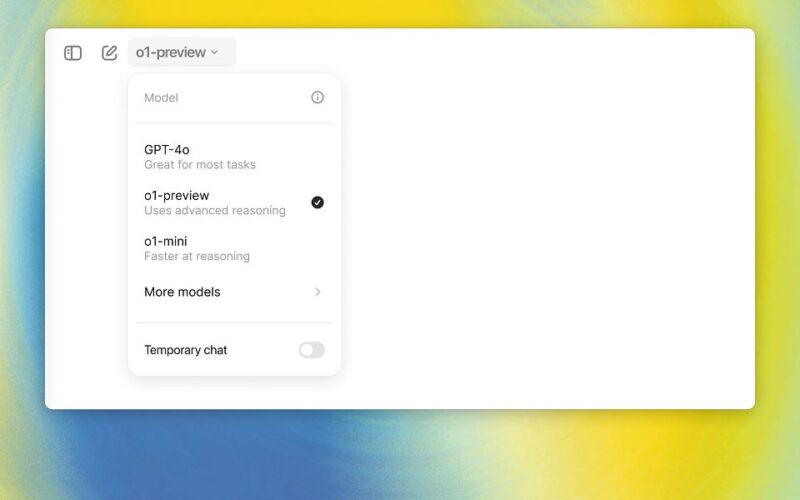

But the reality of using o1 is more frustrating than groundbreaking. The model’s coding abilities have improved modestly, but that gain is offset by its slow response times. Worse, the overall user experience is mega clunky. Switching models to fine-tune responses or save tokens is a tedious chore, especially in the chat interface. To me, this failure in execution overshadows o1’s supposed strengths and raises questions about OpenAI’s direction.

In fact, I’d call this OpenAI’s second product miss in a row (after voice, which still hasn’t rolled out?). That’s great news for competitors like Anthropic, which seems more focused on delivering usable AI with a kickass UX built around Artifacts.

By the way, a lot of info in this post comes from Tibor Blaho – be sure to give him a follow!

As I mentioned, o1 comes in two flavors: o1-preview and o1-mini. The preview version is an early checkpoint of the full model, while o1-mini is the streamlined, faster option expected to be available to free-tier users. In theory, o1-mini should excel at STEM and coding tasks. Both versions use the same tokenizer as GPT-4o, so input text is split into tokens the same way across all three models.

