Deephaven is a powerful analytics engine that makes processing large data more intuitive than ever. Iceberg is a table format that provides fast, efficient, and scalable data storage. Combining the two is like bringing Holmes and Watson together to solve a mystery. In this blog, we’ll explore Deephaven’s new Iceberg integration, why it matters, how to use it, and what’s to come.

Deephaven already has integrations with SQL, Parquet, Kafka, and CSV, which can all be used as storage backends for a Deephaven-powered application. Now Iceberg is part of that list as well. If you’re looking for a scalable, efficient, reliable, and cloud-native way to store your data and fetch it into Deephaven, look no further.

To follow along with this blog, you’ll need Docker. The setup uses Docker Compose to manage the services, so check out the Docker and Docker Compose documentation if you’re unfamiliar with them.

Iceberg is now available as a storage backend for Deephaven, providing a scalable and efficient cloud-native storage mechanism for powerful applications.

To use Deephaven in tandem with Iceberg, you’ll need a configuration that allows the two to work together. Docker Compose is perfect for this. Below is an extended version of the YAML file found in Iceberg’s Spark Quickstart—it adds Deephaven as a service and makes it part of the iceberg_net Docker network so the services can communicate.

docker-compose.yml

```yaml
services:
  spark-iceberg:
    image: tabulario/spark-iceberg
    container_name: spark-iceberg
    build: spark/
    networks:
      iceberg_net:
    depends_on:
      - rest
      - minio
    volumes:
      - ./warehouse:/home/iceberg/warehouse
      - ./notebooks:/home/iceberg/notebooks/notebooks
    environment:
      - AWS_ACCESS_KEY_ID=admin
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
    ports:
      - 8888:8888
      - 8081:8080
      - 11000:10000
      - 11001:10001
  rest:
    image: tabulario/iceberg-rest
    container_name: iceberg-rest
    networks:
      iceberg_net:
    ports:
      - 8181:8181
    environment:
      - AWS_ACCESS_KEY_ID=admin
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
      - CATALOG_WAREHOUSE=s3://warehouse/
      - CATALOG_IO__IMPL=org.apache.iceberg.aws.s3.S3FileIO
      - CATALOG_S3_ENDPOINT=http://minio:9000
  minio:
    image: minio/minio
    container_name: minio
    environment:
      - MINIO_ROOT_USER=admin
      - MINIO_ROOT_PASSWORD=password
      - MINIO_DOMAIN=minio
    networks:
      iceberg_net:
        aliases:
          - warehouse.minio
    ports:
      - 9001:9001
      - 9000:9000
    command: ['server', '/data', '--console-address', ':9001']
  mc:
    depends_on:
      - minio
    image: minio/mc
    container_name: mc
    networks:
      iceberg_net:
    environment:
      - AWS_ACCESS_KEY_ID=admin
      - AWS_SECRET_ACCESS_KEY=password
      - AWS_REGION=us-east-1
    entrypoint: >
      /bin/sh -c "
      until (/usr/bin/mc config host add minio http://minio:9000 admin password) do echo '...waiting...' && sleep 1; done;
      /usr/bin/mc mb minio/warehouse;
      /usr/bin/mc policy set public minio/warehouse;
      tail -f /dev/null
      "
  deephaven:
    image: ghcr.io/deephaven/server:latest
    networks:
      iceberg_net:
    ports:
      - '${DEEPHAVEN_PORT:-10000}:10000'
    volumes:
      - ./data:/data
    environment:
      - START_OPTS=-Xmx16g -DAuthHandlers=io.deephaven.auth.AnonymousAuthenticationHandler

networks:
  iceberg_net:
```
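Each host port in the ports lists can be published by only one service. The Spark service maps its container ports 8080, 10000, and 10001 to host ports 8081, 11000, and 11001, which keeps host port 10000 free for Deephaven (presumably the reason for the remapping). The Deephaven entry also uses Compose's `${DEEPHAVEN_PORT:-10000}` interpolation, so setting DEEPHAVEN_PORT moves it to another host port. The following sketch checks that no two services claim the same host port. It copies the mappings by hand rather than parsing the YAML file, and it handles only the `${NAME:-default}` form of interpolation.

```python
import os
import re

# Host:container port mappings copied by hand from docker-compose.yml.
# The Deephaven entry keeps Compose's ${VAR:-default} syntax unresolved.
PORTS = {
    "spark-iceberg": ["8888:8888", "8081:8080", "11000:10000", "11001:10001"],
    "rest": ["8181:8181"],
    "minio": ["9001:9001", "9000:9000"],
    "deephaven": ["${DEEPHAVEN_PORT:-10000}:10000"],
}

# Matches only the ${NAME:-default} form; other Compose forms are not handled.
_VAR = re.compile(r"\$\{(\w+):-([^}]*)\}")


def interpolate(value, env=os.environ):
    """Replace ${NAME:-default} with env[NAME], or the default if NAME is unset or empty."""
    return _VAR.sub(lambda m: env.get(m.group(1)) or m.group(2), value)


def host_ports(ports, env=os.environ):
    """Return {host_port: service}; raise ValueError if two services publish the same host port."""
    taken = {}
    for service, mappings in ports.items():
        for mapping in mappings:
            host = int(interpolate(mapping, env).split(":")[0])
            if host in taken:
                raise ValueError(f"host port {host} used by {taken[host]} and {service}")
            taken[host] = service
    return taken


if __name__ == "__main__":
    print(sorted(host_ports(PORTS)))
```

If you change a port mapping, re-running the check catches a clash before `docker compose` reports a bind error.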

You can start these services with a single command, `docker compose up`, run from the directory that contains `docker-compose.yml`:

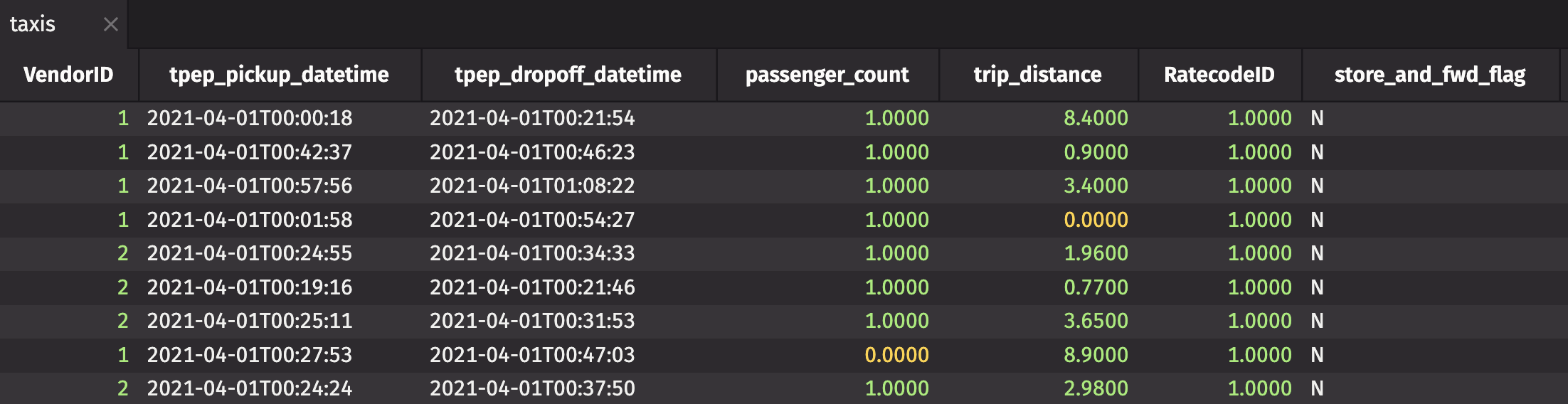

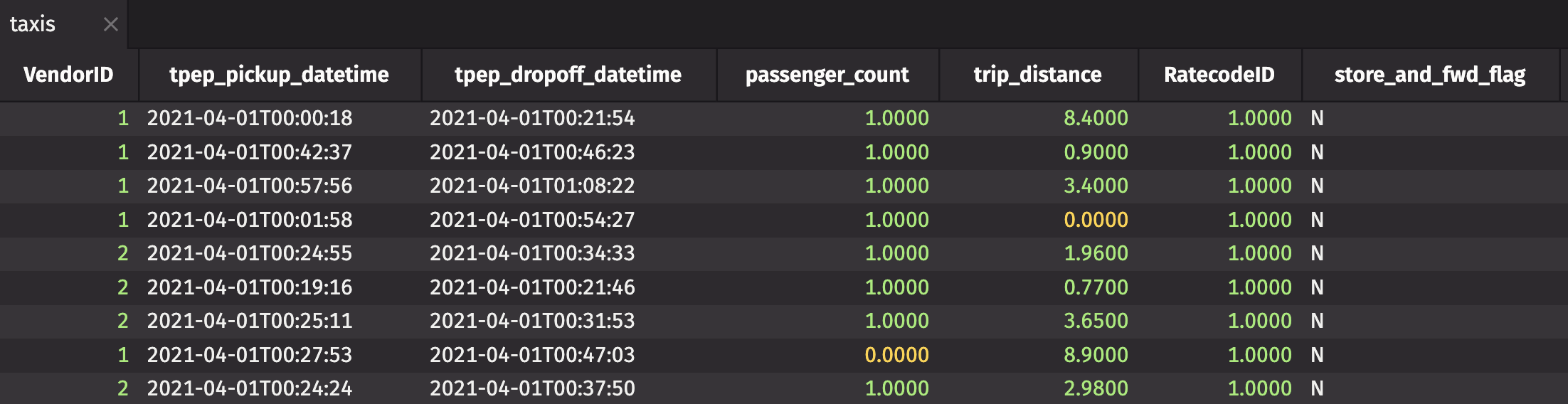
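After the containers start, a quick way to confirm that each service is listening is to attempt a TCP connection to its published port from the host. This is a minimal sketch that uses only Python's standard library. The port list is copied from docker-compose.yml and assumes DEEPHAVEN_PORT is unset. An open port only shows that something is listening, not that the service has finished initializing.

```python
import socket

# Host ports published by docker-compose.yml (assumes the default DEEPHAVEN_PORT).
SERVICES = {
    "Jupyter (spark-iceberg)": 8888,
    "Iceberg REST catalog": 8181,
    "MinIO API": 9000,
    "MinIO console": 9001,
    "Deephaven": 10000,
}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        status = "up" if is_listening("localhost", port) else "not reachable"
        print(f"{name:<25} localhost:{port:<6} {status}")
```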

Iceberg stores data in tables, just like Deephaven does. Iceberg tables live inside catalogs, similar to how files live in directories in a filesystem. Getting a table into an Iceberg catalog is easy: the Docker Compose configuration above includes a Spark server with Iceberg support, which you can use from Jupyter at http://localhost:8888. Head there and open the Iceberg - Getting Started notebook. The first four code blocks in that notebook create an Iceberg table called nyc.taxis. You’ll read this table into Deephaven in the next section.
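An identifier such as nyc.taxis combines a namespace (nyc) with a table name (taxis). The toy model below only illustrates that catalog, namespace, and table hierarchy; it is not part of Deephaven or Iceberg. It assumes, as Iceberg identifiers are commonly read, that the last dot-separated component is the table name and everything before it is the namespace, which may itself have several levels.

```python
from dataclasses import dataclass, field


def split_identifier(identifier: str) -> tuple:
    """Split 'nyc.taxis' into ('nyc', 'taxis'); the last dot separates the table name."""
    namespace, sep, table = identifier.rpartition(".")
    if not sep or not namespace or not table:
        raise ValueError(f"expected '<namespace>.<table>', got {identifier!r}")
    return namespace, table


@dataclass
class ToyCatalog:
    """Toy stand-in for a catalog: namespace -> set of table names. Stores no data."""

    namespaces: dict = field(default_factory=dict)

    def create_table(self, identifier: str) -> None:
        namespace, table = split_identifier(identifier)
        self.namespaces.setdefault(namespace, set()).add(table)

    def tables(self, namespace: str) -> list:
        # Return fully qualified identifiers, sorted for stable output.
        return sorted(f"{namespace}.{t}" for t in self.namespaces.get(namespace, ()))


catalog = ToyCatalog()
catalog.create_table("nyc.taxis")
print(catalog.tables("nyc"))  # ['nyc.taxis']
```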

After creating the nyc.taxis table, head over to the Deephaven IDE at http://localhost:10000/ide in your preferred browser. To interact with an Iceberg catalog from Deephaven, you’ll first need to create an IcebergCatalogAdapter. You can create one in two different ways:

- adapter_s3_rest, for a REST catalog backed by S3-compatible storage such as MinIO

- adapter_aws_glue, for an AWS Glue catalog

Each method is used below.

With a REST catalog and MinIO

The following code block uses adapter_s3_rest to create an IcebergCatalogAdapter. It requires the catalog URI, warehouse location, region name, access key ID, secret access key, and an endpoint override.

```python
from deephaven.experimental import iceberg

# "rest" and "minio" are Docker Compose service names on the iceberg_net network.
local_adapter = iceberg.adapter_s3_rest(
    name="minio-iceberg",
    catalog_uri="http://rest:8181",
    warehouse_location="s3a://warehouse/wh",
    region_name="us-east-1",
    access_key_id="admin",
    secret_access_key="password",
    end_point_override="http://minio:9000",
)
```
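The catalog_uri and end_point_override values use the Docker Compose service names rest and minio, which resolve only inside the iceberg_net network, so they work from the Deephaven container but not from your host. If creating the adapter fails, one way to check connectivity from the Deephaven IDE is to call the catalog's configuration endpoint. This sketch assumes the catalog implements the Iceberg REST specification's `GET /v1/config` route; the helper names are hypothetical and the code uses only the standard library.

```python
import json
import urllib.request


def rest_config_url(catalog_uri):
    """Build the URL of the Iceberg REST catalog's GET /v1/config endpoint."""
    return catalog_uri.rstrip("/") + "/v1/config"


def fetch_rest_config(catalog_uri, timeout=5.0):
    """GET /v1/config and return the decoded JSON body; raises on network or HTTP errors."""
    with urllib.request.urlopen(rest_config_url(catalog_uri), timeout=timeout) as resp:
        return json.load(resp)


# From inside the Deephaven container the catalog is http://rest:8181;
# from the host machine, use the published port instead: http://localhost:8181.
# print(fetch_rest_config("http://rest:8181"))
```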

With the catalog adapter in hand, you can now query namespaces, tables, and snapshots in an Iceberg catalog:

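```python
# Namespaces in the catalog
namespaces = local_adapter.namespaces()
# Tables in the "nyc" namespace
tables = local_adapter.tables("nyc")
# Snapshot history of the nyc.taxis table
snapshots = local_adapter.snapshots("nyc.taxis")
```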
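In Iceberg, each commit to a table (an append, overwrite, or delete) produces a new, immutable snapshot, and a reader that does not request a specific snapshot sees the most recent one. The following toy model illustrates that idea only. It is not the Iceberg or Deephaven API, it tracks whole file lists rather than Iceberg's manifests, and it uses sequential snapshot IDs, whereas real Iceberg generates its own IDs.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    operation: str  # e.g. "append" or "overwrite"
    files: tuple    # data files visible in this snapshot


@dataclass
class ToyTable:
    """Toy snapshot log: commits append snapshots and never modify old ones."""

    snapshots: list = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def current(self):
        """The latest snapshot, or None for an empty table."""
        return self.snapshots[-1] if self.snapshots else None

    def commit(self, operation, files):
        previous = self.current().files if self.current() else ()
        # "append" adds files to the previous snapshot; any other operation replaces them.
        new_files = previous + tuple(files) if operation == "append" else tuple(files)
        self.snapshots.append(Snapshot(next(self._ids), operation, new_files))
        return self.snapshots[-1]
```

Because older snapshots are kept, a reader can still see the table as it was before the latest commit.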

To read an Iceberg table through a REST catalog backed by S3-compatible storage, you also need to define custom IcebergInstructions. In this case, the instructions provide the region name, access key ID, secret access key, and endpoint override that Deephaven uses to read the table’s data files from MinIO:

```python
from deephaven.experimental import s3

# How Deephaven reaches the data files stored in MinIO
s3_instructions = s3.S3Instructions(
    region_name="us-east-1",
    access_key_id="admin",
    secret_access_key="password",
    endpoint_override="http://minio:9000",
)

iceberg_instructions = iceberg.IcebergInstructions(data_instructions=s3_instructions)

taxis = local_adapter.read_table("nyc.taxis", instructions=iceberg_instructions)
```
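The MinIO region, credentials, and endpoint appear in both the adapter call and the S3Instructions call. One way to keep them in sync is to build both sets of keyword arguments in one place, as in this sketch. The two calls spell the endpoint parameter differently in the code above (end_point_override for adapter_s3_rest, endpoint_override for S3Instructions), and the sketch preserves that. Reading the values from AWS_* environment variables is an assumption of this sketch: the docker-compose.yml does not set them for the deephaven service, so the defaults (the MinIO credentials from that file) apply. The helper names are hypothetical.

```python
import os


def minio_settings(env=os.environ):
    """Region and credentials, defaulting to the MinIO values in docker-compose.yml."""
    return {
        "region_name": env.get("AWS_REGION", "us-east-1"),
        "access_key_id": env.get("AWS_ACCESS_KEY_ID", "admin"),
        "secret_access_key": env.get("AWS_SECRET_ACCESS_KEY", "password"),
    }


def adapter_kwargs(settings, endpoint="http://minio:9000"):
    """Keyword arguments for iceberg.adapter_s3_rest (endpoint is end_point_override)."""
    return {
        "name": "minio-iceberg",
        "catalog_uri": "http://rest:8181",
        "warehouse_location": "s3a://warehouse/wh",
        **settings,
        "end_point_override": endpoint,
    }


def s3_kwargs(settings, endpoint="http://minio:9000"):
    """Keyword arguments for s3.S3Instructions (endpoint is endpoint_override)."""
    return {**settings, "endpoint_override": endpoint}


# In the Deephaven IDE these would be used as:
#   local_adapter = iceberg.adapter_s3_rest(**adapter_kwargs(minio_settings()))
#   s3_instructions = s3.S3Instructions(**s3_kwargs(minio_settings()))
```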

With an AWS Glue catalog

To use the AWS Glue catalog adapter, you need an AWS region and credentials. If you are running Deephaven from Docker, mount your AWS credentials as a volume in the Deephaven container. For more information, see the Deephaven documentation.
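Before mounting credentials into the container, you can check on the host that your shared credentials file contains access keys. This sketch assumes the file is at the default location, ~/.aws/credentials, and that you use the default profile. It does not check environment variables, SSO, or other credential sources, and it does not determine where the file should be mounted inside the Deephaven container.

```python
import configparser
from pathlib import Path


def profile_has_keys(path, profile="default"):
    """Return True if the credentials file at path defines both access keys for profile."""
    parser = configparser.ConfigParser()
    if not parser.read(path):  # read() returns the list of files it parsed
        return False
    if not parser.has_section(profile):
        return False
    return all(
        parser.get(profile, key, fallback="").strip()
        for key in ("aws_access_key_id", "aws_secret_access_key")
    )


if __name__ == "__main__":
    path = Path.home() / ".aws" / "credentials"
    print(path, "OK" if profile_has_keys(path) else "missing or incomplete")
```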

adapter_aws_glue creates an IcebergCatalogAdapter from an AWS Glue catalog. It requires a name, catalog URI, and S3 warehouse location:

```python
from deephaven.experimental import s3, iceberg

cloud_adapter = iceberg.adapter_aws_glue(
    name="aws-iceberg",
    catalog_uri="s3://lab-warehouse/nyc",
    warehouse_location="s3://lab-warehouse/nyc",
)
```
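The catalog_uri and warehouse_location values above (s3://lab-warehouse/nyc) are example values; in your own setup, substitute a bucket and prefix that your credentials can access. A small parse like this one, using only the standard library, can catch a malformed URI before you create the adapter. The helper name is hypothetical, and accepting s3a:// as well as s3:// is a choice of this sketch.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split 's3://bucket/prefix' into ('bucket', 'prefix'); reject other schemes."""
    parsed = urlparse(uri)
    if parsed.scheme not in ("s3", "s3a") or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


bucket, prefix = split_s3_uri("s3://lab-warehouse/nyc")
print(bucket, prefix)  # lab-warehouse nyc
```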

When using an AWS Glue catalog, custom Iceberg instructions are not required, so you can read the table directly:

```python
namespaces = cloud_adapter.namespaces()
tables = cloud_adapter.tables("nyc")
snapshots = cloud_adapter.snapshots("nyc.taxis")

taxis = cloud_adapter.read_table("nyc.taxis")
```

Want to see Iceberg in action? Check out this developer demo from Larry:

This blog has demonstrated how to import Iceberg tables into Deephaven. While the examples are simple, they illustrate the complete workflow for reading Iceberg tables into Deephaven. Our Iceberg integration is still in development, so you can expect additional features, improvements, and examples in the future. As a preview, you can look forward to:

- Support for refreshing (ticking) Iceberg tables

- Generic adapters to make it even easier to interact with Iceberg catalogs

- Writing to Iceberg tables

Our Slack community continues to grow! Reach out to us with any questions, comments, or feedback. We’d love to hear from you!
