We recently announced the General Availability of our serverless compute offerings for Notebooks, Jobs, and Pipelines. Serverless compute provides rapid workload startup, automatic infrastructure scaling, and seamless version upgrades of the Databricks runtime. We are committed to continuing to innovate on our serverless offering and to continuously improving price/performance for your workloads. Today we’re excited to make a few announcements that will help improve your serverless cost experience:

- Efficiency improvements that result in a greater than 25% reduction in costs for most customers, especially those with short-duration workloads.

- Enhanced cost observability that helps track and monitor spending at an individual Notebook, Job, and Pipeline level.

- Simple controls (available in the future) for Jobs and Pipelines that will allow you to indicate a preference to optimize workload execution for cost over performance.

- Continued availability of the introductory discounts on our new serverless compute offerings: 50% for Jobs and Pipelines, and 30% for Notebooks.

Efficiency improvements

Based on insights gained from running customer workloads, we’ve implemented efficiency improvements that will enable most customers to achieve a 25% or greater reduction in their serverless compute spend. These improvements primarily reduce the cost of short workloads. The changes will roll out automatically over the coming weeks, so your Notebooks, Jobs, and Pipelines will benefit without any action on your part.

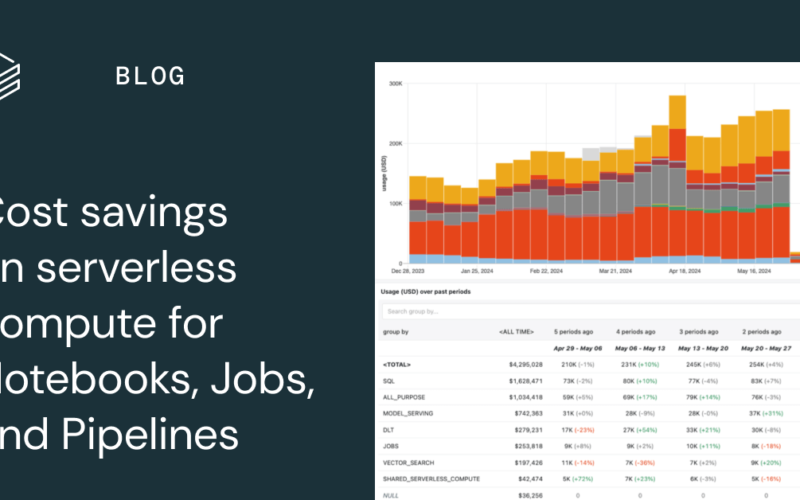

Enhanced cost observability

To make cost management more transparent, we’ve improved our cost-tracking capabilities. All compute costs associated with serverless will now be fully trackable down to the individual Notebook, Job, or Pipeline run. This means you will no longer see shared serverless compute costs that are not attributed to any particular workload. This granular attribution provides visibility into the full cost of each workload, making it easier to monitor and govern expenses. In addition, to simplify cost reporting, we’ve added new fields to the billable usage system table, including Job name, Notebook path, and user identity for Pipelines. We’ve also created a dashboard template that makes it easy to visualize cost trends in your workspaces. You can learn more and download the template here.
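As a rough illustration of what per-workload attribution enables, the sketch below totals usage by workload from a handful of made-up rows. The field names (`workload_type`, `workload_name`, `usage_quantity`), the sample values, and the flat `price_per_unit` are all assumptions for illustration; they are not the actual schema or pricing of the billable usage system table, which you would normally query directly.

```python
from collections import defaultdict

# Hypothetical rows, loosely modeled on per-run usage records.
# Field names and values are illustrative assumptions, not a real schema.
usage_rows = [
    {"workload_type": "JOB", "workload_name": "nightly_etl", "usage_quantity": 2.5},
    {"workload_type": "JOB", "workload_name": "nightly_etl", "usage_quantity": 1.5},
    {"workload_type": "NOTEBOOK", "workload_name": "/Users/a/explore", "usage_quantity": 0.5},
    {"workload_type": "PIPELINE", "workload_name": "orders_pipeline", "usage_quantity": 4.0},
]


def cost_by_workload(rows, price_per_unit):
    """Sum estimated cost per (workload_type, workload_name) pair.

    price_per_unit is a hypothetical flat price per usage unit.
    """
    totals = defaultdict(float)
    for row in rows:
        key = (row["workload_type"], row["workload_name"])
        totals[key] += row["usage_quantity"] * price_per_unit
    return dict(totals)


if __name__ == "__main__":
    # Print workloads from most to least expensive.
    totals = cost_by_workload(usage_rows, price_per_unit=0.5)
    for (kind, name), cost in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{kind:<9} {name:<20} {cost:.2f}")
```

Because every row carries a workload identifier, no cost is left in a shared bucket: each unit of usage lands under exactly one Job, Notebook, or Pipeline.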

Future controls that allow you to indicate a preference for cost optimization

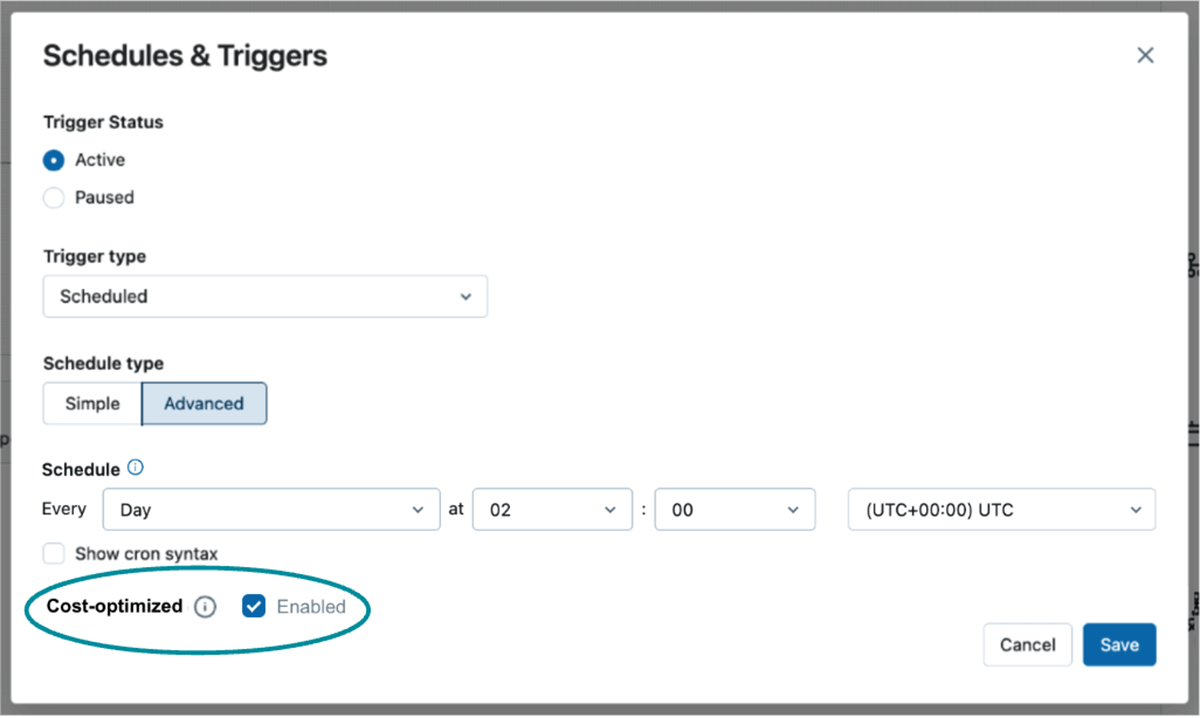

For each of your data platform workloads, you need to determine the right balance between performance and cost. With serverless compute, we are committed to simplifying how you meet your specific workloads’ price/performance goals. Currently, our serverless offering focuses on performance: we optimize infrastructure and manage our compute fleet so that your workloads experience fast startup and short runtimes. This is great for workloads with low-latency needs and for cases where you don’t want to manage or pay for instance pools.

However, we have also heard your feedback about the need for more cost-effective options for certain Jobs and Pipelines. For some workloads, you are willing to trade some startup time or execution speed for lower costs. In response, we will be introducing a set of simple, straightforward controls that allow you to prioritize cost savings over performance. This new flexibility will let you tailor your compute strategy to the specific price and performance requirements of each workload. Stay tuned for more updates in the coming months, and sign up for the preview waitlist here.

Unlock 50% Savings on Serverless Compute – Limited-Time Introductory Offer!

Take advantage of our introductory discounts: get 50% off serverless compute for Jobs and Pipelines and 30% off for Notebooks, available until October 31, 2024. This limited-time offer is the perfect opportunity to explore serverless compute at a reduced cost—don’t miss out!

Start using serverless compute today:

- Enable serverless compute in your account on AWS or Azure

- Make sure your workspace is enabled for Unity Catalog and is in a supported region on AWS or Azure

- For existing PySpark workloads, ensure they are compatible with shared access mode and DBR 14.3+

- Follow the specific instructions for connecting your Notebooks, Jobs, and Pipelines to serverless compute

- Leverage serverless compute from any third-party system using Databricks Connect. Develop locally from your IDE, or seamlessly integrate your applications with Databricks in Python for a smooth, efficient workflow.
