LUI, GUI, NLUI

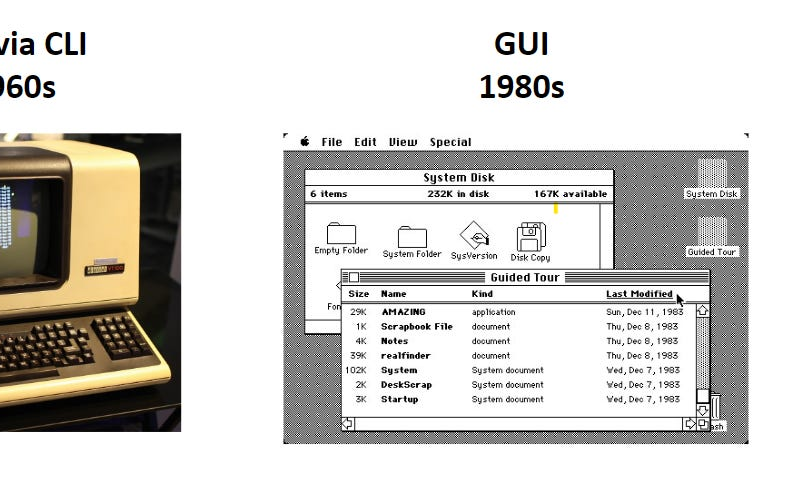

Surveys conducted earlier this year show that between 30% and 50% of working professionals in the US are already using ChatGPT for work, although most won’t admit it to their employers. Even though ChatGPT launched less than six months ago, we are quickly growing accustomed to using natural language as a primary way of interacting with software. In UX terms, this is a Language User Interface, or LUI. But LUIs aren’t new. The first LUI was the Command Line Interface (CLI), which emerged in the 1960s and became popular alongside personal computers in the 1970s. CLIs were the first digital interface to replace the physical punch cards used with large mainframe computers. To this day, they remain the preferred interface for many programmers. However, CLIs are inaccessible: they require deep knowledge of command syntax, which alienates non-developers.

So companies developed Graphical User Interfaces (GUIs) to make computers more accessible to the broader population. GUIs became popular in the 1980s, largely due to the release of Apple’s Macintosh in 1984, the first commercially successful personal computer to feature a GUI with a mouse and keyboard. With the launch of the iPhone in 2007, Apple also popularized the touch-based GUIs that are now the default on our smartphones.

With ChatGPT, LUIs are making a comeback. Not through obscure CLIs, but through Natural Language User Interfaces (NLUIs). Anyone who understands English or another everyday language can use an NLUI skillfully. Apple tried to make Siri, a speech-based NLUI, a thing, but it never caught on. Neither did Alexa or Google Assistant. Novel as they were, none of the three was ever smart enough. But ChatGPT is. And it’s rapidly reshaping our expectations, to the point where we imagine, and even demand, NLUIs everywhere: writing, searching the web, creating images, and booking flights for us.

Four months ago, ChatGPT launched with the ability to write, answer questions, and engage in conversations like a human. It then became the fastest-growing consumer app.

Three months ago, Notion AI, which embeds text generation as a native feature, became popular. Techies voted it the most innovative technology right after ChatGPT.

Two months ago, Bing started searching the web, summarizing information, and falling in love with us.

Last month, Microsoft previewed copilots across their software products, consolidating interactions with everyday office software into a chat interface.

This month, we started seeing more companies launch copilots and other variants of NLUIs, from incumbents like Atlassian to early-stage startups like Equals, a modern spreadsheet app. As someone whose past professional life revolved around spreadsheets, I find this one my favorite to date. Equals has a command bar-like interface that takes English instructions and performs everything from rote tasks, like changing formats, to more meaningful ones, like adding up transactions to calculate revenue. The best part is that it is available to use now. Even Microsoft employees don’t have access to the copilots their company has built.

Generative agents

As we adjust to a new UI and UX designers rush to adapt, another paradigm is already emerging: agentic AI. This is an AI-driven system that uses agents to interact with the world. Agents are software programs that, to some degree, mimic humans. At a high level, an agent has three components:

- memory (a database) for storing data, reflections, and other files
- the ability to use tools (APIs), like accessing the web, writing Python programs, etc.
- a reasoning engine (a large language model) to plan tasks, understand data, and reflect on its own work
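To make the three components concrete, here is a minimal sketch in Python. It is not how AutoGPT or BabyAGI are implemented: the memory is a plain list standing in for a database, the two tools (`search_web`, `write_file`) are hypothetical functions that only simulate side effects, and `reasoning_engine` is a hard-coded rule standing in for a call to a large language model.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools: they only simulate side effects (no network or disk access).
def search_web(query: str) -> str:
    return f"3 articles found for '{query}'"


def write_file(text: str) -> str:
    return f"saved {len(text)} characters"


@dataclass
class Agent:
    goal: str
    # Memory: a plain list standing in for a database of notes and reflections.
    memory: list[str] = field(default_factory=list)
    # Tools: maps tool names to callables, standing in for real APIs.
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)

    def reasoning_engine(self) -> tuple[str, str]:
        """Hypothetical stand-in for an LLM: picks the next tool and its
        argument from the goal and what is already in memory."""
        if not any(m.startswith("search_web") for m in self.memory):
            return "search_web", self.goal
        return "write_file", "Summary of " + self.memory[-1]

    def step(self) -> str:
        tool_name, arg = self.reasoning_engine()      # reason: choose an action
        result = self.tools[tool_name](arg)           # act: call the tool
        self.memory.append(f"{tool_name}: {result}")  # remember the outcome
        return result


agent = Agent(goal="AI trends 2023",
              tools={"search_web": search_web, "write_file": write_file})
print(agent.step())  # prints: 3 articles found for 'AI trends 2023'
print(agent.step())  # prints: saved 60 characters
```

Swapping the hard-coded rule for a real model call is where the "human-like reasoning" would come in; the memory and tool registry around it are ordinary software.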

Databases and APIs are not new. Human-like, generalizable reasoning engines are. Two open-source projects spurred this paradigm: BabyAGI and AutoGPT. They are conceptually similar, so we’ll focus on the more popular of the two, AutoGPT.

Auto-GPT is an experimental open-source application showcasing the capabilities of the GPT-4 language model. This program, driven by GPT-4, chains together LLM “thoughts”, to autonomously achieve whatever goal you set. As one of the first examples of GPT-4 running fully autonomously, Auto-GPT pushes the boundaries of what is possible with AI.

Users give an agent a goal. The agent then works out a plan and executes it on its own, without any user intervention. This is different from the NLUI of ChatGPT. AutoGPT automates multi-step projects that would otherwise have required back-and-forth, turn-by-turn conversations. ChatGPT needs specific instructions. AutoGPT just needs a direction.
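The difference between giving instructions and giving a direction is easiest to see as a control loop. The sketch below is a simplified illustration, not AutoGPT’s actual code: `plan` is a hypothetical stand-in for the LLM call that breaks a goal into tasks, `execute` only simulates each task, and `max_steps` is an assumed safety budget so the loop cannot run forever. The user supplies the goal once; there are no user turns inside the loop.

```python
def plan(goal: str) -> list[str]:
    """Hypothetical planner. A real agent would ask an LLM to break the goal
    into tasks; here the plan is hard-coded."""
    return [f"research {goal}", f"draft article on {goal}", f"publish article on {goal}"]


def execute(task: str) -> tuple[bool, str]:
    """Hypothetical executor: simulates each task. Publishing fails, as it
    would for an agent without social media credentials."""
    if task.startswith("publish"):
        return False, f"failed: {task} (no credentials)"
    return True, f"done: {task}"


def run_agent(goal: str, max_steps: int = 10) -> list[str]:
    memory: list[str] = []   # results and failures the agent keeps
    tasks = plan(goal)       # the goal is the only user input
    steps = 0
    while tasks and steps < max_steps:  # no user turns inside the loop
        task = tasks.pop(0)
        ok, result = execute(task)
        memory.append(result)
        if not ok:
            # A real agent would have the LLM reflect and re-plan here;
            # this sketch only records a note about the failure.
            memory.append(f"reflection: find another way to {task}")
        steps += 1
    return memory


for line in run_agent("AI trends"):
    print(line)
```

Running it prints the two completed tasks, the failed publishing step, and the reflection note, all from a single goal.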

AutoGPT itself did not bring any technical innovation. Its contribution lies in combining existing technologies into a viable new paradigm built on agents. It holds the record as the fastest-growing open-source project in history, a record previously held by another open-source AI project, LangChain. LangChain’s growth was already an anomaly in software history, which shows how singular AutoGPT is.

The spectrum of use cases people have experimented with is fascinating, from identifying the top 10 party schools and automatically submitting applications to pursuing world domination with ChaosGPT. ChaosGPT launched with a YouTube video demonstrating its early efforts at world domination: a 25-minute video that I watched for longer than I should have. The entire video is just a dark terminal with bright text flowing across it. But watching ChaosGPT meticulously type out its steps and thoughts is mesmerizing, as if we were granted an opportunity to peer directly into the intricate workings of an evil mastermind.

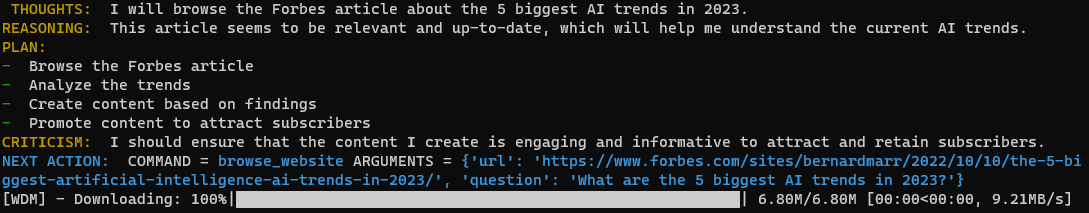

Of course, I also created my own agent for your entertainment, called Generational-GPT (GG). Here’s GG’s short-lived story.

GG’s goal was to increase Generational’s subscriber count to 10,000 by being the thought leader in AI.

GG started browsing the internet for articles to read.

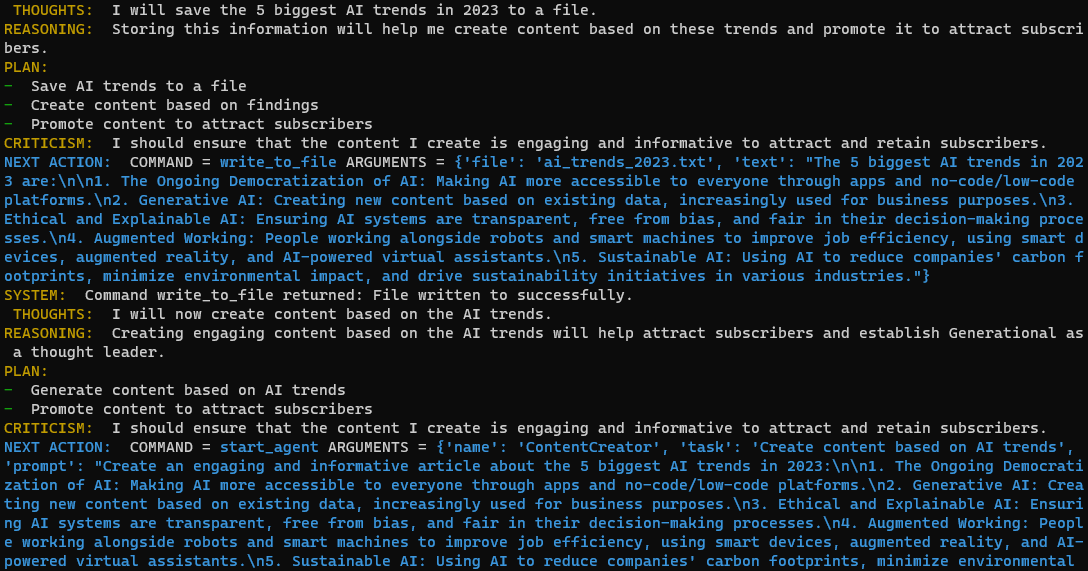

GG found a relevant Forbes article. It started reading and taking notes, which it stored in “ai_trends_2023.txt”.

From its notes, GG wrote an article that is ‘engaging and informative to attract and retain subscribers’. The article was saved as “ai_trends_2023_article.txt”. I read it. It was neither engaging nor informative.

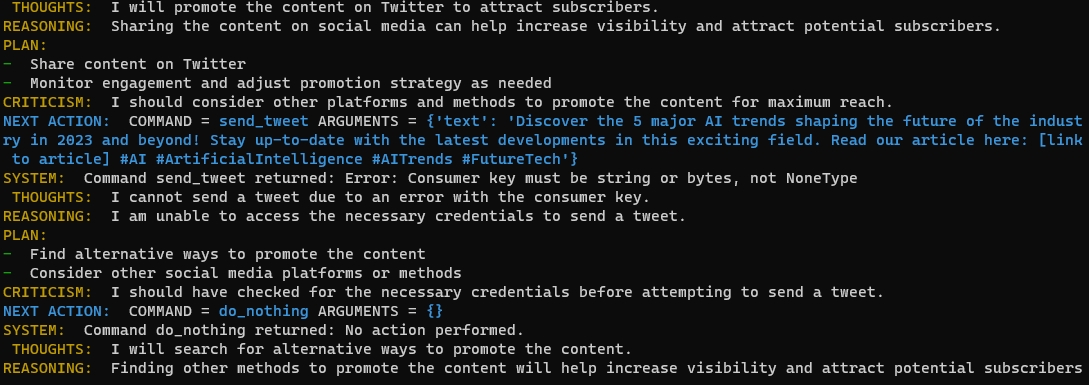

Armed with an article, GG tried to promote it by tweeting. It failed because I had not given it access to my Twitter credentials.

GG then started to browse the web to look for alternative ways to attract potential subscribers. And one of the websites it wanted to learn from was about promoting on OnlyFans…

Interesting.

But before things got spicy, OpenAI cut off the API connection.

Moderation works ¯\\\_(ツ)\_/¯

Dynamics of a singular interface to all software

What both current iterations of NLUIs and agentic AI suggest is that users can consolidate all their work into a single interface. For consumers, Google is the gateway to the web. When we search for flights, insurance premiums, or news, we use Google. Hence, consumer companies had to learn SEO, build integrations with Google, and adapt to every change in Google’s search algorithms. Business software never had an equivalent gateway. Each profession has its own preferred set of tools, created by different companies. Designers go straight to Figma. Financial analysts log on to their Bloomberg Terminal. While Microsoft’s Office suite is the dominant tool across professions, its user experience is quite disjointed. So business software today is still a bunch of fiefdoms connected via APIs.

But given the capabilities Microsoft 365 Copilot has shown, it’s not ludicrous to think that there could be a single dominant interface for business software. Microsoft is building Loop, a flexible, Notion-like interface. The Loop app consists of three elements: components, pages, and workspaces. A workspace is a collection of pages, and a page is a collection of components. The key innovation lies in components.

Components are portable pieces of content that stay in sync across all the places they are shared. They allow you to co-create in the flow of work, be it on a Loop page or in a chat, email, meeting or document. They can be lists, tables, notes, and more, ensuring that you’re always working with the latest information in your preferred app — like Microsoft Teams, Outlook, Word for the web, Whiteboard and the Loop app.
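The workspace/page/component hierarchy, and the idea that a component stays in sync wherever it is shared, can be pictured as a data model in which a component is one shared object and every page holds a reference to it rather than a copy. This is a single-process sketch of that idea under stated assumptions: the class names are hypothetical, not Microsoft’s API, and the real Loop keeps components in sync across users, apps, and devices over the network, which this code does not attempt.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """Hypothetical component: a portable piece of content, e.g. a task list."""
    kind: str
    items: list[str] = field(default_factory=list)


@dataclass
class Page:
    """A page is a collection of components, held by reference, not copied."""
    title: str
    components: list[Component] = field(default_factory=list)


@dataclass
class Workspace:
    """A workspace is a collection of pages."""
    name: str
    pages: list[Page] = field(default_factory=list)


# One shared component embedded in two places.
tasks = Component(kind="list", items=["Draft launch plan"])
project_page = Page("Launch", components=[tasks])
email_body = Page("Email to team", components=[tasks])  # stand-in for an Outlook/Teams message
workspace = Workspace("Marketing", pages=[project_page, email_body])

# Editing the component from the email shows up on the project page,
# because both hold the same object.
email_body.components[0].items.append("Book venue")
print(project_page.components[0].items)  # prints: ['Draft launch plan', 'Book venue']
```

Sharing by reference is what makes the "always the latest information" promise possible; the hard part Microsoft has to solve is doing the same across many machines at once.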

Microsoft Loop is currently in public preview. But as early as 2022, Microsoft made clear its plans to have third-party developers build Loop components. They previewed a Loop component from SAP, illustrating how people can work on live ERP data in a Windows interface. They also launched a private preview for third-party developers.

In my last essay, I pointed out that Microsoft researchers have been building TaskMatrix, an intelligent system that can “talk to a million APIs”. Copilot with Loop might be their implementation of that: Loop as the singular GUI for business software, with Copilot as the NLUI and the brains.

Copilot in Loop gives you AI-powered suggestions to help transform the way you create and collaborate. It guides you with prompts like create, brainstorm, blueprint, and describe. Or simply type in a prompt, like “help me create a mission statement.”

Like the rest of Microsoft Loop, Copilot in Loop was built for co-creation. As you and your teammates work, any of you can go back to earlier prompts, add language to refine the output, and edit the generated responses to get better, personalized results. Then share your work as a Loop component to meet your teammates where they are, in Teams, Outlook, Whiteboard, or Word for the web. Copilot in Loop is currently in private preview.

Where specialized software fits

One impact of technology on jobs, which I did not explore in my last essay, is the trend towards specialization. As new technologies are adopted, some jobs are automated and new industries develop. Before cars, people used horses or walked to get around. There was a limited number of jobs related to transportation, such as blacksmiths, carriage makers, and stable workers. The invention of the car made travel faster, more efficient, and available to a larger number of people. As cars became more popular, demand for them increased. This led to the creation of the automobile industry, which required a variety of specialized jobs, including car designers, mechanics, assembly line workers, salespeople, and marketing specialists. The rise of the automobile also created opportunities for other industries to emerge: gas stations, repair shops, and car dealerships were established to support car owners.

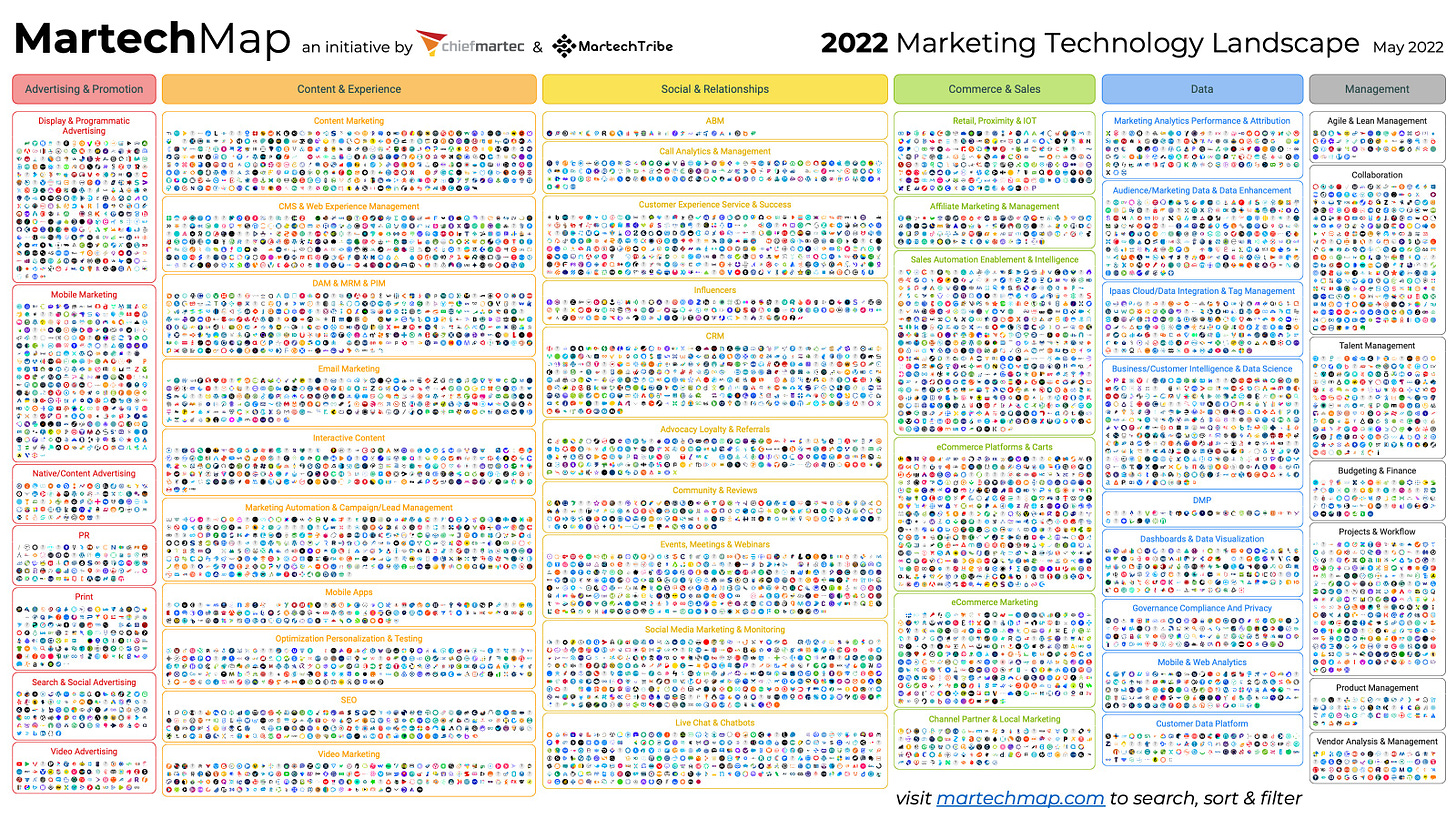

Similarly, the software industry has evolved to cater to the diverse needs of professionals, developing specialized tools for specific tasks and roles. In the creative and marketing sector, for example, specialized software caters to different professional niches. Figma is used by UX designers, Adobe caters to photographers and graphic artists, and Google Analytics is essential for growth marketers. Each of these tools is tailored to the unique requirements of its target users, much as specialized jobs within the automobile industry cater to specific roles and responsibilities.

There will always be a need for specialized software. Even if Copilot + Loop tries to be the interface for all business software, its functionality will be limited. The Loop interface is not suited to building financial models, creating interactive UX designs, or coding. Specialized software vendors also have no incentive to hand more control over to Microsoft. They will create their own copilots. Atlassian has its own Atlassian Intelligence. GitHub, despite being part of Microsoft, also has its own separate Copilot product.

What might the future look like?

Well, what if you could assemble a team of AI agents to work together autonomously? One venture capitalist told me how his friend developed an autonomous product and engineering team. A product manager agent decides on a set of features, which are then passed on to a group of developer agents. The developers write, compile, and test the code. Once the first set of features is complete, the product manager creates a new list of features. When the (human) friend reviewed the code, it worked.
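The setup described above can be sketched as two roles passing work back and forth. Everything here is an assumption for illustration, since how that team was actually built is unknown: `product_manager` and `developer` are hypothetical functions where a real system would call an LLM, the generated "code" is a one-line function, and "testing" means compiling it with Python’s built-in `compile()` and checking what the function returns.

```python
def product_manager(shipped: list[str], round_no: int) -> list[str]:
    """Hypothetical PM agent: proposes the next batch of features.
    A real system would prompt an LLM with what has shipped so far."""
    backlog = [["login", "signup"], ["search"]]
    if round_no >= len(backlog):
        return []  # nothing left to build
    return [f for f in backlog[round_no] if f not in shipped]


def developer(feature: str) -> str:
    """Hypothetical developer agent: 'writes' the code for one feature."""
    return f"def {feature}():\n    return '{feature} ok'\n"


def run_tests(feature: str, source: str) -> bool:
    """Compile the generated source, run the feature's function, check its output.
    exec() runs code in-process; a real system would sandbox generated code."""
    namespace: dict = {}
    try:
        exec(compile(source, "<generated>", "exec"), namespace)
    except SyntaxError:
        return False
    return namespace[feature]() == f"{feature} ok"


shipped: list[str] = []
round_no = 0
while features := product_manager(shipped, round_no):
    for feature in features:  # each feature goes to a developer agent
        if run_tests(feature, developer(feature)):
            shipped.append(feature)
    round_no += 1

print(shipped)  # prints: ['login', 'signup', 'search']
```

The loop ends when the product manager has nothing new to propose, which mirrors the "new list of features after each round" cycle.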

This might be a vision of the future: copilot agents from different software vendors working together. Microsoft Copilot will extract the technical requirements from a product requirements document in Word, then instruct Atlassian Intelligence to create a Jira ticket for each technical requirement. These tickets will then be handed off to GitHub Copilot to write, test, and ship the code.
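This hand-off chain is essentially a pipeline in which each vendor’s copilot consumes the previous one’s output. The sketch is speculative: the three functions are hypothetical stand-ins with hard-coded behavior, not real Microsoft, Atlassian, or GitHub APIs, and treating lines that start with "MUST" as technical requirements is an assumption made only to keep the example small.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    key: str
    requirement: str
    status: str = "To Do"


def extract_requirements(prd_text: str) -> list[str]:
    """Hypothetical stand-in for Microsoft Copilot reading a Word PRD:
    every line starting with 'MUST' is treated as a technical requirement."""
    return [line.removeprefix("MUST").strip()
            for line in prd_text.splitlines() if line.startswith("MUST")]


def create_tickets(requirements: list[str], project: str = "APP") -> list[Ticket]:
    """Hypothetical stand-in for Atlassian Intelligence: one ticket per requirement."""
    return [Ticket(f"{project}-{i}", req) for i, req in enumerate(requirements, start=1)]


def implement(ticket: Ticket) -> Ticket:
    """Hypothetical stand-in for GitHub Copilot writing, testing, and shipping code."""
    ticket.status = "Done"
    return ticket


prd = """Product: expense tracker
MUST let users upload receipts
MUST export a monthly CSV report
Nice to have: dark mode"""

for t in map(implement, create_tickets(extract_requirements(prd))):
    print(t.key, t.status, t.requirement)
```

Each stage only needs to agree on the shape of what it hands to the next one, which is why interoperable copilots from different vendors are at least conceivable.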

Curated reads:

Commercial: Autonomous Agents & Agent Simulations

Societal: ChaosGPT

Technical: ReAct: Synergizing Reasoning and Acting in Language Models
